Ganit Business Solutions

https://ganitinc.com

About

Ganit Inc. is in the business of enhancing the Decision Making Power (DMP) of businesses by offering solutions that lie at the crossroads of discovery-based artificial intelligence, hypothesis-based analytics, and the Internet of Things (IoT).

The company offers a functioning product suite alongside bespoke services. The goal is to integrate these solutions into the core of its clients' decision-making processes as seamlessly as possible. Ganit serves customers in the FMCG/CPG, Retail, Logistics, Hospitality, Media, Insurance, and Banking sectors from offices in both India and the United States. The company views data as a strategic resource that can help businesses grow both their top and bottom lines. It builds and implements AI and ML solutions that are purpose-built for specific sectors to increase decision velocity and decrease decision risk.

Jobs at Ganit Business Solutions

Roles & Responsibilities

- Data Engineering Excellence: Design and implement data pipelines using formats like JSON, Parquet, CSV, and ORC, utilizing batch and streaming ingestion.

- Cloud Data Migration Leadership: Lead cloud migration projects, developing scalable Spark pipelines.

- Medallion Architecture: Implement Bronze, Silver, and Gold tables for scalable data systems.

- Spark Code Optimization: Optimize Spark code to ensure efficient cloud migration.

- Data Modeling: Develop and maintain data models with strong governance practices.

- Data Cataloging & Quality: Implement cataloging strategies with Unity Catalog to maintain high-quality data.

- Delta Live Table Leadership: Lead the design and implementation of Delta Live Tables (DLT) pipelines for secure, tamper-resistant data management.

- Customer Collaboration: Collaborate with clients to optimize cloud migrations and ensure best practices in design and governance.

Educational Qualifications

- Experience: Minimum 5 years of hands-on experience in data engineering, with a proven track record in complex pipeline development and cloud-based data migration projects.

- Education: Bachelor’s or higher degree in Computer Science, Data Engineering, or a related field.

Skills

- Must-have: Proficiency in Spark, SQL, Python, and other relevant data processing technologies. Strong knowledge of Databricks and its components, including Delta Live Tables (DLT) pipeline implementations. Expertise in optimizing Spark code for on-premises to cloud migration and in Medallion Architecture.

Good to Have

- Familiarity with AWS services (experience with additional cloud platforms like GCP or Azure is a plus).

Soft Skills

- Excellent communication and collaboration skills, with the ability to work effectively with clients and internal teams.

Certifications

- AWS/GCP/Azure Data Engineer Certification.

The recruiter has not been active on this job recently. You may apply but please expect a delayed response.

Job Description:

We are looking for a Big Data Engineer who has worked across the entire ETL stack: someone who has ingested data in batch and live-stream formats, transformed large volumes of data daily, built data warehouses to store the transformed data, and integrated different visualization dashboards and applications with the data stores. The primary focus will be on choosing optimal solutions for these purposes, then implementing, maintaining, and monitoring them.

Responsibilities:

- Develop, test, and implement data solutions based on functional / non-functional business requirements.

- Code in Scala and PySpark daily, on cloud as well as on-prem infrastructure

- Build data models that store the data in the most optimized manner

- Identify, design, and implement process improvements: automating manual processes, optimizing data delivery, re-designing infrastructure for greater scalability, etc.

- Implementing the ETL process and optimal data pipeline architecture

- Monitoring performance and advising any necessary infrastructure changes.

- Create data tools for analytics and data scientist team members that assist them in building and optimizing our product into an innovative industry leader.

- Work with data and analytics experts to strive for greater functionality in our data systems.

- Proactively identify potential production issues and recommend and implement solutions

- Must be able to write quality code and build secure, highly available systems.

- Create design documents that describe the functionality, capacity, architecture, and process.

- Review peers' code and pipelines before deploying to production, checking for optimization issues and adherence to code standards

Skill Sets:

- Good understanding of optimal extraction, transformation, and loading of data from a wide variety of data sources using SQL and ‘big data’ technologies.

- Proficient understanding of distributed computing principles

- Experience in working with batch processing/real-time systems using various open-source technologies like NoSQL, Spark, Pig, Hive, Apache Airflow.

- Experience implementing complex projects dealing with considerable data sizes (petabyte scale).

- Optimization techniques (performance, scalability, monitoring, etc.)

- Experience with integration of data from multiple data sources

- Experience with NoSQL databases, such as HBase, Cassandra, MongoDB, etc.

- Knowledge of various ETL techniques and frameworks, such as Flume

- Experience with various messaging systems, such as Kafka or RabbitMQ

- Creation of DAGs for data engineering

- Expert at Python/Scala programming, especially for data engineering/ETL purposes

Technologies & Languages

- Azure

- Databricks

- SQL Server

- ADF

- Snowflake

- Data Cleaning

- ETL

- Azure DevOps

- Intermediate Python/PySpark

- Intermediate SQL

- Beginner's knowledge of, or willingness to learn, Spotfire

- Data Ingestion

- Familiarity with CI/CD or Agile

Must have:

- Azure – VM, Data Lake, Databricks, Data Factory, Azure DevOps

- Python/Spark (PySpark)

- SQL

Good to have:

- Docker

- Kubernetes

- Scala

The candidate should have a good understanding of:

- How to build pipelines – ETL and ingestion

- Data Warehousing

- Monitoring

Responsibilities:

Must be able to write quality code and build secure, highly available systems.

Assemble large, complex data sets that meet functional / non-functional business requirements.

Identify, design, and implement internal process improvements, with guidance: automating manual processes, optimizing data delivery, re-designing infrastructure for greater scalability, etc.

Create data tools for analytics and data scientist team members that assist them in building and optimizing our product into an innovative industry leader.

Monitoring performance and advising any necessary infrastructure changes.

Defining data retention policies.

Implementing the ETL process and optimal data pipeline architecture

Build analytics tools that utilize the data pipeline to provide actionable insights into customer acquisition, operational efficiency, and other key business performance metrics.

Create design documents that describe the functionality, capacity, architecture, and process.

Develop, test, and implement data solutions based on finalized design documents.

Work with data and analytics experts to strive for greater functionality in our data systems.

Proactively identify potential production issues and recommend and implement solutions

Roles & Responsibilities-

• Responsible for bringing new logos

• Responsible for designing & institutionalizing sales process

• Researching, planning, and implementing new GTM initiatives

• Closing leads and managing them through the sales cycle

• Understanding client needs and responding effectively with a plan to meet them

• Developing solutions and organizing, planning, creating & delivering compelling proof of concept demonstrations

• Think strategically to see the bigger picture and set aims and objectives in order to develop and expand the business

• Developing quotes and proposals for prospective clients and keeping the related tasks on track

• Handle creating, processing, managing & documenting multiple RFPs, RFQs & POCs for Clients

• Setting goals for the business development team and developing strategies to meet those goals

• Identifying and attending relevant conferences and industry events.

Qualifications-

• Master’s degree in a marketing-related field

• 5-6 years of proven experience in IT / Analytics / Consulting companies

• Excellent written and verbal communication skills

• Exceptional time management & organizational skills

• Complete knowledge of Bid Management / Pre-sales process with proven ability to deliver large scale, high-quality proposals in the US territory

• Good expertise in creating PowerPoint decks with appropriate visual elements

• Thorough understanding of Market Analytics, Intelligence & specific industry knowledge

• Ability to flourish with minimal guidance, be proactive, and handle uncertainty.

Responsibilities:

- Work with development teams and product managers to ideate scalable software solutions

- Design client-side and server-side architecture

- Design, develop and manage well-functioning databases and applications

- Develop web applications implementing MVT architecture using Django web application frameworks

- Write effective APIs using industry standard design patterns

- Manage the complete software development process from conception to deployment

- Maintaining and upgrading the software following deployment

- Should be able to visualize a proposed system and be able to build it

- Development of applications leveraging Django/Flask based REST APIs

- Work with server-side technologies including databases and MVC/MVT patterns

- Maintain code in a version control system such as Git

- Should know all the phases of SDLC from requirement gathering to support and maintenance.

Requirements:

- 3-7 years of proven experience as a Back End Developer

- Familiarity with common solution stacks

- Strong understanding of algorithms, data structures and system design, for scale, performance and security

- Ability to develop back-end website applications

- Knowledge of back-end languages and frameworks (Python with Flask/Django) and/or a JavaScript runtime such as Node.js

- Familiarity with databases (RDBMS – MySQL/PostgreSQL, optionally NoSQL DBs like MongoDB), web servers (Apache) and UI/UX design

- Great attention to detail

- Organizational skills

- An analytical mind

- Degree in Computer Science or relevant field

Technical Skills & Competencies

Mandatory:

- Web Development – HTML, CSS, JavaScript

- Frameworks – ReactJS/AngularJS

- Server-side development – Python/Django/Node.js

- Databases – SQL or NoSQL databases (SQL Server/MongoDB)

- API design concepts and development of RESTful web services

Preferable:

- Familiarity with developing reusable frameworks and libraries for use by multiple development teams

- Familiarity with cloud-native application architecture patterns

- Hands-on experience with project management tools like Jira

- Experience using DevOps Tools for CI/CD

- Conversant with agile methodologies

Roles & Responsibilities

- Work independently or in a small team setup

- Manage a team of highly skilled Frontend/Full Stack and Backend developers

- Creating, executing, and maintaining project plans including activity definition, sequence, dependencies, work effort, duration, and resource requirements

- Work with the technical team, communicating requirements and ensuring all product development meets the goals of the project and overall business and strategic goals

- Project management, including release management, add-on compatibility reporting, and documentation updates

- Assist in other ad-hoc or administrative tasks as part of daily/routine business activities

- Work with product technical architects to design new products or enhancements. Take part in system development including analysis, coding, and testing

- Perform design and code reviews with senior technical staff

- Be the person everyone turns to for resolving tough technical problems

- Work closely with other teams/vendors to connect front-end components with the other (often third-party) web and data services and support the back-end developers by integrating their work with the application

- Mentor the team on proper standards and techniques to improve their accuracy and efficiency

- Perform unit testing and system integration testing, and assist with user acceptance testing

- Articulate business requirements in sufficient detail that a technical solution can be designed and engineered

- Develop a technical understanding of how data flows from various sources

- Provide ongoing support to applications used within the organization

Requirements

- 5+ years of experience in product development, with 2+ years of experience in a leadership role in the current organization

- Reliable, self-motivated, and self-disciplined individual capable of planning and executing multiple projects simultaneously within a fast-paced environment

- Exceptional debugging skills and strong experience with performance tuning

- Excellent technical, analytical, and organizational skills

- Good range of hands-on technical experience

- Expert knowledge in Systems Development Life Cycle (SDLC)

- Expert understanding of Node.js and JavaScript

- Familiarity with code revisioning and repo maintenance on Git, code profiling and auditing

Job Brief

We are looking for a Full Stack Developer to produce scalable software solutions. You will be part of a cross-functional team that is responsible for the full software development life cycle, from conception to deployment.

Responsibilities:

- Work with development teams and product managers to ideate scalable software solutions

- Design client-side and server-side architecture

- Build the front-end of applications through appealing visual design

- Design, develop and manage well-functioning databases and applications

- Develop web applications implementing MVT architecture using Django web application frameworks

- Write effective APIs

- Manage the complete software development process from conception to deployment

- Maintaining and upgrading the software following deployment

- Should be able to visualize a proposed system and be able to build it

- Experience with Django, Flask, and REST frameworks

- Work with server-side technologies including databases and MVC and MVT patterns

- Maintain code in a version control system such as Git

- Should know all the phases of SDLC from requirement gathering to support and maintenance.

Requirements:

- 3-5 years of proven experience as a Full Stack Developer

- Familiarity with common stacks

- Strong understanding of algorithms, data structures and system design

- Knowledge of LAMP and MEAN solution stacks

- Ability to develop front end website architecture and back-end website applications

- Knowledge of multiple front-end languages and libraries (HTML/ CSS, JavaScript, React, Angular, Vue, XML, jQuery and LESS)

- Knowledge of multiple back-end languages (C#, Java, Python) and a JavaScript runtime such as Node.js

- Familiarity with databases (MySQL, SQL, MongoDB), web servers (Apache) and UI/UX design

- Excellent communication and teamwork skills

- Great attention to detail

- Organizational skills

- An analytical mind

- Degree in Computer Science or relevant field

Set and manage client expectations on Data Science solutions quality

Drive customer delight through actionable insights

Identify risks and develop mitigation plans accordingly

Be the face of Ganit and represent Ganit’s vision and capabilities at the highest level of integrity and quality

Understand business requirements and translate to analytical requirements

Solve complex business problems for organizations, leveraging conventional and new-age data sources and applying cutting-edge advanced analytics techniques

Communicate effectively with client / offshore team to manage client expectations and ensure timeliness and quality of insights.

Collaborate with internal team to execute solution development.

Challenge status quo of projects and drive superior project management.

Identify Work Breakdown Structure and manage team members

Business Development-

Explore profitable avenues and opportunities for Ganit to grow in the account

Act as a thought leader to imagine and implement new ideas at client and Ganit

Collaborate with Ganit’s marketing and branding team on brand-building activities

Identify cross-pollination ideas across accounts and subgroups

Possess business acumen to manage revenues profitably and meet financial goals consistently.

Able to quantify business value for clients and create win-win commercial propositions.

Have ability to adapt to the changing business priorities in a fast-paced business environment.

Educational Qualifications-

Master’s degree from a Tier 1 institute

Understand business problems and translate business requirements into technical requirements.

Conduct complex data analysis to ensure data quality & reliability i.e., make the data talk by extracting, preparing, and transforming it.

Identify, develop and implement statistical techniques and algorithms to address business challenges and add value to the organization.

Gather requirements and communicate findings in the form of a meaningful story with the stakeholders.

Build & implement data models using predictive modelling techniques.

Interact with clients and provide support for queries and delivery adoption.

Lead and mentor data analysts.

What we are looking for-

Apart from your love for data and ability to code even while sleeping you would need the following.

Minimum of 2 years of experience in designing and delivering data science solutions.

You should have successful retail/BFSI/FMCG/Manufacturing/QSR projects in your kitty to show off.

Deep understanding of various statistical techniques, mathematical models, and algorithms to start the conversation with the data in hand.

Ability to choose the right model for the data and translate that into code using R, Python, VBA, SQL, etc.

Bachelor’s/Master’s degree in Engineering/Technology, an MBA from a Tier-1 B-School, or an MSc in Statistics or Mathematics.

Responsibilities:

- Must be able to write quality code and build secure, highly available systems.

- Assemble large, complex datasets that meet functional / non-functional business requirements.

- Identify, design, and implement internal process improvements, with guidance: automating manual processes, optimizing data delivery, re-designing infrastructure for greater scalability, etc.

- Create data tools for analytics and data scientist team members that assist them in building and optimizing our product into an innovative industry leader.

- Monitoring performance and advising any necessary infrastructure changes.

- Defining data retention policies.

- Implementing the ETL process and optimal data pipeline architecture

- Build analytics tools that utilize the data pipeline to provide actionable insights into customer acquisition, operational efficiency, and other key business performance metrics.

- Create design documents that describe the functionality, capacity, architecture, and process.

- Develop, test, and implement data solutions based on finalized design documents.

- Work with data and analytics experts to strive for greater functionality in our data systems.

- Proactively identify potential production issues and recommend and implement solutions

Skillsets:

- Good understanding of optimal extraction, transformation, and loading of data from a wide variety of data sources using SQL and AWS ‘big data’ technologies.

- Proficient understanding of distributed computing principles

- Experience in working with batch processing/real-time systems using various open-source technologies like NoSQL, Spark, Pig, Hive, Apache Airflow.

- Experience implementing complex projects dealing with considerable data sizes (petabyte scale).

- Optimization techniques (performance, scalability, monitoring, etc.)

- Experience with integration of data from multiple data sources

- Experience with NoSQL databases, such as HBase, Cassandra, MongoDB, etc.

- Knowledge of various ETL techniques and frameworks, such as Flume

- Experience with various messaging systems, such as Kafka or RabbitMQ

- Good understanding of Lambda Architecture, along with its advantages and drawbacks

- Creation of DAGs for data engineering

- Expert at Python/Scala programming, especially for data engineering/ETL purposes

Similar companies

About the company

Founded in 2004, Inteliment helps some of the most forward-thinking enterprises worldwide derive maximum business impact through their Data. With 20+ years in analytics, we help businesses harness deep expertise and the latest technologies to drive innovation, sharpen their competitive edge, and stay future-ready.

Inteliment is recognized as a leading provider of Data Driven Analytical Solutions & Services in Visual & Predictive Analytics, Data Science, IoT, Mobility & Artificial Intelligence areas. We strive for the success of our customers through Innovation, Technology and Partnerships.

Inteliment operates its Delivery & IP Centers in India and Australia and through its group companies in Singapore, Finland and USA.

Jobs: 0

About the company

We are a fast-growing virtual & hybrid events and engagement platform. Gevme has already powered hundreds of thousands of events around the world for clients like Facebook, Netflix, Starbucks, Forbes, MasterCard, Citibank, Google, and the Singapore Government.

We are a SaaS product company with a strong engineering and family culture; we are always looking for new ways to enhance the event experience and empower efficient event management. We’re on a mission to groom the next generation of event technology thought leaders as we grow.

Join us if you want to become part of a vibrant and fast moving product company that's on a mission to connect people around the world through events.

Jobs: 6

About the company

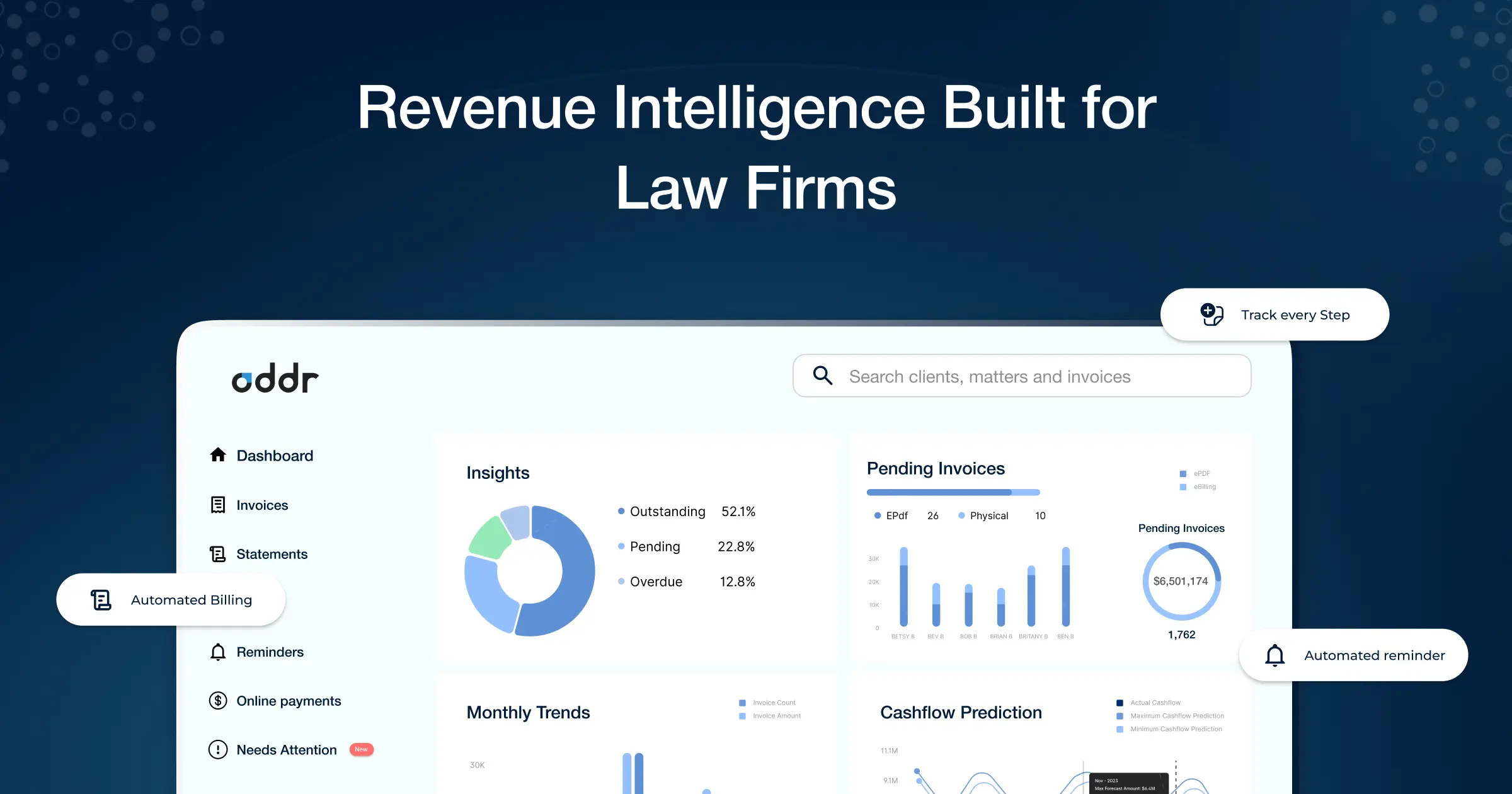

Oddr is the legal industry’s only AI-powered invoice-to-cash platform. Oddr’s platform centralizes, streamlines, and accelerates every step of billing and collections, from bill preparation and delivery to collections and reconciliation, enabling new possibilities in analytics, forecasting, and client service that eliminate revenue leakage and increase profitability across the billing and collections lifecycle.

www.oddr.com

Jobs: 9

About the company

At LearnTube, we're reimagining how the world learns, making education accessible, affordable, and outcome-driven using Generative AI. Our platform turns scattered internet content into structured, personalised learning journeys using:

- AI-powered tutors that teach live, solve doubts instantly, and give real-time feedback

- Frictionless delivery via WhatsApp, mobile, and web

- Trusted by 2.2 million learners across 64 countries

Founded by Shronit Ladhani and Gargi Ruparelia, both second-time entrepreneurs and ed-tech builders:

- Shronit is a TEDx speaker and an outspoken advocate for disrupting traditional learning systems.

- Gargi is one of the Top Women in AI in India, recognised by the government, and leads our AI and scalability roadmap.

Together, they bring deep product thinking, bold storytelling, and executional clarity to LearnTube’s vision. LearnTube is proudly backed by Google as part of their 2024 AI First Accelerator, giving us access to cutting-edge tech, mentorship, and cloud credits.

Jobs: 4

About the company

Wama Technology integrates state-of-the-art technology smoothly to promote corporate success, providing a comprehensive “One-Stop Solution” for all digital demands, from cloud services, artificial intelligence, and machine learning to mobile and web app development. Wama Technology offers customized solutions that enable businesses to prosper in the digital era. Our team prioritizes innovation, user experience, and client happiness to deliver digital transformation. Through the strategic use of technology, Wama Technology helps companies improve user interaction, automate processes, and deliver new ideas.

Jobs: 6
