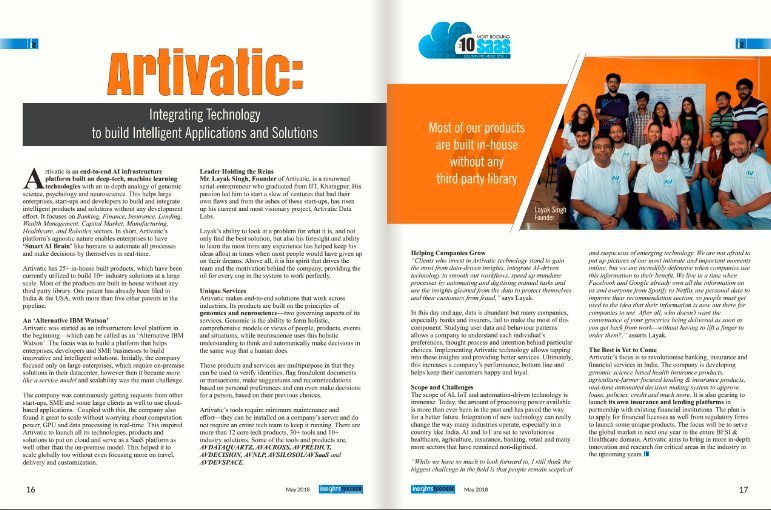

About Artivatic

About

Company video

Photos

Connect with the team

Similar jobs

Job description:

Job Description – Manager / Senior Manager – Marketplaces

Company: Indian retail sweet and snacks brand

Location: Lawrence Road Industrial Area, Delhi

Work Mode: Hybrid

Experience: 5+ years

Relevant Experience: 4+ years managing marketplaces such as Amazon, Flipkart, and quick commerce platforms.

Work Schedule: 5 Days WFO

About the Company

It is a fast-growing Indian retail sweet and snacks brand focused on nostalgic, homemade-style products with a strong emphasis on clean labelling, healthier alternatives, and authentic storytelling.

Our long-term vision is ambitious: to build a brand that achieves what traditional legacy brands accomplished over decades, but at a much faster pace.

Role Overview

- The Manager / Senior Manager – Marketplaces will be responsible for owning and scaling the brand’s presence across online marketplaces.

- This role will lead revenue growth, visibility, and profitability across platforms such as Amazon, Flipkart, and quick commerce channels.

- The role requires strong hands-on expertise in marketplace operations, performance marketing, promotions, and category growth.

- The individual will work closely with founders, supply chain, marketing, finance, and operations teams to drive sustainable marketplace performance.

Key Responsibilities

- Own end-to-end marketplace performance including product listings, revenue, margins, and growth targets.

- Manage day-to-day operations across Amazon, Flipkart, and quick commerce platforms.

- Drive visibility through search optimization, catalog management, and platform-led growth levers.

- Plan and execute promotions, deals, and seasonal campaigns in coordination with platform teams.

- Manage marketplace advertising, budgeting, and ROI optimization.

- Track sales performance, pricing, inventory health, and demand forecasting.

- Coordinate with supply chain and operations teams to ensure smooth fulfilment and inventory availability.

- Analyze marketplace data and consumer insights to improve conversion, ratings, and repeat purchases.

- Build and maintain strong relationships with marketplace account managers and platform stakeholders.

Desired Skills & Qualifications

- 5+ years of overall experience in e-commerce or digital sales.

- 4+ years of hands-on experience managing marketplaces such as Amazon, Flipkart, and quick commerce platforms.

- Strong understanding of marketplace algorithms, ads, promotions, and category management.

- Experience scaling brands on marketplaces in FMCG, D2C, or consumer goods categories preferred.

- Strong analytical skills with the ability to interpret performance data and drive actionable insights.

- Ability to work in fast-paced, high-growth environments with high ownership.

- Strong stakeholder management and communication skills.

Why Join Us

- Be part of building a ₹1000 Cr consumer brand from the ground up

- Opportunity to work closely with founders and leadership

- Long-term wealth creation through ESOP participation

- High ownership, high impact role in a fast-scaling organization

About the organization

Bhanzu is a math EdTech company that recently raised a Series B round led by Epiq Capital, with participation from Z3Partners and existing investors.

Website - https://www.bhanzu.com/

Role: Curriculum Developer - Mathematics

Location: Bangalore, HSR Layout.

Key Responsibilities:

- Develop and design interactive and engaging math content that nurtures curiosity.

- Collaborate with the curriculum team to ensure alignment with Bhanzu’s standards and learning objectives.

- Craft lesson plans, exercises, and assessments suitable for students across different grade groups.

- Continuously refine content based on feedback and best practices in educational content.

Qualifications:

- Bachelor’s degree in Mathematics (B.Sc. Mathematics Honours) or related STEM fields; Master’s in Mathematics or a relevant applied degree is preferred.

- Strong understanding of K-12 mathematics concepts and pedagogy.

Preference For Candidates with:

- Previous experience in curriculum development.

- Teaching experience, especially with K-12 students.

- Experience in developing global math content, especially for regions like the US and UK.

- Understanding of cultural nuances and educational standards.

- A creative and innovative approach to making math concepts fun and engaging.

RESPONSIBILITIES

● Responsible for communicating with customers from a collections standpoint

● Omnichannel communication via email, SMS, telecalling, etc.

● Contact clients and discuss their overdue payments in a friendly manner, and proactively propose solutions based on defined SOPs

● Monitor accounts on a daily basis

● Identify outstanding account receivables

● Collaborate on debt collection efforts with the Finance, Sales, and Legal teams

● Investigate historical data for debts and bills

● Develop debt collection strategies and plans

● Take action to encourage timely payments

● Process payments and refunds

● Resolve billing issues

● Ensure compliance with all federal and local regulations for the collection process

● Update account status records

REQUIREMENTS

● Bachelor’s Degree in Finance, Business or related field

● 3-6 years of relevant experience

● Experience working in the collections team for consumer credit products such as Credit Card, Personal Loan, Education Loan, 2W and 4W, Home Loan, etc.

● Excellent knowledge of various collection techniques

● Experience in developing and enacting debt recovery plans and strategies to prevent losses

● Knowledge of MS Office, data interpretation, databases, and alternate modes of collection

● Excellent communication and interpersonal skills

● Demonstrated experience of being empathetic with customers

● Problem-solving and critical-thinking skills

Hello,

Greetings from CodersBrain!

Coders Brain is a global leader in IT services, digital, and business solutions that partners with its clients to simplify, strengthen, and transform their businesses. We ensure the highest levels of certainty and satisfaction through a deep-set commitment to our clients, comprehensive industry expertise, and a global network of innovation and delivery centers.

This is regarding the urgent opening for the ROR Developer role. We found your profile in the Cutshort database and it seems like a good fit for the organization. If you are interested, please reply with your updated CV along with the details below:

Permanent Payroll: CodersBrain

Location: Mumbai/Kolkata

Notice Period: Immediate/15 days

Job Description

Scope of work

- This role is an exciting opportunity to build highly interactive, customer-facing applications and products.

- The candidate will help transform vast collections of data into actionable insights with intuitive, easy-to-use interfaces and visualizations.

- The candidate will leverage the power of JavaScript and Ruby on Rails to build novel features and improvements to our current suite of tools.

- This team is responsible for customer-facing applications that deliver SEO data insights.

- Through the applications, customers can access insights, workflows, and aggregations of information above and beyond core data offerings.

Responsibility

- Build and maintain the core frontend application.

- Work collaboratively with the engineers on the Frontend team to ensure quality and performance of the systems through code reviews, documentation, analysis, and employing engineering best practices to ensure high-quality software.

- Contribute to the org's DevOps culture by maintaining our systems, including creating documentation, run books, monitoring, alerting, integration tests, etc.

- Participate in architecture design and development for new features and capabilities, and for migration of legacy systems, to meet business and customer needs.

- Take turns in the on-call rotation, handling systems and operations issues as they arise, including responding to off-hours alerts.

- Collaborate with other teams on dependent work and integrations, and be vigilant for activities happening outside the team that would have an impact on the work your team is doing.

- Work with Product Managers and UX Designers to deliver new features and capabilities.

- Use good security practices to protect code and systems.

- Pitch in where needed during major efforts or when critical issues arise.

- Give constructive, critical feedback to other team members through pull requests, design reviews, and other methods.

- Seek out opportunities and work to grow skills and expertise.

C) Skills Required

Essential Skills

- JavaScript (ExtJS framework preferred)

- Ruby

- Ruby on Rails

- MySQL

- Docker

Desired Skills

- Terraform

- Basic Unix/Linux administration

- Redis

- Resque

- TravisCI

- AWS ECS

- AWS RDS

- AWS EMR

- AWS S3

- AWS Step Functions

- AWS Lambda Functions

- Experience working remotely with a distributed team

- Great problem-solving skills

D) Other Information

Educational Qualifications: Bachelor's degree/MCA

Experience: 5–8 years

If you are interested in this position, please reply to this email with your updated CV and share the following details:

Current CTC:

Expected CTC:

Current Company:

Notice Period:

Are you okay with a 1-week notice period? If not, are you comfortable freelancing with us until joining:

Current Location:

Preferred Location:

Total experience:

Relevant experience:

Highest qualification:

DOJ (if offer in hand from another company):

Offer in hand:

Alternate number:

Interview availability:

Requirements:

- Bachelor's or Master’s degree in Computer Science, Engineering, or related field.

- 5+ years of experience in Node.js and Angular development.

- Experience with NoSQL database technologies like MongoDB is a must.

- Strong problem-solving skills and the ability to work effectively in a fast-paced environment.

- Excellent communication skills with the ability to articulate technical concepts to non-technical stakeholders.

- Ability to prioritize tasks, manage projects properly and meet project deadlines.

- Experience with version control systems (e.g., Git) and CI/CD pipelines is a must.

Responsibilities:

- Lead the product development team in designing, implementing, and maintaining a robust MEAN stack application.

- Plan and execute development sprints in Jira based on the given feature documentation.

- Allocate resources for sprints and give time estimates.

- Conduct daily standup meetings for the team and track sprint progress.

- Provide technical leadership and mentorship to junior developers, guiding them in best practices for MEAN Stack development.

- Collaborate with stakeholders to gather requirements, provide technical insights, and communicate project progress.

- Stay updated with the latest development trends, tools, and technologies, and advocate for their adoption within the team.

- Ensure that the APIs we create are highly scalable and maintainable and meet performance and security requirements.

- Maintain at least 90% test coverage for the Node.js backend.

- Conduct detailed code reviews and refactoring, and ensure adherence to coding standards, best practices, and project guidelines.

- Optimise frontend components for maximum performance across a vast array of web-capable devices and browsers.

- Develop detailed, annotated wireframes depicting all elements on unique screen types, including content, functional, navigation, and interaction specifications.

- Articulate the rationale for the approach in the context of both business and user needs.

- Define processes and deliverables that meet project goals and are reasonable within operational, cultural, and technology constraints.

- Submit a weekly sprint and product performance report to management.

About Company:

The company is a global leader in secure payments and trusted transactions. They are at the forefront of the digital revolution that is shaping new ways of paying, living, doing business and building relationships that pass on trust along the entire payments value chain, enabling sustainable economic growth. Their innovative solutions, rooted in a rock-solid technological base, are environmentally friendly, widely accessible and support social transformation.

Requirements:

- Angular and AngularJS; able to work on the architecture, design, and development of complex UI screens

- OOP fundamentals (Encapsulation, Abstraction, Inheritance, Polymorphism), MVC architecture

- UML (Class/Sequence diagrams)

- Design Patterns (Should be able to explain some common design patterns)

- Versioning (CVS, VSS, Git etc. – why is it used?, check-in/check-out, merging, how to resolve conflicts?, etc.)

- JDBC (Statement types, Obtaining connection, ResultSet, Drivers)

- Hibernate/JPA, DB (PK, FK, Normalization), SQL (Insert/Update/Delete/Select queries)

- CSS (Styles, Classes)

- SDLC (What is it?, benefits, etc.)

- Robust Programming, Debugging (Common IDE features and their use)

- Analytical skills – ability to look at a problem with different perspectives: define solutions understanding the pros and cons of each

- Hands-on experience with Continuous Integration and Maven

- Estimation, Planning and Tracking, Timely Issue Escalation and Resolution

- Exposure to performance tools such as JProfiler, Dynatrace, etc.

Job Description :

We are looking for a Front-End Web Developer who is motivated to combine the art of design with the art of programming. Responsibilities will include translation of the UI/UX design wireframes to actual code that will produce visual elements of the application. You will work with the UI/UX designer and bridge the gap between graphical design and technical implementation, taking an active role on both sides and defining how the application looks as well as how it works.

Responsibilities :

- Develop new user-facing features

- Build reusable code and libraries for future use

- Ensure the technical feasibility of UI/UX designs

- Optimize application for maximum speed and scalability

- Assure that all user input is validated before submitting to back-end

- Collaborate with other team members and stakeholders

Skills :

* Proficient understanding of web markup, including HTML5, CSS3.

* Basic understanding of server-side CSS pre-processing platforms, such as LESS and SASS.

* Proficient understanding of client-side scripting and JavaScript frameworks, including jQuery.

* Good understanding of asynchronous request handling, partial page updates, and AJAX.

* Basic knowledge of image authoring tools, to be able to crop, resize, or perform small adjustments on an image.

* Familiarity with tools such as Gimp or Photoshop is a plus.

* Proficient understanding of cross-browser compatibility issues and ways to work around them.

* Proficient understanding of code versioning tools, such as Git / Bitbucket.

* Good understanding of SEO principles and of how to ensure that the application adheres to them.

What we're looking for:

You will help define the next-generation experience for Citrix Workspace app on desktop platforms and modernize its tech stack. If you believe in a bottom-up culture and are passionate about delivering high-quality software at scale and striving for technical agility in a fast-paced, high-performance environment, then we are looking for you!

Position Overview

The Engineering Manager is responsible for leading a critical iOS development team in Bangalore focused on building exciting new solutions for our iOS users. This team will drive key strategic initiatives while collaborating closely with our iOS development teams globally.

Role Responsibilities

- Build and manage a team of highly skilled software engineers using agile practices.

- Take responsibility for the design, development, and maintenance of software that is used by millions of people daily.

- Have the flexibility to spend significant time as an individual contributor as well, writing code and designing applications and APIs.

- Design and develop advanced applications for mobile platforms (mobile apps and/or cloud services).

- Help own and deliver on the team goals owned out of Bangalore by challenging existing thinking and bringing in new technologies and solutions, applying your experience to guide the future of Citrix.

- Collaborate with various stakeholders across different business functions and geographies.

- Lead the team through the development process and seek opportunities for continuous improvement and best practices on multiple projects of high complexity.

Basic Qualifications

- Bachelor’s degree in computer science and 9+ years of software development experience, with 1+ years in a technical manager role

- Extremely deep understanding of mobile development frameworks and deep experience in cross-platform application development with frameworks such as React, React Native, and Meteor

- Experience with iOS frameworks: UIKit, Core Foundation, Core Animation, Core Graphics, Auto Layout, AVFoundation, SceneKit, GCD, etc.

- In-depth understanding of Apple’s recommended design principles, interface guidelines, and best practices for coding

- Experience working with remote data via REST, JSON, and web services

- Strong foundational knowledge of computer science principles

- Sound Agile planning and design skills

- Demonstrated ability to lead and collaborate effectively across organizational boundaries.

- Strong interpersonal and leadership skills, to drive collaboration and innovation.

- Experience and interest in guiding and leading team members of other teams

ETL Developer – Talend

Job Duties:

- The ETL Developer is responsible for the design and development of ETL jobs that follow standards and best practices and are maintainable, modular, and reusable.

- Proficiency with Talend or Pentaho Data Integration / Kettle.

- The ETL Developer will analyze and review complex object and data models and the metadata repository in order to structure the processes and data for better management and efficient access.

- Working on multiple projects, and delegating work to Junior Analysts to deliver projects on time.

- Training and mentoring Junior Analysts and building their proficiency in the ETL process.

- Preparing mapping documents to extract, transform, and load data, ensuring compatibility with all tables and requirement specifications.

- Experience in ETL system design and development with Talend / Pentaho PDI is essential.

- Create quality rules in Talend.

- Tune Talend / Pentaho jobs for performance optimization.

- Write relational (SQL) and multidimensional (MDX) database queries.

- Functional knowledge of Talend Administration Center / Pentaho Data Integrator, Job Servers and load balancing setup, and all its administrative functions.

- Develop, maintain, and enhance unit test suites to verify the accuracy of ETL processes, dimensional data, OLAP cubes, and various forms of BI content including reports, dashboards, and analytical models.

- Exposure to the MapReduce components of Talend / Pentaho PDI.

- Comprehensive understanding and working knowledge of Data Warehouse loading, tuning, and maintenance.

- Working knowledge of relational database theory and dimensional database models.

- Creating and deploying Talend / Pentaho custom components is an add-on advantage.

- Java knowledge is nice to have.

Skills and Qualification:

- BE, B.Tech / MS Degree in Computer Science, Engineering or a related subject.

- 3+ years of experience.

- Proficiency with Talend or Pentaho Data Integration / Kettle.

- Ability to work independently.

- Ability to handle a team.

- Good written and oral communication skills.