Infinium Associate

https://infiniumassociates.com

Jobs at Infinium Associate

We are looking for a visionary and hands-on Head of Data Science and AI with at least 6 years of experience to lead our data strategy and analytics initiatives. In this pivotal role, you will take full ownership of the end-to-end technology stack, driving a data-analytics-driven business roadmap that delivers tangible ROI. You will not only guide high-level strategy but also remain hands-on in model design and deployment, ensuring our data capabilities directly empower executive decision-making.

If you are passionate about leveraging AI and data to transform financial services, we invite you to lead our data transformation journey.

Key Responsibilities

Strategic Leadership & Roadmap

- End-to-End Tech Stack Ownership: Define, own, and evolve the complete data science and analytics technology stack to ensure scalability and performance.

- Business Roadmap & ROI: Develop and execute a data analytics-driven business roadmap, ensuring every initiative is aligned with organizational goals and delivers measurable Return on Investment (ROI).

- Executive Decision Support: Create and present high-impact executive decision packs, providing actionable insights that drive key business strategies.

Model Design & Deployment (Hands-on)

- Hands-on Development: Lead by example with hands-on involvement in AI modeling, machine learning model design, and algorithm development using Python.

- Deployment & Ops: Oversee and execute the deployment of models into production environments, ensuring reliability, scalability, and seamless integration with existing systems.

- Leverage expert-level knowledge of Google Cloud Agentic AI, Vertex AI and BigQuery to build advanced predictive models and data pipelines.

- Develop business dashboards for various sales channels and drive data-driven decision-making to improve sales and reduce costs.

Governance & Quality

- Data Governance: Establish and enforce robust data governance frameworks, ensuring data accuracy, security, consistency, and compliance across the organization.

- Best Practices: Champion best practices in coding, testing, and documentation to build a world-class data engineering culture.

Collaboration & Innovation

- Work closely with Product, Engineering, and Business leadership to identify opportunities for AI/ML intervention.

- Stay ahead of industry trends in AI, Generative AI, and financial modeling to keep Bajaj Capital at the forefront of innovation.

Must-Have Skills & Experience

Experience:

- At least 7 years of industry experience in Data Science, Machine Learning, or a related field.

- Proven track record of applying AI and leading data science teams or initiatives that resulted in significant business impact.

Technical Proficiency:

- Core Languages: Proficiency in Python is mandatory, with strong capabilities in libraries such as pandas, NumPy, scikit-learn, and TensorFlow/PyTorch.

- Cloud Data Stack: Expert-level command of Google Cloud Platform (GCP), specifically Agentic AI, Vertex AI and BigQuery.

- AI & Analytics Stack: Deep understanding of the modern AI and Data Analytics stack, including data warehousing, ETL/ELT pipelines, and MLOps.

- Visualization: Power BI in combination with custom web/mobile applications.

Leadership & Soft Skills:

- Ability to translate complex technical concepts into clear business value for stakeholders.

- Strong ownership mindset with the ability to manage end-to-end project lifecycles.

- Experience in creating governance structures and executive-level reporting.

Good-to-Have / Plus

- Domain Expertise: Prior experience in the BFSI domain (Wealth Management, Insurance, Mutual Funds, or Fintech).

- Certifications: Google Professional Data Engineer or Google Professional Machine Learning Engineer certifications.

- Advanced AI: Experience with Generative AI (LLMs), RAG architectures, and real-time analytics.

Job Role: Teamcenter Admin

• Teamcenter and CAD (NX) Configuration Management

• Advanced debugging and root-cause analysis beyond L2

• Code fixes and minor defect remediation

• AWS knowledge, which is foundational to our Teamcenter architecture

• Experience supporting weekend and holiday code deployments

• Operational administration (break/fix, ticket escalations, problem management)

• Support for project activities

• Deployment and code release support

• Hypercare support following the deployment, which is expected to onboard 1,000+ additional users

DataHavn IT Solutions is a company that specializes in big data and cloud computing, artificial intelligence and machine learning, application development, and consulting services. We aim to be a frontrunner in everything to do with data, and we have the expertise to transform customer businesses by making the right use of data.

About the Role

We're seeking a talented and versatile Full Stack Developer with a strong foundation in mobile app development to join our dynamic team. You'll play a pivotal role in designing, developing, and maintaining high-quality software applications across various platforms.

Responsibilities

- Full Stack Development: Design, develop, and implement both front-end and back-end components of web applications using modern technologies and frameworks.

- Mobile App Development: Develop native mobile applications for iOS and Android platforms using Swift and Kotlin, respectively.

- Cross-Platform Development: Explore and utilize cross-platform frameworks (e.g., React Native, Flutter) for efficient mobile app development.

- API Development: Create and maintain RESTful APIs for integration with front-end and mobile applications.

- Database Management: Work with databases (e.g., MySQL, PostgreSQL) to store and retrieve application data.

- Code Quality: Adhere to coding standards and best practices, and ensure code quality through regular code reviews.

- Collaboration: Collaborate effectively with designers, project managers, and other team members to deliver high-quality solutions.

Qualifications

- Bachelor's degree in Computer Science, Software Engineering, or a related field.

- Strong programming skills in relevant languages (e.g., JavaScript, Python, Java).

- Experience with relevant frameworks and technologies (e.g., React, Angular, Node.js, Swift, Kotlin).

- Understanding of software development methodologies (e.g., Agile, Waterfall).

- Excellent problem-solving and analytical skills.

- Ability to work independently and as part of a team.

- Strong communication and interpersonal skills.

Preferred Skills (Optional)

- Experience with cloud platforms (e.g., AWS, Azure, GCP).

- Knowledge of DevOps practices and tools.

- Experience with serverless architectures.

- Contributions to open-source projects.

What We Offer

- Competitive salary and benefits package.

- Opportunities for professional growth and development.

- A collaborative and supportive work environment.

- A chance to work on cutting-edge projects.

About the Role:

As a Data Scientist specializing in Google Cloud, you will play a pivotal role in driving data-driven decision-making and innovation within our organization. You will leverage the power of Google Cloud's robust data analytics and machine learning tools to extract valuable insights from large datasets, develop predictive models, and optimize business processes.

Key Responsibilities:

Data Ingestion and Preparation:

- Design and implement efficient data pipelines (e.g., with Dataflow) for ingesting, cleaning, and transforming data from various sources (e.g., databases, APIs, cloud storage) into Google Cloud Platform (GCP) data warehouses (BigQuery) or data lakes (Cloud Storage).

- Perform data quality assessments, handle missing values, and address inconsistencies to ensure data integrity.

Exploratory Data Analysis (EDA):

- Conduct in-depth EDA to uncover patterns, trends, and anomalies within the data.

- Use visualization tools (e.g., Tableau, Looker) to communicate findings effectively.

Feature Engineering:

- Create relevant features from raw data to enhance model performance and interpretability.

- Explore techniques like feature selection, normalization, and dimensionality reduction.

Model Development and Training:

- Develop and train predictive models using machine learning algorithms (e.g., linear regression, logistic regression, decision trees, random forests, neural networks) on GCP platforms like Vertex AI.

- Evaluate model performance using appropriate metrics and iterate on the modeling process.

Model Deployment and Monitoring:

- Deploy trained models into production environments using GCP's ML tools and infrastructure.

- Monitor model performance over time, identify drift, and retrain models as needed.

Collaboration and Communication:

- Work closely with data engineers, analysts, and business stakeholders to understand their requirements and translate them into data-driven solutions.

- Communicate findings and insights in a clear and concise manner, using visualizations and storytelling techniques.

Required Skills and Qualifications:

- Strong proficiency in Python or R.

- Experience with Google Cloud Platform (GCP) services such as BigQuery, Dataflow, Cloud Dataproc, and Vertex AI.

- Familiarity with machine learning algorithms and techniques.

- Knowledge of data visualization tools (e.g., Tableau, Looker).

- Excellent problem-solving and analytical skills.

- Ability to work independently and as part of a team.

- Strong communication and interpersonal skills.

Preferred Qualifications:

- Experience with cloud-native data technologies (e.g., Apache Spark, Kubernetes).

- Knowledge of distributed systems and scalable data architectures.

- Experience with natural language processing (NLP) or computer vision applications.

- Certifications in Google Cloud Platform or relevant machine learning frameworks.

About the Role:

We are seeking a talented Data Engineer to join our team and play a pivotal role in transforming raw data into valuable insights. As a Data Engineer, you will design, develop, and maintain robust data pipelines and infrastructure to support our organization's analytics and decision-making processes.

Responsibilities:

- Data Pipeline Development: Build and maintain scalable data pipelines to extract, transform, and load (ETL) data from various sources (e.g., databases, APIs, files) into data warehouses or data lakes.

- Data Infrastructure: Design, implement, and manage data infrastructure components, including data warehouses, data lakes, and data marts.

- Data Quality: Ensure data quality by implementing data validation, cleansing, and standardization processes.

- Team Management: Lead and manage a team.

- Performance Optimization: Optimize data pipelines and infrastructure for performance and efficiency.

- Collaboration: Collaborate with data analysts, scientists, and business stakeholders to understand their data needs and translate them into technical requirements.

- Tool and Technology Selection: Evaluate and select appropriate data engineering tools and technologies (e.g., SQL, Python, Spark, Hadoop, cloud platforms).

- Documentation: Create and maintain clear and comprehensive documentation for data pipelines, infrastructure, and processes.

Skills:

- Strong proficiency in SQL and at least one programming language (e.g., Python, Java).

- Experience with data warehousing and data lake technologies (e.g., Snowflake, AWS Redshift, Databricks).

- Knowledge of cloud platforms (e.g., AWS, GCP, Azure) and cloud-based data services.

- Understanding of data modeling and data architecture concepts.

- Experience with ETL/ELT tools and frameworks.

- Excellent problem-solving and analytical skills.

- Ability to work independently and as part of a team.

Preferred Qualifications:

- Experience with real-time data processing and streaming technologies (e.g., Kafka, Flink).

- Knowledge of machine learning and artificial intelligence concepts.

- Experience with data visualization tools (e.g., Tableau, Power BI).

- Certification in cloud platforms or data engineering.

We are seeking a skilled Magic xpa Developer with strong proficiency in .NET technologies to design, develop, and maintain enterprise applications. The ideal candidate will have hands-on experience with Magic xpa (formerly uniPaaS / eDeveloper), integration with .NET components, and solid knowledge of database systems and web services.

Key Responsibilities

- Develop, enhance, and maintain business applications using the Magic xpa Application Platform.

- Integrate Magic xpa applications with .NET modules, APIs, and external systems.

- Collaborate with cross-functional teams to understand business requirements and translate them into technical specifications.

- Design and implement data integration, business logic, and UI functionalities within Magic xpa and .NET frameworks.

- Debug, troubleshoot, and optimize application performance.

- Participate in code reviews, unit testing, and deployment activities.

- Work with SQL Server or other RDBMS for data modeling, stored procedures, and performance tuning.

- Ensure adherence to coding standards, security practices, and documentation requirements.

Required Skills & Experience

- 5+ years of experience in Magic xpa (version 3.x or 4.x) development.

- Strong proficiency in C#, .NET Framework / .NET Core, and Visual Studio.

- Experience integrating Magic xpa applications with REST/SOAP APIs.

- Hands-on experience with SQL Server / Oracle, including complex queries and stored procedures.

- Good understanding of the software development lifecycle (SDLC), agile methodologies, and version control tools (Git, TFS).

- Ability to troubleshoot runtime and build-time issues in Magic xpa and .NET environments.

- Excellent analytical, problem-solving, and communication skills.

Nice to Have

- Experience with Magic xpi (integration platform).

- Knowledge of web technologies (ASP.NET, HTML5, JavaScript).

- Exposure to cloud environments (AWS / Azure).

- Understanding of enterprise system integration and microservices architecture.

Similar companies

About the company

Juntrax Solutions is a young company with a collaborative work culture, on a mission to bring efficient solutions to SMEs. We released the first version of our product in 2019, and it now has over 300 daily users. Our current focus is release 2 of the product, with a redesign and a new tech stack. By joining us, you will be part of a great team building an integrated platform that helps SMEs manage their daily business globally.

About the company

We are a fast-growing virtual and hybrid events and engagement platform. Gevme has already powered hundreds of thousands of events around the world for clients such as Facebook, Netflix, Starbucks, Forbes, MasterCard, Citibank, Google, and the Singapore Government.

We are a SaaS product company with a strong engineering and family culture; we are always looking for new ways to enhance the event experience and enable efficient event management. We’re on a mission to groom the next generation of event technology thought leaders as we grow.

Join us if you want to become part of a vibrant and fast moving product company that's on a mission to connect people around the world through events.

About the company

A deep-tech startup focusing on autonomy and intelligence for unmanned systems, working in guidance and navigation, AI/ML, computer vision, information fusion, LLMs, generative AI, and remote sensing.

About the company

Peak Hire Solutions is a leading recruitment firm that provides clients with innovative IT and non-IT recruitment solutions. We pride ourselves on our creativity, quality, and professionalism. Join our team and be part of shaping the future of recruitment.

About the company

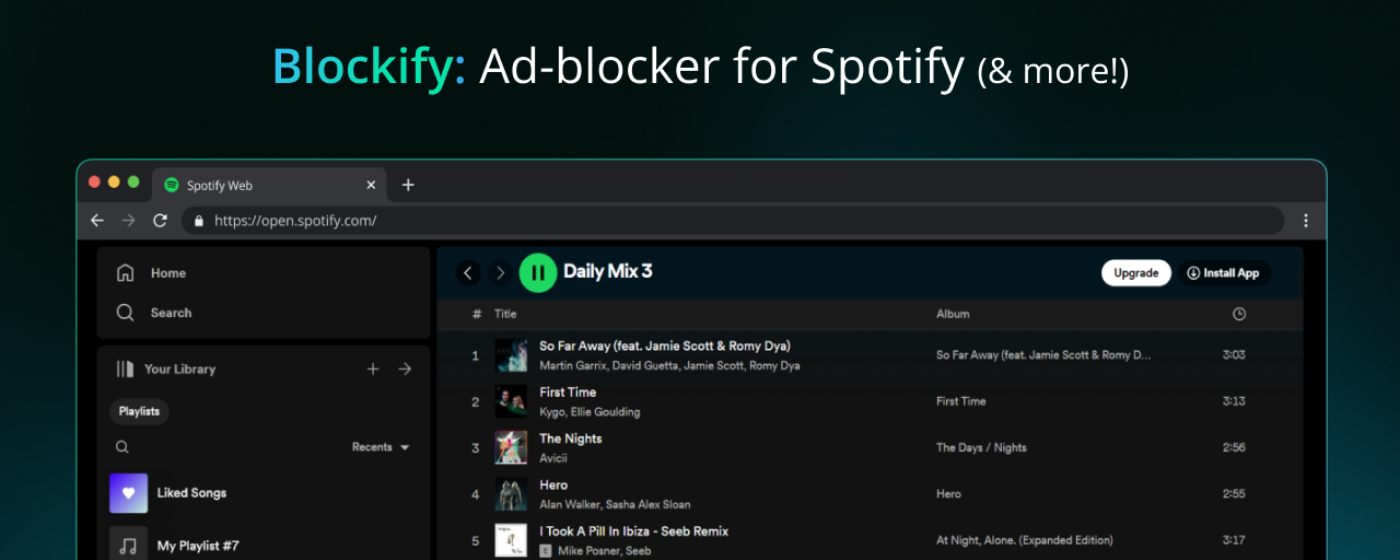

Building the most advanced ad blocker on the planet!🌎

Loved by 3,50,000+ users on Chrome!
