Deqode

https://deqode.com

Jobs at Deqode

- Strong experience in Azure – mainly Azure ML Studio, AKS, Blob Storage, ADF, ADO Pipelines.

- Experience registering and deploying ML/AI/GenAI models via Azure ML Studio.

- Working knowledge of deploying models in AKS clusters.

- Design and implement data processing, training, inference, and monitoring pipelines using Azure ML.

- Excellent Python skills – environment setup and dependency management, coding as per best practices, and knowledge of automated code-quality tools such as linters and Black.

- Experience with MLflow for model experiments, logging artifacts and models, and monitoring.

- Experience in orchestrating machine learning pipelines using MLOps best practices.

- Experience in DevOps with CI/CD knowledge (Git in Azure DevOps).

- Experience in model monitoring (drift detection and performance monitoring).

- Fundamentals of data engineering.

- Docker-based deployment is good to have.

Role Summary

We are looking for a seasoned Full Stack Developer with strong backend expertise in PHP (Laravel/Symfony) and modern frontend experience using React (Vue.js or Angular exposure is a plus). The ideal candidate should possess a full-stack mindset with experience in building scalable REST APIs and interactive UI applications.

Key Responsibilities

- End-to-end feature development (Backend + Frontend)

- Design and develop scalable, secure, and maintainable applications

- Build and optimize REST APIs and reusable UI components

- Participate in technical design discussions and code reviews

- Mentor junior developers and ensure coding best practices

- Collaborate with QA, DevOps, and Architects

- Focus on performance tuning and security enhancements

Must-Have Skills

- Strong hands-on experience in PHP (Laravel/Symfony)

- Experience building and consuming REST APIs

- Expertise in React.js (Vue.js/Angular acceptable)

- Strong knowledge of JavaScript/TypeScript

- Solid understanding of HTML5, CSS3

- Experience with scalable and maintainable application architecture

Nice-to-Have Skills

- Full-stack exposure with Node.js

- Experience with MongoDB / NoSQL databases

- Familiarity with Docker, CI/CD pipelines, Cloud platforms

- Knowledge of HCM and Payroll domain

Overview

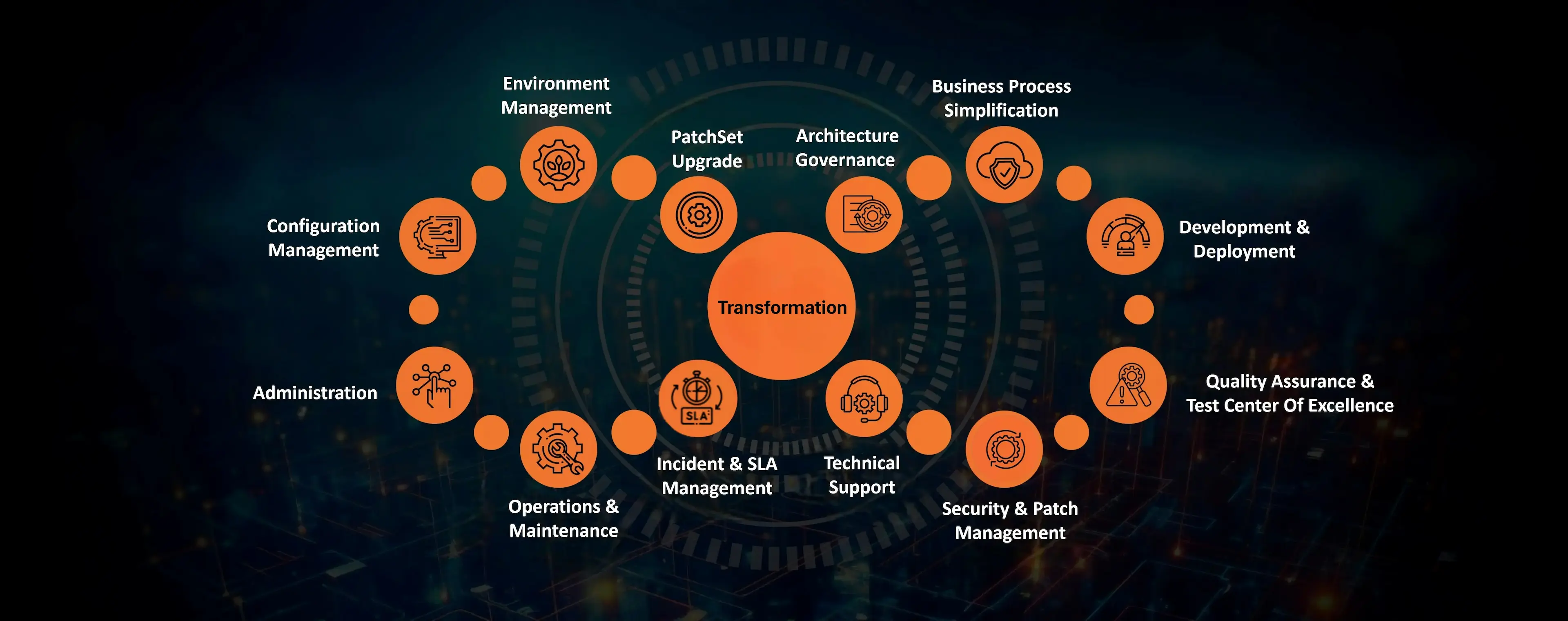

We seek a detail-oriented, collaborative Technical Enablement Lead to drive the implementation of enterprise systems and associated integrations, specifically with ERP (Enterprise Resource Planning) platforms and WMS (Warehouse Management Systems). This role is ideal for someone who thrives in cross-functional environments and excels at translating operational needs into scalable technical solutions.

Key Responsibilities

Cross-Functional Technical Integration

- Drive alignment across engineering, data, and warehouse operations teams

- Work with the teams to help translate business requirements into actionable integration tasks and system configurations

Issue Resolution & Continuous Improvement

- Identify and mitigate risks early, removing barriers to keep the team on track

- Work with the technical teams who monitor system performance and data flow integrity

- Lead the teams in root cause analysis and implement iterative enhancements to integration reliability

ETL Process Oversight

- Collaborate with the partner who leads the design and validation of ETL pipelines for syncing inventory, orders, and shipment data

- Ensure teams maintain data accuracy, schema compatibility, and robust error handling across platforms

Systems Mapping & Architecture

- Partner with brand and vendor IT teams to design and document integration pathways between ERP and WMS systems

- Work with teams to identify data dependencies, transformation logic, and system constraints

- Confirm alignment with operations leads on workflows and priorities

Access Control Oversight

- Partner with teams to define and coordinate role-based access controls across integrated systems

- Collaborate with infrastructure and security teams to maintain compliance and operational integrity

Qualifications:

- 3-5+ years of experience

- Strong understanding of ERP and WMS platforms and their integration patterns

- Experience working with teams that implement integration tools, e.g., ETL, data mapping

- Familiarity with system roles and access controls

- Excellent communication and coordination skills across technical and operational teams

- Ability to document and communicate systems in a clear, structured manner

Nice to Have:

- ERP: NetSuite or JDE

- WMS: Blue Yonder, Deposco

- Any project methodology certifications: Agile, Waterfall, etc.

Job Title: Data Engineer – GCP (Fullstack)

Location: Remote (Chennai Preferred)

Shift: Day Shift

Experience: 4+ Years

Role Overview

We are seeking a skilled Data Engineer / Platform Engineer to drive value delivery within cross-functional squads by leveraging strong technical expertise. The role involves designing, building, and supporting scalable data and application solutions using GCP, Databricks, Apache Spark, and cloud-native services, while following Agile and engineering best practices.

Key Responsibilities

- Design, build, and maintain backend services and APIs using C#, deployed on GCP Cloud Run.

- Develop and support scalable data and application solutions using Databricks.

- Implement and manage data governance, security, and lineage using Unity Catalog.

- Utilize Apache Spark for large-scale data processing and performance optimization.

- Build, optimize, and maintain robust data pipelines and transformations.

- Work closely with cross-functional teams in Agile squads for solution delivery.

- Implement CI/CD pipelines (preferably using Azure DevOps).

- Manage Infrastructure as Code (IaC) using Terraform on GCP.

- Work with Firestore (NoSQL) and relational databases like PostgreSQL/MySQL.

- Perform debugging, troubleshooting, and performance tuning of applications and data workloads.

Required Skills & Expertise

- 4+ years of experience in Data Engineering / Platform Engineering.

- Strong hands-on experience with Databricks and Apache Spark.

- Experience with Unity Catalog for governance and access control.

- Strong knowledge of GCP services, especially Cloud Run.

- Proficiency in building REST APIs using C#.

- Experience with CI/CD pipelines (Azure DevOps preferred).

- Experience with Terraform (IaC on GCP).

- Hands-on experience with Firestore and relational databases.

- Strong analytical, problem-solving, and debugging skills.

- Experience working in Agile environments.

Job Description -

Profile: AI/ML

Experience: 4-8 Years

Mode: Remote

Mandatory Skills - AI/ML, LLM, RAG, Agentic AI, Traditional ML, GCP

Must-Have:

● Proven experience as an AI/ML engineer, specifically with a focus on Generative AI and Large Language Models (LLMs) in production.

● Deep expertise in building Agentic Workflows using frameworks like LangChain, LangGraph, or AutoGen.

● Strong proficiency in designing RAG (Retrieval-Augmented Generation)

● Experience with Function Calling/Tool Use in LLMs to connect AI models with external APIs (REST/gRPC) for transactional tasks

● Hands-on experience with Google Cloud Platform (GCP), specifically Vertex AI, Model Garden, and deploying models on GPUs

● Proficiency in Python and deep learning frameworks (PyTorch or TensorFlow).

Job Summary

We are seeking an experienced Java Full Stack Developer with 8+ years of hands-on experience in designing, developing, and maintaining scalable web applications. The ideal candidate should have strong expertise in Java (Spring Boot) on the backend and React.js on the frontend, along with experience in REST APIs, Microservices architecture, and cloud-based deployments.

Key Responsibilities

- Design, develop, and maintain scalable full-stack applications using Java, Spring Boot, and React.js

- Develop RESTful APIs and Microservices-based applications

- Collaborate with cross-functional teams including UI/UX, DevOps, QA, and Product teams

- Write clean, efficient, and reusable code following best practices

- Perform code reviews and mentor junior developers

- Optimize applications for performance and scalability

- Participate in architectural discussions and technical decision-making

- Ensure application security, data protection, and compliance standards

- Troubleshoot, debug, and upgrade existing systems

Required Skills:

- 7+ years of experience in Full Stack development

- Strong hands-on expertise in Java (8/11/17)

- Proficiency in Spring Boot, Spring MVC, Spring Security

- Experience in Microservices architecture and RESTful API development

- Strong knowledge of React.js, JavaScript (ES6+), HTML5, CSS3

- Experience with state management tools (Redux/Context API)

- Hands-on experience with Hibernate/JPA

- Good understanding of SQL databases (MySQL/PostgreSQL/Oracle)

- Experience with Git, Maven/Gradle, and CI/CD pipelines

- Working knowledge of Docker/Kubernetes

- Exposure to Cloud platforms (AWS/Azure/GCP) preferred

- Strong problem-solving and analytical skills

Job Description -

Profile: Senior ML Lead

Experience Required: 10+ Years

Work Mode: Remote

Key Responsibilities:

- Design end-to-end AI/ML architectures including data ingestion, model development, training, deployment, and monitoring

- Evaluate and select appropriate ML algorithms, frameworks, and cloud platforms (Azure, Snowflake)

- Guide teams in model operationalization (MLOps), versioning, and retraining pipelines

- Ensure AI/ML solutions align with business goals, performance, and compliance requirements

- Collaborate with cross-functional teams on data strategy, governance, and AI adoption roadmap

Required Skills:

- Strong expertise in ML algorithms, Linear Regression, and modeling fundamentals

- Proficiency in Python with ML libraries and frameworks

- MLOps: CI/CD/CT pipelines for ML deployment with Azure

- Experience with OpenAI/Generative AI solutions

- Cloud-native services: Azure ML, Snowflake

- 8+ years in data science with at least 2 years in a solution architecture role

- Experience with large-scale model deployment and performance tuning

Good-to-Have:

- Strong background in Computer Science or Data Science

- Azure certifications

- Experience in data governance and compliance

🚀 Hiring: Data Engineer (Azure)

⭐ Experience: 5+ Years

📍 Location: Pune, Bhopal, Jaipur, Gurgaon, Delhi, Bangalore

⭐ Work Mode: Hybrid

⏱️ Notice Period: Immediate Joiners

(Only immediate joiners & candidates serving notice period)

Hiring: Databricks Data Engineer – Lakeflow | Streaming | DBSQL | Data Intelligence

We are looking for a Databricks Data Engineer to build reliable, scalable, and governed data pipelines powering analytics, operational reporting, and the Data Intelligence Layer.

🔹 Key Responsibilities

- Build optimized batch pipelines using Delta Lake (partitioning, OPTIMIZE, Z-ORDER, VACUUM)

- Implement incremental ingestion using Databricks Autoloader with schema evolution & checkpointing

- Develop Structured Streaming pipelines with watermarking, late data handling & restart safety

- Implement declarative pipelines using Lakeflow

- Design idempotent, replayable pipelines with safe backfills

- Optimize Spark workloads (AQE, skew handling, shuffle & join tuning)

- Build curated datasets for Databricks SQL (DBSQL), dashboards & downstream applications

- Package and deploy using Databricks Repos & Asset Bundles (CI/CD)

- Ensure governance using Unity Catalog and embedded data quality checks

✅ Mandatory Skills (Must Have)

- Databricks & Delta Lake (Advanced Optimization & Performance Tuning)

- Structured Streaming & Autoloader Implementation

- Databricks SQL (DBSQL) & Data Modeling for Analytics

Job Summary

We are seeking an experienced Java Drools Developer to design, develop, and maintain rule-based applications using Drools. The ideal candidate will have strong backend development skills and hands-on experience with business rule management systems in enterprise environments.

Key Responsibilities

- Design, develop, and maintain business rules using Drools (BRMS)

- Create and manage Drools Rule Files (DRL), decision tables, and rule flows

- Integrate Drools with Java / Spring Boot applications

- Optimize rule execution, performance, and scalability

- Develop and consume RESTful APIs

- Collaborate with business analysts to translate requirements into rules

- Participate in code reviews and ensure best practices

- Support testing, debugging, and production issues

Required Skills

- Strong hands-on experience in Java

- Solid experience with Drools Rule Engine / BRMS

- Experience with Spring / Spring Boot and microservices architecture

- Knowledge of REST APIs and backend integrations

- Understanding of rule lifecycle management and versioning

- Good problem-solving and analytical skills

Job Description -

Profile: .Net Full Stack Lead

Experience Required: 7–12 Years

Location: Pune, Bangalore, Chennai, Coimbatore, Delhi, Hosur, Hyderabad, Kochi, Kolkata, Trivandrum

Work Mode: Hybrid

Shift: Normal Shift

Key Responsibilities:

- Design, develop, and deploy scalable microservices using .NET Core and C#

- Build and maintain serverless applications using AWS services (Lambda, SQS, SNS)

- Develop RESTful APIs and integrate them with front-end applications

- Work with both SQL and NoSQL databases to optimize data storage and retrieval

- Implement Entity Framework for efficient database operations and ORM

- Lead technical discussions and provide architectural guidance to the team

- Write clean, maintainable, and testable code following best practices

- Collaborate with cross-functional teams to deliver high-quality solutions

- Participate in code reviews and mentor junior developers

- Troubleshoot and resolve production issues in a timely manner

Required Skills & Qualifications:

- 7–12 years of hands-on experience in .NET development

- Strong proficiency in .NET Framework, .NET Core, and C#

- Proven expertise with AWS services (Lambda, SQS, SNS)

- Solid understanding of SQL and NoSQL databases (SQL Server, MongoDB, DynamoDB, etc.)

- Experience building and deploying Microservices architecture

- Proficiency in Entity Framework or EF Core

- Strong knowledge of RESTful API design and development

- Experience with React or Angular is good to have

- Understanding of CI/CD pipelines and DevOps practices

- Strong debugging, performance optimization, and problem-solving skills

- Experience with design patterns, SOLID principles, and best coding practices

- Excellent communication and team leadership skills

Similar companies

About the company

About Us

HighLevel is an AI-powered, all-in-one white-label sales & marketing platform that empowers agencies, entrepreneurs, and businesses to elevate their digital presence and drive growth. We are proud to support a global and growing community of over 2 million businesses, comprising agencies, consultants, and businesses of all sizes and industries. HighLevel empowers users with all the tools needed to capture, nurture, and close new leads into repeat customers. As of mid-2025, HighLevel processes over 15 billion API hits and handles more than 2.5 billion message events every day. Our platform manages over 470 terabytes of data distributed across five databases, operates with a network of over 250 microservices, and supports over 1 million domain names.

Our People

With over 1,500 team members across 15+ countries, we operate in a global, remote-first environment. We are building more than software; we are building a global community rooted in creativity, collaboration, and impact. We take pride in cultivating a culture where innovation thrives, ideas are celebrated, and people come first, no matter where they call home.

Our Impact

As of mid-2025, our platform powers over 1.5 billion messages, helps generate over 200 million leads, and facilitates over 20 million conversations for the more than 2 million businesses we serve each month. Behind those numbers are real people growing their companies, connecting with customers, and making their mark, and we get to help make that happen.

EEO Statement:

At HighLevel, we value diversity. In fact, we understand it makes our organisation stronger. We are committed to inclusive hiring/promotion practices that evaluate skill sets, abilities, and qualifications without regard to any characteristic unrelated to performing the job at the highest level. Our objective is to foster an environment where really talented employees from all walks of life can be their true and whole selves, cherished and welcomed for their differences while providing excellent service to our clients and learning from one another along the way! Reasonable accommodations may be made to enable individuals with disabilities to perform essential functions.

Jobs

9

About the company

We build network solutions for the emerging Next Generation Central Office (NGCO) market.

We have re-applied design patterns from the hyper-scale world to Service Provider and cloud networks in order to implement new features in operational networks faster.

Our parallel modular architecture gives customers the programmability, performance, and scale to alter CAPEX and OPEX.

Jobs

4

About the company

Zethic is a creative development hub for building scalable and business-ready web and mobile applications.

Jobs

1

About the company

eShipz: Simplifying Global Shipping for Businesses. At eShipz, we are revolutionizing how businesses manage their shipping processes. Our platform is designed to offer seamless multi-carrier integration, enabling businesses of all sizes to ship effortlessly across the globe. Whether you're an e-commerce brand, a manufacturer, or a logistics provider, eShipz helps streamline your supply chain with real-time tracking, automated shipping labels, cost-effective shipping rates, and comprehensive reporting.

Our goal is to empower businesses by simplifying logistics, reducing shipping costs, and improving operational efficiency. With an easy-to-use dashboard and a dedicated support team, eShipz ensures that you focus on scaling your business while we handle your shipping needs.

Jobs

16

About the company

Quantiphi is an award-winning AI-first digital engineering company driven by the desire to reimagine and realize transformational opportunities at the heart of the business. Since its inception in 2013, Quantiphi has solved the toughest and most complex business problems by combining deep industry experience, disciplined cloud, and data-engineering practices, and cutting-edge artificial intelligence research to achieve accelerated and quantifiable business results.

Jobs

5

About the company

Celcom Solutions is a global technology services firm specialising in the telecom and BFSI sectors. Founded in the UK in 2010, the company has expanded into India (notably Chennai & Bengaluru) and supports clients across APAC, MENA, Europe, and the UK.

Their core offerings span:

- Greenfield implementations, transformations and managed services for OSS/BSS environments.

- Digital‐transformation, testing, data & analytics services that help telcos and enterprises upgrade to newer models.

- A culture built around subject-matter expertise, global delivery capability and domain experience — making them a solid employer for professionals who want meaningful telecom/IT work.

With a committed global team of service-delivery professionals and consultants, Celcom Solutions offers the opportunity to work on large-scale, complex projects in the telecom / tech space — which makes it an interesting destination if you’re recruiting talent who want scale + domain depth.

Jobs

4

About the company

Albert Invent is a cutting-edge R&D software company that’s built by scientists, for scientists. Their cloud-based platform unifies lab data, digitises workflows and uses AI/ML to help materials and chemical R&D teams invent faster and smarter. With thousands of researchers in 30+ countries already using the platform, Albert Invent is helping transform how chemistry-led companies go from idea to product.

What sets them apart: built specifically for chemistry and materials science (not generic SaaS), with deep integrations (ELN, LIMS, AI/ML) and enterprise-grade security and compliance.

Jobs

3