DataMetica

https://datametica.com

Jobs at DataMetica

The recruiter has not been active on this job recently. You may apply but please expect a delayed response.

Datametica is looking for talented BigQuery engineers

Total Experience - 2+ yrs.

Notice Period – 0 - 30 days

Work Location – Pune, Hyderabad

Job Description:

- Sound understanding of Google Cloud Platform; should have worked on BigQuery, Workflows, or Cloud Composer

- Experience in GCP migration and integration projects in large-scale environments, covering ETL technical design, development, and support

- Good SQL and Unix scripting skills; programming experience with Python, Java, or Spark would be desirable.

- Experience in SOA and services-based data solutions would be advantageous

About the Company:

www.datametica.com

Datametica is one of the world's leading Cloud and Big Data analytics companies.

Datametica was founded in 2013 and has grown rapidly over a short span of 8 years. We provide a broad set of services, driven by innovation and value addition, that help organizations make strategic decisions that influence business growth.

Datametica is the global leader in migrating legacy data warehouses to the cloud. Datametica moves data warehouses to the cloud faster, at a lower cost, and with fewer errors, and can even run in parallel with full data validation for months.

Datametica's specialized team of Data Scientists has implemented award-winning analytical models for use cases involving both unstructured and structured data.

Datametica has earned the highest level of partnership with Google, AWS, and Microsoft, which enables Datametica to deliver successful projects for clients across industry verticals at a global level, with teams deployed in the USA, EU, and APAC.

Recognition:

We are gratified to be recognized as a Top 10 Big Data Global Company by CIO story.

If this excites you, please apply.

Experience: 2-6 Years

Datametica is looking for talented Java engineers who will receive training and the opportunity to work on Cloud and Big Data Analytics.

Job Responsibilities:

- 2-6 years of hands-on coding experience, usually in a pair-programming environment, providing solutions to real problems in the Big Data world

- Working in highly collaborative teams and building quality code

- Working across many different domains and client environments while developing a deep understanding of the business domain

- Engineer highly scalable, highly available, reliable, secure, and fault-tolerant systems with minimal guidance

- Create platforms, reusable libraries, and utilities wherever applicable

- Continuously refactor applications to ensure high-quality design

- Choose the right technology stack for the product systems/subsystems

- Write high-quality code that is modular, functional, and testable; establish best coding practices

- Formally mentor junior engineers on design, coding, and troubleshooting

- Plan projects using agile methodologies and ensure timely delivery

- Communicate, collaborate and work effectively in a global environment

Required Skills:

- Proficient in Core Java technology stack

- Design and implement low-latency RESTful services; define API contracts between services; expertise in API design and development, and experience dealing with large datasets

- Should have worked on Spring Boot

- Practicing coding standards (clean code, design patterns, etc.)

- Good understanding of branching, build, deployment, and continuous integration methodologies

- Ability to make decisions independently

- Strong experience with Java collections.

As Director of Engineering, your roles and responsibilities will include the following:

- Define the product roadmap and delivery planning.

- Provide technical leadership in the design, delivery, and support of product software and platforms.

- Participate in driving technical architectural decisions for the product.

- Prioritize features and deliverable artifacts.

- Augment the product development staff.

- Mentor managers to implement best practices to motivate and organize their teams.

- Prepare schedules, report status, and make hiring decisions.

- Evaluate and improve software development best practices.

- Establish DevOps and other processes to ensure consistency, quality, and timeliness.

- Participate in interviews and final hiring decisions.

- Guide and provide input to all strategic and technical planning across all products.

- Monitor, evaluate, and prioritize change requests.

- Create and monitor the set of policies that establish standard development languages, tools, and methodology; documentation practices; and examination procedures for developed systems to ensure alignment with overall architecture.

- Participate in project scope, schedule, and cost reviews.

- Understand and socialize product capabilities and limitations.

- Identify and implement ways to improve and promote quality and demonstrate accuracy and thoroughness.

- Establish working relationships with external technology vendors.

- Integrate customer requirements into the engineering effort to champion next-generation products.

- Quickly gain an understanding of the company's technology and markets, and establish yourself as a credible leader.

- Manage release scheduling.

- Keep abreast of new technologies, with demonstrated knowledge and experience across various technologies.

- Manage 3rd party consulting partners/vendors implementing products.

- Prepare and submit weekly project status reports; prepare monthly reports outlining team assignments and/or changes and project status changes, and forecast project timelines.

- Provide leadership to individuals or team(s) through coaching, feedback, development goals, and performance management.

- Prioritize employee career development to grow the internal pipeline of leadership talent.

- Prioritize, assign, and manage department activities and projects in accordance with the department's goals and objectives. Adjust hours of work, priorities, and staff assignments to ensure efficient operation, based on workload.

Qualifications & Experience

- Master’s or bachelor’s degree in Computer Science, Business Information Systems, or a related field, or equivalent work experience, required.

- Relevant certifications, or other evidence that you value continuing education, are preferred.

- 15+ years’ experience "living" with various operating systems, development tools and development methodologies, including Java, data structures, Scala, Python, and Node.js

- 8+ years of individual contributor software development experience.

- 6+ years of management experience in a fast-growing product software environment with proven ability to lead and engage development, QA and implementation teams working on multiple projects.

- Idea generation and creativity are a must in this position, as is the ability to work to deadlines and to manage and complete projects on time and within budget.

- Proven ability to establish and drive processes and procedures with quantifiable metrics to measure the success and effectiveness of the development organization.

- Proven history of delivering on deadlines/releases without compromising quality.

- Mastery of engineering concepts and core technologies: development models, programming languages, databases, testing, and documentation.

- Development experience with compilers, web services, database engines and related technologies.

- Experience with Agile software development and Scrum methodologies.

- Proven track record of delivering high quality software products.

- A solid engineering foundation, indicated by a demonstrated understanding of product design, life cycle, software development practices, and support services. Understanding of standard engineering processes and software development methodologies.

- Experience coordinating the work and competences of software staff within functional project groups.

- Ability to work cross-functionally and as a team with other executive committee members.

- Strong verbal and written communication skills.

- Communicate effectively with different business units about technology and processes using lay terms and descriptions.

Experience Preferred:

- Experience building horizontally scalable solutions leveraging containers, microservices, Big Data technologies among other related technologies.

- Experience working with graphical user experience and user interface design.

- Experience working with object-oriented software development, web services, web development or other similar technical products.

- Experience with database engines, languages, and compilers

- Experience with user acceptance testing, regression testing and integration testing.

- Experience working on open-source software projects for the Apache Software Foundation and other open-source software organizations.

- Demonstrable experience training and leading teams as a great people leader.

Opportunity for Unix Developers!

We at Datametica are looking for talented Unix engineers who will receive training and the opportunity to work on Google Cloud Platform, DWH and Big Data.

Experience - 2 to 7 years

Job location - Pune

Mandatory Skills:

Strong experience in Unix with Shell Scripting development.

What opportunities do we offer?

- Selected candidates will be provided training opportunities in one or more of the following: Google Cloud, AWS, DevOps Tools and Big Data technologies like Hadoop, Pig, Hive, Spark, Sqoop, Flume and Kafka

- You will get the chance to be part of the enterprise-grade implementation of Cloud and Big Data systems

- You will play an active role in setting up a modern data platform based on Cloud and Big Data

- You would be a part of teams with rich experience in various aspects of distributed systems and computing.

We at Datametica Solutions Private Limited are looking for an SQL Lead / Architect who has a passion for the cloud and knowledge of different on-premises and cloud data implementations in the field of Big Data and Analytics, including but not limited to Teradata, Netezza, Exadata, Oracle, Cloudera, Hortonworks and similar platforms.

Ideal candidates should have technical experience in migrations and the ability to help customers get value from Datametica's tools and accelerators.

Job Description:

Experience: 6+ Years

Work Location: Pune / Hyderabad

Technical Skills:

- Good programming experience as an Oracle PL/SQL, MySQL, and SQL Server Developer

- Knowledge of database performance tuning techniques

- Rich experience in database development

- Experience designing and implementing business applications using the Oracle Relational Database Management System

- Experience in developing complex database objects like Stored Procedures, Functions, Packages and Triggers using SQL and PL/SQL

Required Candidate Profile:

- Excellent communication, interpersonal, analytical skills and strong ability to drive teams

- Analyze data requirements and data dictionaries for moderate to complex projects

- Lead data-model analysis discussions while collaborating with Application Development teams, Business Analysts, and Data Analysts during joint requirements analysis sessions

- Translate business requirements into technical specifications with an emphasis on highly available and scalable global solutions

- Stakeholder management and client engagement skills

- Strong communication skills (written and verbal)

About Us!

A global leader in the Data Warehouse Migration and Modernization to the Cloud, we empower businesses by migrating their Data/Workload/ETL/Analytics to the Cloud by leveraging Automation.

We have expertise in transforming legacy Teradata, Oracle, Hadoop, Netezza, Vertica, and Greenplum systems, along with ETLs like Informatica, DataStage, Ab Initio and others, to cloud-based data warehousing, with other capabilities in data engineering, advanced analytics solutions, data management, data lake and cloud optimization.

Datametica is a key partner of the major cloud service providers - Google, Microsoft, Amazon, Snowflake.

We have our own products!

Eagle – Data Warehouse Assessment & Migration Planning Product

Raven – Automated Workload Conversion Product

Pelican – Automated Data Validation Product, which helps automate and accelerate data migration to the cloud.

Why join us!

Datametica is a place to innovate, bring new ideas to life, and learn new things. We believe in building a culture of innovation, growth, and belonging. Our people and their dedication over these years are the key factors in achieving our success.

Benefits we Provide!

Working with highly technical, passionate, mission-driven people

Subsidized Meals & Snacks

Flexible Schedule

Approachable leadership

Access to various learning tools and programs

Pet Friendly

Certification Reimbursement Policy

Check out more about us on our website below!

www.datametica.com

- Must have 4 to 7 years of experience in ETL Design and Development using Informatica Components.

- Should have extensive knowledge of Unix shell scripting.

- Understanding of DW principles (Fact, Dimension tables, Dimensional Modelling and Data warehousing concepts).

- Research, develop, document, and modify ETL processes as per data architecture and modeling requirements.

- Ensure appropriate documentation for all new development and modifications of the ETL processes and jobs.

- Should be good at writing complex SQL queries.

- Selected candidates will be provided training opportunities in one or more of the following: Google Cloud, AWS, DevOps Tools, Big Data technologies like Hadoop, Pig, Hive, Spark, Sqoop, Flume and Kafka

- Would get the chance to be part of the enterprise-grade implementation of Cloud and Big Data systems

- Will play an active role in setting up a modern data platform based on Cloud and Big Data

- Would be part of teams with rich experience in various aspects of distributed systems and computing.

We at Datametica Solutions Private Limited are looking for SQL Engineers who have a passion for the cloud and knowledge of different on-premises and cloud data implementations in the field of Big Data and Analytics, including but not limited to Teradata, Netezza, Exadata, Oracle, Cloudera, Hortonworks and similar platforms.

Ideal candidates should have technical experience in migrations and the ability to help customers get value from Datametica's tools and accelerators.

Job Description

Experience: 4-10 years

Location: Pune

Mandatory Skills -

- Strong in ETL/SQL development

- Strong Data Warehousing skills

- Hands-on experience working with Unix/Linux

- Development experience in Enterprise Data warehouse projects

- Good to have: experience working with Python and shell scripting

Opportunities -

- Selected candidates will be provided training opportunities on one or more of the following: Google Cloud, AWS, DevOps Tools, Big Data technologies like Hadoop, Pig, Hive, Spark, Sqoop, Flume and Kafka

- Would get the chance to be part of the enterprise-grade implementation of Cloud and Big Data systems

- Will play an active role in setting up a modern data platform based on Cloud and Big Data

- Would be part of teams with rich experience in various aspects of distributed systems and computing

Experience: 6+ years

Location: Pune

Lead/support implementation of core cloud components and document critical design and configuration details to support enterprise cloud initiatives. The Cloud Infrastructure Lead will be primarily responsible for using technical skills to coordinate enhancements and deployment efforts and to provide insight and recommendations for implementing client solutions. Leads will work closely with customers, cloud architects, other cloud teams, and other functions.

Job Requirements:

- Experience in Cloud Foundation setup, from the organization hierarchy down to individual services

- Experience in Cloud Virtual Network, VPN Gateways, Tunneling, Cloud Load Balancing, Cloud Interconnect, Cloud DNS

- Experience working with scalable networking technologies such as Load Balancers/Firewalls and web standards (REST APIs, web security mechanisms)

- Experience working with Identity and access management (MFA, SSO, AD Connect, App Registrations, Service Principals)

- Familiarity with standard IT security practices such as encryption, certificates and key management.

- Experience deploying and maintaining applications on Kubernetes

- Must have worked on one or more configuration tools such as Terraform, Ansible, PowerShell DSC

- Experience with cloud storage services, including MS SQL DB, Tables, Files, etc.

- Experience with cloud monitoring and alerting mechanisms

- Well versed with Governance and Cloud best practices for Security and Cost optimization

- Experience in one or more of the following: Shell scripting, PowerShell, Python or Ruby.

- Experience with Unix/Linux operating systems internals and administration (e.g., filesystems, system calls) or networking (e.g., TCP/IP, routing, network topologies and hardware, SDN)

- Should have strong knowledge of Cloud billing and understand costing of different cloud services

- Prior professional experience in IT Strategy, IT Business Management, Cloud & Infrastructure, or Systems Engineering

Preferred

- Compute: Infrastructure, Platform Sizing, Consolidation, Tiered and Virtualized Storage, Automated Provisioning, Rationalization, Infrastructure Cost Reduction, Thin Provisioning

- Experience with Operating systems and Software

- Sound background with Networking and Security

- Experience with Open Source: Sizing and Performance Analyses, Selection and Implementation, Platform Design and Selection

- Experience with Infrastructure-Based Processes: Monitoring, Capacity Planning, Facilities Management, Performance Tuning, Asset Management, Disaster Recovery, Data Center support

Datametica is hiring a DataStage Developer

- Must have 3 to 8 years of experience in ETL Design and Development using IBM DataStage Components.

- Should have extensive knowledge of Unix shell scripting.

- Understanding of DW principles (Fact, Dimension tables, Dimensional Modelling and Data warehousing concepts).

- Research, develop, document, and modify ETL processes as per data architecture and modeling requirements.

- Ensure appropriate documentation for all new development and modifications of the ETL processes and jobs.

- Should be good at writing complex SQL queries.

Job description

Role: Lead Architect (Spark, Scala, Big Data/Hadoop, Java)

Primary Location: Pune, Hyderabad (India)

Experience: 7-12 Years

Management Level: 7

Joining Time: Immediate joiners are preferred

- Attend requirements gathering workshops, estimation discussions, design meetings and status review meetings

- Experience in solution design and solution architecture for the data engineering model, to build and implement Big Data projects on-premises and in the cloud.

- Align architecture with business requirements and stabilize the developed solution

- Ability to build prototypes to demonstrate the technical feasibility of your vision

- Professional experience facilitating and leading solution design, architecture and delivery planning activities for data-intensive and high-throughput platforms and applications

- Benchmark systems, analyze system bottlenecks, and propose solutions to eliminate them

- Able to help programmers and project managers in the design, planning and governance of implementing projects of any kind.

- Develop, construct, test and maintain architectures and run Sprints for development and rollout of functionalities

- Data analysis and code development experience, ideally in Big Data (Spark, Hive, Hadoop), Java, Python, and PySpark

- Execute projects of various types, e.g., design, development, implementation, and migration of functional analytics models/business logic across architectural approaches

- Work closely with Business Analysts to understand the core business problems and deliver efficient IT solutions for the product

- Deploy sophisticated analytics programs using any cloud platform.

Perks and Benefits we Provide!

- Working with Highly Technical and Passionate, mission-driven people

- Subsidized Meals & Snacks

- Flexible Schedule

- Approachable leadership

- Access to various learning tools and programs

- Pet Friendly

- Certification Reimbursement Policy

Check out more about us on our website below!

www.datametica.com

Similar companies

About the company

Do you want to deliver code for a Y Combinator-funded startup?

Do you want to build world-class applications used by millions of people?

Do you want to grow along with a fast-growing company?

If YES, Codebrahma is THE place for you!

Codebrahma is a software boutique based out of Ascendas ITPL, Bangalore.

We have been technology partners for some of the most exciting startups in the world which includes 5 Y Combinator funded startups. Most of the companies that have worked with us have gone on to raise major rounds of funding and disrupting their spaces.

Now that you are all excited about what we do.

We are looking for amazing Developers!

Jobs

1

About the company

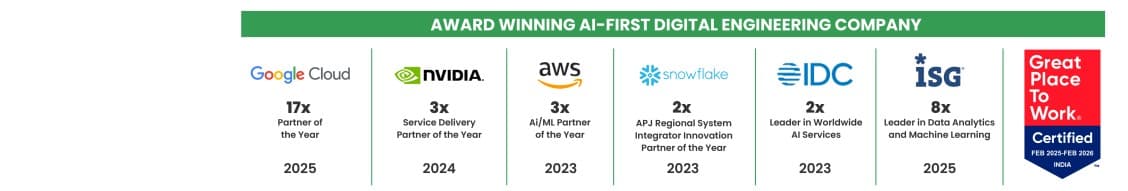

Quantiphi is an award-winning AI-first digital engineering company driven by the desire to reimagine and realize transformational opportunities at the heart of the business. Since its inception in 2013, Quantiphi has solved the toughest and most complex business problems by combining deep industry experience, disciplined cloud, and data-engineering practices, and cutting-edge artificial intelligence research to achieve accelerated and quantifiable business results.

Jobs

5

About the company

Welcome to Neogencode Technologies, an IT services and consulting firm that provides innovative solutions to help businesses achieve their goals. Our team of experienced professionals is committed to providing tailored services to meet the specific needs of each client. Our comprehensive range of services includes software development, web design and development, mobile app development, cloud computing, cybersecurity, digital marketing, and skilled resource acquisition. We specialize in helping our clients find the right skilled resources to meet their unique business needs. At Neogencode Technologies, we prioritize communication and collaboration with our clients, striving to understand their unique challenges and provide customized solutions that exceed their expectations. We value long-term partnerships with our clients and are committed to delivering exceptional service at every stage of the engagement. Whether you are a small business looking to improve your processes or a large enterprise seeking to stay ahead of the competition, Neogencode Technologies has the expertise and experience to help you succeed. Contact us today to learn more about how we can support your business growth and provide skilled resources to meet your business needs.

Jobs

354

About the company

Let's make AI work for your business. Reliable Group specializes in assembling skilled engineering teams proficient in AI, ML, as well as traditional tech stacks to deliver results in your AI-driven initiatives. At Reliable Group, we understand that the success of your AI and ML endeavors hinges on having the right team with the right expertise. With decades of experience in custom software solutions and a deep understanding of AI technologies, we ensure that your AI aspirations become tangible, efficient solutions that meet your deadlines. Why Choose Reliable Group? Expert Engineering Talent: Our carefully curated teams of AI and ML specialists are equipped with the skills and knowledge to tackle complex challenges and deliver exceptional results. Efficiency & Productivity: We assess the timelines and human talent required to achieve your deadlines and keep track along the way. Achieving Results with Confidence: We have a track record of delivering projects exactly as you envisioned them, without compromise. Dynamic Solutions: AI is changing rapidly and what was relevant yesterday may be obsolete today. We have decades of experience in machine learning and AI models to assess and implement dynamic systems for your specific needs. Ready to turn your AI questions into business-proven answers? Connect with us to explore how our team can help you meet your AI goals.

Jobs

2

About the company

Jobs

63

About the company

Jobs

6

About the company

Education management software digitizes the strategic implementation of human and material resources for smooth execution of Education management System.

Jobs

6

About the company

Jobs

3

About the company

Jobs

4