DataToBiz Pvt. Ltd.

https://datatobiz.com

About

With vision comes insight, and with insight comes faith. We deliver precise information and insights so that you can see the facts and make the required decision with confidence when it comes to making a business move. Everything now rests on assurance, the assurance that comes from information derived from collected raw data. We at DataToBiz help frame and explore that raw data to bring forth the facts behind it. These facts can help you fuel your business to rise above all others.

Jobs at DataToBiz Pvt. Ltd.

The recruiter has not been active on this job recently. You may apply but please expect a delayed response.

We are seeking a highly skilled and experienced Power BI Lead / Architect to join our growing team. The ideal candidate will have a strong understanding of data warehousing, data modeling, and business intelligence best practices. This role will be responsible for leading the design, development, and implementation of complex Power BI solutions that provide actionable insights to key stakeholders across the organization.

Location - Pune (Hybrid 3 days)

Responsibilities:

Lead the design, development, and implementation of complex Power BI dashboards, reports, and visualizations.

Develop and maintain data models (star schema, snowflake schema) for optimal data analysis and reporting.

Perform data analysis, data cleansing, and data transformation using SQL and other ETL tools.

Collaborate with business stakeholders to understand their data needs and translate them into effective and insightful reports.

Develop and maintain data pipelines and ETL processes to ensure data accuracy and consistency.

Troubleshoot and resolve technical issues related to Power BI dashboards and reports.

Provide technical guidance and mentorship to junior team members.

Stay abreast of the latest trends and technologies in the Power BI ecosystem.

Ensure data security, governance, and compliance with industry best practices.

Contribute to the development and improvement of the organization's data and analytics strategy.

May lead and mentor a team of junior Power BI developers.

Qualifications:

8-12 years of experience in Business Intelligence and Data Analytics.

Proven expertise in Power BI development, including DAX and advanced data modeling techniques.

Strong SQL skills, including writing complex queries, stored procedures, and views.

Experience with ETL/ELT processes and tools.

Experience with data warehousing concepts and methodologies.

Excellent analytical, problem-solving, and communication skills.

Strong teamwork and collaboration skills.

Ability to work independently and proactively.

Bachelor's degree in Computer Science, Information Systems, or a related field preferred.

- Develop & Maintain Dashboards: Create interactive and visually compelling dashboards and reports in Tableau, ensuring they meet business requirements and provide actionable insights.

- Data Analysis & Visualization: Design data models and build visualizations to summarize large sets of data, ensuring accuracy, consistency, and clarity in the reports.

- SQL Querying: Write complex SQL queries to extract and transform data from different data sources (databases, APIs, etc.), ensuring optimal performance.

- Data Cleansing: Clean, validate, and prepare data for analysis, ensuring data integrity and consistency.

- Collaboration: Work closely with cross-functional teams, including business analysts, data engineers, and stakeholders, to gather requirements and deliver customized reporting solutions.

- Troubleshooting & Support: Provide technical support and troubleshooting for Tableau reports, dashboards, and data integration issues.

- Performance Optimization: Optimize Tableau workbooks, dashboards, and queries for better performance and scalability.

- Best Practices: Ensure Tableau development follows best practices for data visualization, performance optimization, and user experience.

Website Performance Monitoring & Optimization:

- Monitor website load speed and ensure optimal user experience across devices.

- Identify performance gaps, suggest improvements, and track progress.

- Conduct website audits focusing on technical SEO, usability, and site speed.

Visual Analytics & User Behavior Analysis:

- Track visual analytics (heatmaps, session recordings) to identify issues.

- Report on user behavior patterns and suggest actionable improvements.

- Identify and report website issues such as broken links, slow-loading pages, or improper visual elements.

Collaboration & Reporting:

- Work with the marketing and development teams to align website goals with business objectives.

- Provide regular performance reports, highlighting issues, insights, and recommendations for improvements.

As a Data Warehouse Engineer in our team, you should have a proven ability to deliver high-quality work on time and with minimal supervision.

Develop or modify procedures to solve complex database design problems, including performance, scalability, security, and integration issues for various clients (on-site and off-site).

Design, develop, test, and support the data warehouse solution.

Adopt best practices and industry standards, ensuring top-quality deliverables and playing an integral role in cross-functional system integration.

Design and implement formal data warehouse testing strategies and plans including unit testing, functional testing, integration testing, performance testing, and validation testing.

Evaluate all existing hardware and software against required standards, and configure hardware clusters according to the scale of the data.

Data integration using enterprise development toolsets (e.g., ETL, MDM, CDC, Data Masking, Quality).

Maintain and develop all logical and physical data models for enterprise data warehouse (EDW).

Contribute to the long-term vision of the enterprise data warehouse (EDW) by delivering Agile solutions.

Interact with end users/clients and translate business language into technical requirements.

Act independently to expose and resolve problems.

Participate in data warehouse health monitoring and performance optimizations as well as quality documentation.

Job Requirements:

2+ years of experience in software development and data warehouse development for enterprise analytics.

2+ years of working with Python; significant experience with Redshift is a must, along with exposure to other warehousing tools.

Deep expertise in data warehousing, dimensional modeling and the ability to bring best practices with regard to data management, ETL, API integrations, and data governance.

Experience working with data retrieval and manipulation tools for various data sources, such as relational databases (MySQL, PostgreSQL, Oracle) and cloud-based storage.

Experience with analytic and reporting tools (Tableau, Power BI, SSRS, SSAS). Experience in AWS cloud stack (S3, Glue, Redshift, Lake Formation).

Experience in various DevOps practices helping the client to deploy and scale the systems as per requirement.

Strong verbal and written communication skills with other developers and business clients.

Knowledge of Logistics and/or Transportation Domain is a plus.

Ability to handle and ingest very large data sets (both real-time and batched data) efficiently.

Pipelines should be optimised to handle real-time data, batch-update data, and historical data.

Establish scalable, efficient, automated processes for complex, large scale data analysis.

Write high quality code to gather and manage large data sets (both real time and batch data) from multiple sources, perform ETL and store it in a data warehouse.

Manipulate and analyse complex, high-volume, high-dimensional data from varying sources using a variety of tools and data analysis techniques.

Participate in data pipelines health monitoring and performance optimisations as well as quality documentation.

Interact with end users/clients and translate business language into technical requirements.

Act independently to expose and resolve problems.

Job Requirements:

2+ years of experience in software development and data pipeline development for enterprise analytics.

2+ years of working with Python, with exposure to various warehousing tools.

In-depth experience with any of the commercial tools such as AWS Glue, Talend, Informatica, DataStage, etc.

Experience with various relational databases such as MySQL, MSSQL, Oracle, etc. is a must.

Experience with analytics and reporting tools (Tableau, Power BI, SSRS, SSAS).

Experience in various DevOps practices helping the client to deploy and scale the systems as per requirement.

Strong verbal and written communication skills with other developers and business clients.

Knowledge of Logistics and/or Transportation Domain is a plus.

Hands-on experience with traditional databases and ERP systems such as Sybase and PeopleSoft.

Similar companies

About the company

About Us

Incubyte is an AI-first software development agency, built on the foundations of software craft, where the “how” of building software matters as much as the “what”. We partner with companies of all sizes, from helping enterprises build, scale, and modernize to helping pre-product founders bring their ideas to life.

Incubees are power users of AI across the SDLC for speed and efficiency. Guided by Software Craftsmanship values and eXtreme Programming practices, we bring velocity together with the discipline of quality engineering to deliver high-impact, reliable solutions – fast.

Guiding Principles

Living and breathing our guiding principles on a daily basis is key to success at Incubyte:

- Relentless Pursuit of Quality with Pragmatism: Striving for exceptional quality without losing sight of practical delivery needs.

- Extreme Ownership: Taking full responsibility for decisions, work, and outcomes.

- Proactive Collaboration: Actively seeking opportunities to collaborate and leverage the team’s collective strengths.

- Active Pursuit of Mastery: Continuously learning, improving, and mastering the craft, never settling for ‘good enough’.

- Invite, Give, and Act on Feedback: Creating a culture of timely, respectful, actionable feedback for mutual growth.

- Ensuring Client Success: Acting as trusted consultants and focusing on delivering true value and outcomes for clients.

Job Description

This is a remote position

Experience Level

This role is ideal for engineers with 5+ years of total experience, including strong hands-on software development experience and 1+ year of technical leadership experience, with a proven track record of shipping complex projects successfully.

An experienced individual contributor and leader who thrives in large, complex projects with widespread impact.

Role Overview

As a Senior Engineer, you’ll ensure that projects don’t just get built — they get shipped. You’ll be the driving force behind architecture design, technical decision-making, project delivery, and stakeholder communication.

A Senior Craftsperson is a multiplier for any team:

- Able to independently own ill-defined, highly ambiguous projects.

- Thinks holistically across Product, Design, Platform, and Operations to deliver highly impactful solutions.

- Shapes roadmaps to tackle complex problems incrementally.

- Raises the quality, correctness, and suitability of their team’s work, with visible impact across their business domain and beyond.

- Strong mentor, role model, and coach for other engineers.

If you take pride in shipping high-quality software, mentoring teams, and creating an environment where engineers can thrive, we’d love to hear from you.

What You’ll Do

- Lead projects end-to-end, from architecture to deployment, ensuring timely, high-quality delivery.

- Collaborate with Engineering and Product Managers to plan, scope, and break work into manageable tasks.

- Always know if the project can ship, with clear trade-offs when needed.

- Drive technical decisions with a shipping-first mindset and active participation in key meetings.

- Maintain deep knowledge of your services, identifying risks and creating mitigation strategies.

- Review code for quality and best practices, mentoring engineers to improve their craft.

- Communicate clearly with stakeholders, set realistic expectations, and build trust.

- Support and guide engineers, helping unblock issues and foster collaboration.

- Anticipate challenges, prepare fallback plans, and facilitate problem-solving.

- Keep documentation accurate, up-to-date, and accessible.

You will also:

- Lead highly ambiguous projects of critical business impact, balancing engineering, operational, and client priorities.

- Link technical contributions directly to business impact, helping the team and stakeholders align.

- Contribute meaningfully to team goals, with visibility into business objectives over multiple quarters.

- Ensure safe rollout of new features through incremental releases, monitoring, and metrics.

- Anticipate and mitigate risks across connected systems, ensuring minimal operational impact.

- Proactively improve system quality and longevity while leveling up those around you.

- Shape roadmaps, vision, and practices of the engineering discipline, influencing both your team and the wider business.

Requirements

What We’re Looking For

- 5+ years of software development experience, with strong architectural design skills.

- Strong hands-on experience with Python and PHP (must-have) and React.js (good-to-have).

- 1+ year in a technical leadership role, managing pods or cross-functional teams.

- Proficiency in system design, service ownership, and technical documentation.

- Strong experience with code reviews and quality assurance practices.

- Proven ability to communicate effectively with technical and non-technical stakeholders.

- Track record of delivering complex projects on time.

You will also bring:

- Ability to own large, complex projects with widespread impact.

- Demonstrated influence beyond the immediate team, shaping outcomes across a business domain.

- Strengths in stakeholder management and navigating complex scenarios.

- Skills in deliberate discovery to uncover unknowns and design solutions that succeed in real-world conditions.

Benefits

What We Offer

Dedicated Learning & Development Budget: Fuel your growth with a budget dedicated solely to learning.

Conference Talks Sponsorship: Amplify your voice! If you’re speaking at a conference, we’ll fully sponsor and support your talk.

Cutting-Edge Projects: Work on exciting projects with the latest AI technologies.

Employee-Friendly Leave Policy: Recharge with ample leave options designed for a healthy work-life balance.

Comprehensive Medical & Term Insurance: Full coverage for you and your family’s peace of mind.

And More: Extra perks to support your well-being and professional growth.

Work Environment

- Remote-First Culture: At Incubyte, we thrive on a culture of structured flexibility: while you have control over where and how you work, everyone commits to a consistent rhythm that supports their team during core working hours for smooth collaboration and timely project delivery. By striking a balance between freedom and responsibility, we are able to deliver the high quality standards our customers recognize us by. With asynchronous tools and a push for active participation, we foster a vibrant, hands-on environment where each team member’s engagement and contributions drive impactful results.

- Work-In-Person: Twice a year, we come together for two-week sprints to collaborate in person, foster stronger team bonds, and align on goals. Additionally, we host an annual retreat to recharge and connect as a team. All travel expenses are covered.

- Proactive Collaboration: Collaboration is central to our work. Through daily pair programming sessions, we focus on mentorship, continuous learning, and shared problem-solving. This hands-on approach keeps us innovative and aligned as a team.

Incubyte is an equal opportunity employer. We celebrate diversity and are committed to creating an inclusive environment for all employees.

Jobs

11

About the company

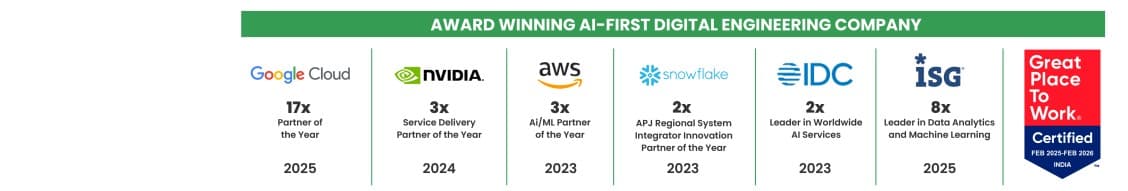

Quantiphi is an award-winning AI-first digital engineering company driven by the desire to reimagine and realize transformational opportunities at the heart of the business. Since its inception in 2013, Quantiphi has solved the toughest and most complex business problems by combining deep industry experience, disciplined cloud, and data-engineering practices, and cutting-edge artificial intelligence research to achieve accelerated and quantifiable business results.

Jobs

4

About the company

Estuate is a global IT services company that offers innovative software solutions ranging from Product Engineering services to Subscription Billing and GRC to Digital Transformation. They help businesses thrive with their out-of-the-box tech solutions and expert consulting services. Estuate's IBM InfoSphere Optim Archive Viewer 11.7 is a powerful and intuitive solution for accessing and analyzing archived data, fully compatible with IBM’s Cloud Pak for Data technology stack.

Estuate's software solutions aid organizations in managing data properly throughout its lifetime, allowing them to make informed decisions and stay compliant with industry regulations. Their IBM InfoSphere Optim Archive family of tools handles older data in active applications and retains data in retired applications for legal, regulatory, or analytical purposes. Industries in which Estuate operates include healthcare, finance, retail, and technology. They have a worldwide presence with operations in several parts of the world including Canada, India, and the UK.

Jobs

5

About the company

Welcome to Neogencode Technologies, an IT services and consulting firm that provides innovative solutions to help businesses achieve their goals. Our team of experienced professionals is committed to providing tailored services to meet the specific needs of each client. Our comprehensive range of services includes software development, web design and development, mobile app development, cloud computing, cybersecurity, digital marketing, and skilled resource acquisition. We specialize in helping our clients find the right skilled resources to meet their unique business needs. At Neogencode Technologies, we prioritize communication and collaboration with our clients, striving to understand their unique challenges and provide customized solutions that exceed their expectations. We value long-term partnerships with our clients and are committed to delivering exceptional service at every stage of the engagement. Whether you are a small business looking to improve your processes or a large enterprise seeking to stay ahead of the competition, Neogencode Technologies has the expertise and experience to help you succeed. Contact us today to learn more about how we can support your business growth and provide skilled resources to meet your business needs.

Jobs

355

About the company

Highfly Sourcing is one of the best immigration consultants in Delhi, with expertise in providing quality immigration services to individuals, families, and corporate clients who are seeking to settle, work, study, visit, or move temporarily or permanently to Canada, Australia, New Zealand, Denmark, Germany, the UK, Hong Kong, Singapore, and other countries globally.

We are one of the best immigration consulting services in the country. It is a matter of great pride that, to date, our success rate has been one hundred percent. There is no doubt that any assignment we take up succeeds for not just one but many reasons. From planning to execution, everything has to be done with the utmost perfection.

Here at Highfly Sourcing, your personal and professional needs are taken into consideration before recommending a visa for you. Our consulting professionals are there to study your profile thoroughly and counsel you as per your future aspirations. We assure you that once you meet our consulting professionals, all your doubts and queries will take a back seat, and you will want to be proactive enough to complete the process at the earliest and take a flight to your dream country.

Our Post Landing services will be an added advantage in your new country, as we will provide you with job search services, pick-up assistance, and accommodation assistance. We will be there for you until you are permanently settled in the country.

Jobs

24
