Propellor.ai

https://propellor.ai

About

Who we are

At Propellor, we are passionate about solving the Data Unification challenges faced by our clients. We build solutions using the latest tech stack. We believe all solutions lie in the congruence of Business, Technology, and Data Science. Combining the three, our team of young data professionals solves real-world problems. Here's what we live by:

Skin in the game

We believe that Individual and Collective success orientations both propel us ahead.

Cross Fertility

Borrowing from and building on one another’s varied perspectives means we are always viewing business problems with a fresh lens.

Sub 25's

A bunch of young turks, who keep our explorer mindset alive and kicking.

Future-proofing

Keeping an eye ahead, we are upskilling constantly, staying relevant at any given point in time.

Tech Agile

Tech changes quickly. Whatever your stack, we adapt speedily and easily.

If you are evaluating us to be your next employer, we urge you to read more about our team and culture here: https://bit.ly/3ExSNA2. We assure you, it's worth a read!

Jobs at Propellor.ai

The recruiter has not been active on this job recently. You may apply but please expect a delayed response.

Job Description: Data Scientist

At Propellor.ai, we derive insights that allow our clients to make scientific decisions. We believe in demanding more from the fields of Mathematics, Computer Science, and Business Logic. Combine these and we show our clients a 360-degree view of their business. In this role, the Data Scientist will be expected to work on Procurement problems along with a team based across the globe.

We are a Remote-First Company.

Read more about us here: https://www.propellor.ai/consulting

What will help you be successful in this role

- Articulate

- High Energy

- Passion to learn

- High sense of ownership

- Ability to work in a fast-paced and deadline-driven environment

- Loves technology

- Highly skilled at Data Interpretation

- Problem solver

- Ability to narrate the story to the business stakeholders

- Generate insights and the ability to turn them into actions and decisions

Skills to work in a challenging, complex project environment

- Need you to be naturally curious and have a passion for understanding consumer behavior

- A high level of motivation, passion, and high sense of ownership

- Excellent communication skills needed to manage an incredibly diverse slate of work, clients, and team personalities

- Flexibility to work on multiple projects and deadline-driven fast-paced environment

- Ability to work in ambiguity and manage the chaos

Key Responsibilities

- Analyze data to unlock insights: Ability to identify relevant insights and actions from data. Use regression, cluster analysis, time series, etc. to explore relationships and trends in response to stakeholder questions and business challenges.

- Bring in experience for AI and ML: Bring in Industry experience and apply the same to build efficient and optimal Machine Learning solutions.

- Exploratory Data Analysis (EDA) and Generate Insights: Analyse internal and external datasets using analytical techniques, tools, and visualization methods. Ensure pre-processing/cleansing of data and evaluate data points across the enterprise landscape and/or external data points that can be leveraged in machine learning models to generate insights.

- DS and ML Model Identification and Training: Identify, test, and train machine learning models that need to be leveraged for business use cases. Evaluate models based on interpretability, performance, and accuracy as required. Experiment and identify features from datasets that will help influence model outputs. Determine what models will need to be deployed, data points that need to be fed into models, and aid in the deployment and maintenance of models.

Technical Skills

An enthusiastic individual with the following skills. Please do not hesitate to apply if you do not match all of them. We are open to promising candidates who are passionate about their work, fast learners, and team players.

- Strong experience with machine learning and AI including regression, forecasting, time series, cluster analysis, classification, Image recognition, NLP, Text Analytics and Computer Vision.

- Strong experience with advanced analytics tools for Object-oriented/object function scripting using languages such as Python, or similar.

- Strong experience with popular database programming languages including SQL.

- Strong experience in Spark/Pyspark

- Experience in working in Databricks

What company benefits do you get when you join us?

- Permanent Work from Home Opportunity

- Opportunity to work with Business Decision Makers and an internationally based team

- A work environment that offers limitless learning

- A culture devoid of bureaucracy and hierarchy

- A culture of being open, direct, and with mutual respect

- A fun, high-caliber team that trusts you and provides the support and mentorship to help you grow

- The opportunity to work on high-impact business problems that are already defining the future of Marketing and improving real lives

To know more about how we work: https://bit.ly/3Oy6WlE

Whom will you work with?

You will closely work with other Senior Data Scientists and Data Engineers.

Immediate to 15-day Joiners will be preferred.

Job Description - Data Engineer

About us

Propellor is aimed at bringing Marketing Analytics and other Business Workflows to the Cloud ecosystem. We work with International Clients to make their Analytics ambitions come true, by deploying the latest tech stack and data science and engineering methods, making their business data insightful and actionable.

What is the role?

This team is responsible for building a Data Platform for many different units. This platform will be built on Cloud and therefore in this role, the individual will be organizing and orchestrating different data sources, and giving recommendations on the services that fulfil goals based on the type of data.

Qualifications:

• Experience with Python, SQL, Spark

• Knowledge/notions of JavaScript

• Knowledge of data processing, data modeling, and algorithms

• Strong in data, software, and system design patterns and architecture

• API building and maintaining

• Strong soft skills, communication

Nice to have:

• Experience with cloud: Google Cloud Platform, AWS, Azure

• Knowledge of Google Analytics 360 and/or GA4.

Key Responsibilities

• Design and develop platform based on microservices architecture.

• Work on the core backend and ensure it meets the performance benchmarks.

• Work on the front end with ReactJS.

• Designing and developing APIs for the front end to consume.

• Constantly improve the architecture of the application by clearing the technical backlog.

• Meeting both technical and consumer needs.

• Staying abreast of developments in web applications and programming languages.

What are we looking for?

An enthusiastic individual with the following skills. Please do not hesitate to apply if you do not match all of them. We are open to promising candidates who are passionate about their work and are team players.

• Education - BE/MCA or equivalent.

• Agnostic/Polyglot with multiple tech stacks.

• Worked on open-source technologies – NodeJS, ReactJS, MySQL, NoSQL, MongoDB, DynamoDB.

• Good experience with Front-end technologies like ReactJS.

• Backend exposure – good knowledge of building API.

• Worked on serverless technologies.

• Proficient in building microservices that combine server and front-end.

• Knowledge of cloud architecture.

• Should have sound working experience with relational and columnar DB.

• Should be innovative and communicative in approach.

• Will be responsible for the functional/technical track of a project.

Whom will you work with?

You will closely work with the engineering team and support the Product Team.

Hiring Process includes:

a. Written Test on Python and SQL

b. 2 - 3 rounds of Interviews

Immediate Joiners will be preferred

Big Data Engineer/Data Engineer

What we are solving

Welcome to today’s business data world where:

• Unification of all customer data into one platform is a challenge

• Extraction is expensive

• Business users do not have the time/skill to write queries

• High dependency on tech team for written queries

These facts may look scary but there are solutions with real-time self-serve analytics:

• Fully automated data integration from any kind of data source into a universal schema

• Analytics database that streamlines data indexing, query and analysis into a single platform.

• Start generating value from Day 1 through deep dives, root cause analysis and micro segmentation

At Propellor.ai, this is what we do.

• We help our clients reduce effort and increase effectiveness quickly

• By clearly defining the scope of Projects

• Using dependable, scalable, future-proof technology solutions such as Big Data solutions and Cloud platforms

• Engaging with Data Scientists and Data Engineers to provide End to End Solutions leading to industrialisation of Data Science Model Development and Deployment

What we have achieved so far

Since we started in 2016,

• We have worked across 9 countries with 25+ global brands and 75+ projects

• We have 50+ clients, 100+ Data Sources and 20TB+ data processed daily

Work culture at Propellor.ai

We are a small, remote team that believes in

• Working with few, but only the highest-quality, team members who want to become the very best in their fields.

• With each member's belief and faith in what we are solving, we collectively see the Big Picture

• With no hierarchy, we believe in reaching the decision maker without hesitation so that our actions have fruitful and aligned outcomes.

• Each one is the CEO of their domain. So, the criterion when making a choice is that our employees and clients can succeed together!

To read more about us click here:

https://bit.ly/3idXzs0

About the role

We are building an exceptional team of Data Engineers who are passionate developers and want to push the boundaries to solve complex business problems using the latest tech stack. As a Big Data Engineer, you will work with various Technology and Business teams to deliver our Data Engineering offerings to our clients across the globe.

Role Description

• The role would involve big data pre-processing & reporting workflows including collecting, parsing, managing, analysing, and visualizing large sets of data to turn information into business insights

• Develop the software and systems needed for end-to-end execution on large projects

• Work across all phases of SDLC, and use Software Engineering principles to build scalable solutions

• Build the knowledge base required to deliver increasingly complex technology projects

• The role would also involve testing various machine learning models on Big Data and deploying learned models for ongoing scoring and prediction.

Education & Experience

• B.Tech. or equivalent degree in CS/CE/IT/ECE/EEE

• 3+ years of experience designing technological solutions to complex data problems, developing & testing modular, reusable, efficient and scalable code to implement those solutions.

Must have (hands-on) experience

• Python and SQL expertise

• Distributed computing frameworks (Hadoop Ecosystem & Spark components)

• Must be proficient in any Cloud computing platform (AWS/Azure/GCP)

• Experience in any cloud platform would be preferred - GCP (BigQuery/Bigtable, Pub/Sub, Dataflow, App Engine)/AWS/Azure

• Linux environment, SQL and Shell scripting

Desirable

• Statistical or machine learning DSL like R

• Distributed and low latency (streaming) application architecture

• Row store distributed DBMSs such as Cassandra, CouchDB, MongoDB, etc.

• Familiarity with API design

Hiring Process:

1. One phone screening round to gauge your interest and knowledge of fundamentals

2. An assignment to test your skills and ability to come up with solutions in a certain time

3. Interview 1 with our Data Engineer lead

4. Final Interview with our Data Engineer Lead and the Business Teams

Immediate joiners will be preferred

Similar companies

About the company

We are Hiver. We are a bunch of folks with borderline devotional love for email, committed to the idea of making it noise-free and collaborative for teams. Why email you may ask? Email is one of the most common ways to communicate at work, is far less distracting than its chat counterparts, and lets you respond at your own pace. But it hasn’t evolved over the years to meet the changing collaboration needs of businesses. Teams have to rely on inefficient methods like Forwards and CCs to share information, often leading to messy threads and missed emails. Not to mention the toll it takes on your productivity, ability to concentrate and all things zen. We at Hiver are trying to solve this by giving email collaborative superpowers and, in the process, giving you back the most important currency there is - time.

Jobs

4

About the company

The Wissen Group was founded in the year 2000. Wissen Technology, a part of Wissen Group, was established in the year 2015. Wissen Technology is a specialized technology company that delivers high-end consulting for organizations in the Banking & Finance, Telecom, and Healthcare domains.

With offices in US, India, UK, Australia, Mexico, and Canada, we offer an array of services including Application Development, Artificial Intelligence & Machine Learning, Big Data & Analytics, Visualization & Business Intelligence, Robotic Process Automation, Cloud, Mobility, Agile & DevOps, Quality Assurance & Test Automation.

Leveraging our multi-site operations in the USA and India and availability of world-class infrastructure, we offer a combination of on-site, off-site and offshore service models. Our technical competencies, proactive management approach, proven methodologies, committed support and the ability to quickly react to urgent needs make us a valued partner for any kind of Digital Enablement Services, Managed Services, or Business Services.

We believe that the technology and thought leadership that we command in the industry is the direct result of the kind of people we have been able to attract, to form this organization (you are one of them!).

Our workforce consists of 1000+ highly skilled professionals, with leadership and senior management executives who have graduated from Ivy League Universities like MIT, Wharton, IITs, IIMs, and BITS and with rich work experience in some of the biggest companies in the world.

Wissen Technology has been certified as a Great Place to Work®. The technology and thought leadership that the company commands in the industry is the direct result of the kind of people Wissen has been able to attract. Wissen is committed to providing them the best possible opportunities and careers, which extends to providing the best possible experience and value to our clients.

Jobs

456

About the company

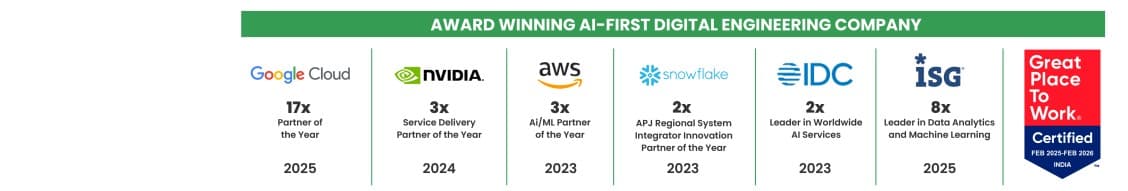

Quantiphi is an award-winning AI-first digital engineering company driven by the desire to reimagine and realize transformational opportunities at the heart of the business. Since its inception in 2013, Quantiphi has solved the toughest and most complex business problems by combining deep industry experience, disciplined cloud, and data-engineering practices, and cutting-edge artificial intelligence research to achieve accelerated and quantifiable business results.

Jobs

4

About the company

Automate Accounts is a technology-driven company dedicated to building intelligent automation solutions that streamline business operations and boost efficiency. We leverage modern platforms and tools to help businesses transform their workflows with cutting-edge solutions.

Jobs

3

About the company

Peak Hire Solutions is a leading Recruitment Firm that provides our clients with innovative IT / Non-IT Recruitment Solutions. We pride ourselves on our creativity, quality, and professionalism. Join our team and be a part of shaping the future of Recruitment.

Jobs

250

About the company

Jobs

3

About the company

Building the most advanced ad blocker on the planet!🌎

Loved by 3,50,000+ users on Chrome!

Jobs

1

About the company

Jobs

5

About the company

Jobs

5

About the company

Jobs

1