CLOUDSUFI

https://cloudsufi.com

About

We exist to eliminate the gap between “Human Intuition” and “Data-Backed Decisions”

Data is the new oxygen, and we believe no organization can live without it. We partner with our customers to get to the core of their problems, enable the data supply chain and help them monetize their data. We make enterprise data dance!

Our work elevates the quality of lives for our family, customers, partners and the community.

The human values we display in all our interactions are:

Passion – we are committed in heart and head

Integrity – we are real, honest, and fair

Empathy – we understand business isn't just B2B or B2C; it is H2H, i.e., Human to Human

Boldness – we have the courage to think and do differently

The CLOUDSUFI Foundation embraces the power of legacy and the wisdom of those who have helped lay the foundation for all of us: our seniors. We believe in their abilities, and we pledge to equip them, to provide them jobs, and to bring them sufi joy.

Jobs at CLOUDSUFI

Technical Project Manager

Current Location - Bangalore

Remote (with quarterly visit to Noida)

You can share your resume at ayushi.dwivedi at the rate cloudsufi.com

Role Overview

We are seeking a highly technical and execution-oriented Technical Project Manager (TPM) to lead the delivery of core Platform Engineering capabilities and critical Enterprise Application Integrations (EAI) for CLOUDSUFI customers. Unlike a traditional PM, this role is deeply embedded in the "how" of technical delivery, ensuring that complex, cloud-native infrastructure projects are executed on time, within scope, and with high technical integrity.

The ideal candidate acts as the bridge between high-level architectural design and day-to-day engineering execution, possessing deep expertise in GCP (Google Cloud Platform) and modern integration patterns.

Key Responsibilities

- Technical Execution & Delivery: Lead the end-to-end project lifecycle for platform engineering and EAI initiatives. Convert high-level roadmaps into actionable technical workstreams, ensuring milestones are met across multi-disciplinary teams.

- Sprint & Release Management: Facilitate technical grooming, sprint planning, and daily stand-ups. Manage the velocity and throughput of the platform engineering team, ensuring that technical debt is balanced against feature delivery.

- Dependency & Risk Mitigation: Proactively identify and resolve technical blockers, resource constraints, and cross-team dependencies. Maintain a rigorous risk register for complex integration projects involving third-party systems.

- Technical Scoping & Documentation: Collaborate with Architects to translate business requirements into detailed technical specifications, data flow diagrams, and API documentation. Ensure the technical team has a clear, unambiguous path to implementation.

- Stakeholder Coordination: Serve as the primary technical point of contact for external customers and internal business units. Communicate project status, technical risks, and architectural trade-offs to both executive and technical audiences.

- Quality & Operational Excellence: Define and track project-based KPIs such as deployment frequency, mean time to recovery (MTTR), and integration success rates. Ensure all deliveries meet CLOUDSUFI’s high standards for security and scalability.

- GCP Ecosystem Oversight: Direct the implementation of services within the Google Cloud ecosystem, ensuring projects leverage GCP best practices for cost-optimization and performance.

Experience and Qualifications

- Experience: 8+ years of experience in Technical Project Management or Engineering Management, with at least 3 years specifically focused on Cloud Infrastructure, Platform Engineering, or EAI.

- Technical Depth (GCP mandatory): Deep hands-on familiarity with Google Cloud Platform services (GKE, Pub/Sub, Cloud Functions, Apigee).

- Integrations: Proven track record of delivering large-scale EAI projects (API Gateways, Event-Driven Architecture, Service Mesh).

- Cloud-Native: Strong understanding of Kubernetes, Docker, CI/CD pipelines (GitLab/Jenkins), and Infrastructure as Code (Terraform).

- Project Leadership: Exceptional ability to lead "deep-tech" teams. You should be able to challenge technical estimates and understand code-level blockers without necessarily writing the code yourself.

- Agile Mastery: Expert-level proficiency in Scrum and Kanban. Advanced skills in Jira (setting up complex workflows, dashboards, and automation) and Confluence.

- Education: Bachelor’s or Master’s degree in Computer Science, Information Technology, or a related engineering field.

- Certifications (required): PMP, PRINCE2, or CSM (Certified Scrum Master).

- Certifications (preferred): Google Cloud Professional Cloud Architect or Professional Data Engineer.

About Us:

CLOUDSUFI, a Google Cloud Premier Partner, is a Data Science and Product Engineering organization building products and solutions for technology and enterprise industries. We firmly believe in the power of data to transform businesses and enable better decisions. We combine unmatched experience in business processes with cutting-edge infrastructure and cloud services. We partner with our customers to monetize their data and make enterprise data dance.

Our Values:

We are a passionate and empathetic team that prioritizes human values. Our purpose is to elevate the quality of lives for our family, customers, partners, and the community.

Equal Opportunity Statement:

CLOUDSUFI is an equal opportunity employer. We celebrate diversity and are committed to creating an inclusive environment for all employees. All qualified candidates receive consideration for employment without regard to race, color, religion, gender, gender identity or expression, sexual orientation, or national origin. We provide equal opportunities in employment, advancement, and all other areas of our workplace.

Role : Lead AI/Senior Engineer-AI

Location : Noida, Delhi/NCR

Experience : 5-12 years

Education : BTech / BE / MCA / MSc Computer Science

Must Haves :

Conversational AI & NLU :

- Advanced proficiency with Dialogflow CX

- Intent classification, entity extraction, conversation flow design

- Experience building structured dialogue flows with routing logic

- CCAI platform familiarity

Agentic AI & Multi-Step Reasoning :

- Production experience with Google ADK (or LangChain/LangGraph equivalent)

- Multi-step reasoning and tool orchestration capability

- Tool-use patterns and function calling implementation

RAG Systems & Knowledge Management :

- Hands-on Vertex AI RAG Engine experience (or equivalent)

- Semantic search, chunking strategies, retrieval optimization

- Document processing pipelines (PDF parsing, chunking)

LLM/GenAI & Prompt Engineering :

- Production experience with Gemini models

- Advanced prompt engineering for customer support

- Langfuse experience for prompt management

Google Cloud Platform & Vertex AI :

- Advanced Vertex AI proficiency (Generative AI APIs, Agent Engine)

- Cloud Functions and Cloud Run deployment experience

- BigQuery for conversation analytics

API Integration :

- Genesys Cloud CX integration experience

- REST API design and webhook implementation

- Enterprise authentication patterns (OAuth 2.0)

Good To Have :

Conversational AI & NLU :

- Multi-language support implementation (Spanish/English)

- Telephony integration (speech recognition, TTS, DTMF)

- Barge-in handling and voice optimization

Agentic AI :

- Agent state management and session persistence

- Advanced fallback strategies and error recovery

- Dynamic tool selection and evaluation

RAG Systems :

- Re-ranking and advanced retrieval quality metrics

- Query expansion and context-aware retrieval

- Corpus organization strategies

LLM/GenAI :

- Prompt versioning, A/B testing, iterative refinement

- Prompt injection mitigation strategies

- In-context learning, few-shot, chain-of-thought techniques

LLMOps & Observability :

- Vertex AI Evaluation Service experience

- Groundedness, relevance, coherence, safety metrics

- Trace-level debugging with Cloud Trace

- Centralized logging strategies

Google Cloud :

- Application Integration connectors

- VPC Service Controls and enterprise security

- Cloud Pub/Sub for event-driven systems

Enterprise Integration :

- Third-party AI agent orchestration (SAP Joule, ServiceNow AI, Agentforce)

- Salesforce, SAP, ServiceNow integration patterns

- Context-passing strategies for escalations

Architecture & System Design :

- Configuration-driven systems (Meta-Agent patterns)

- Microservices and containerization

- Scalable, multi-tenant system design

- Disaster recovery and failover strategies

Product Quality & KPIs :

- Customer support metrics expertise (CSAT, SSR, escalation rate)

- A/B testing and experimentation frameworks

- User feedback loop implementation

Deliverables :

- Architecture Design : End-to-end platform architecture, data flow diagrams, Dialogflow CX vs. ADK routing decisions

- Conversational Flows : 15+ dialogue flows covering billing, networking, appointments, troubleshooting, and escalations

- ADK Agent Implementation : Complex reasoning agents for technical support, account analysis, and context preparation

- RAG Pipeline : Document processing, chunking configuration, corpus organization (product docs, support articles, policies, promotions)

- Prompt Management : System prompts, Langfuse setup, playbook governance, version control

- Quality Framework : Evaluation pipeline, metrics dashboards, automated assessment, continuous improvement recommendations

- Integration Layer : Genesys handoff, webhook integrations, Application Integration setup, session management

- Testing & Validation : Conversation flow tests, performance testing (latency, throughput, 1000 concurrent users), security validation

- Response time <2 seconds (p95), 99.9% uptime, 1000 concurrent conversations

- Data encryption (TLS 1.2+, AES-256 at rest), PII redaction, 1-year data retention

- Graceful degradation and fallback mechanisms

About Us:

CLOUDSUFI, a Google Cloud Premier Partner, is a leading global provider of data-driven digital transformation across cloud-based enterprises. With a global presence and a focus on Software & Platforms, Life Sciences and Healthcare, Retail, CPG, Financial Services, and Supply Chain, CLOUDSUFI is positioned to meet customers where they are in their data monetization journey.

Job Summary:

We are seeking a highly skilled and motivated Data Engineer to join our Development POD for the Integration Project. The ideal candidate will be responsible for designing, building, and maintaining robust data pipelines to ingest, clean, transform, and integrate diverse public datasets into our knowledge graph. This role requires a strong understanding of Google Cloud Platform (GCP) services, data engineering best practices, and a commitment to data quality and scalability.

Key Responsibilities:

- ETL Development: Design, develop, and optimize data ingestion, cleaning, and transformation pipelines for various data sources (e.g., CSV, API, XLS, JSON, SDMX) using Google Cloud Platform services (Cloud Run, Dataflow) and Python.

- Schema Mapping & Modeling: Work with LLM-based auto-schematization tools to map source data to our schema.org vocabulary, defining appropriate Statistical Variables (SVs) and generating MCF/TMCF files.

- Entity Resolution & ID Generation: Implement processes for accurately matching new entities with existing IDs or generating unique, standardized IDs for new entities.

- Knowledge Graph Integration: Integrate transformed data into the Knowledge Graph, ensuring proper versioning and adherence to existing standards.

- API Development: Develop and enhance REST and SPARQL APIs via Apigee to enable efficient access to integrated data for internal and external stakeholders.

- Data Validation & Quality Assurance: Implement comprehensive data validation and quality checks (statistical, schema, anomaly detection) to ensure data integrity, accuracy, and freshness. Troubleshoot and resolve data import errors.

- Automation & Optimization: Collaborate with the Automation POD to leverage and integrate intelligent assets for data identification, profiling, cleaning, schema mapping, and validation, aiming to significantly reduce manual effort.

- Collaboration: Work closely with cross-functional teams, including Managed Service POD, Automation POD, and relevant stakeholders.

Qualifications and Skills:

- Education: Bachelor's or Master's degree in Computer Science, Data Engineering, Information Technology, or a related quantitative field.

- Experience: 3+ years of proven experience as a Data Engineer, with a strong portfolio of successfully implemented data pipelines.

- Programming Languages: Proficiency in Python for data manipulation, scripting, and pipeline development.

- Cloud Platforms and Tools: Expertise in Google Cloud Platform (GCP) services, including Cloud Storage, Cloud SQL, Cloud Run, Dataflow, Pub/Sub, BigQuery, and Apigee. Proficiency with Git-based version control.

Core Competencies:

- Must have: SQL, Python, BigQuery, GCP Dataflow / Apache Beam, Google Cloud Storage (GCS)

- Must have: Proven ability in comprehensive data wrangling, cleaning, and transformation of complex datasets from various formats (e.g., API, CSV, XLS, JSON)

- Secondary skills: SPARQL, Schema.org, Apigee, CI/CD (Cloud Build), GCP, Cloud Data Fusion, data modelling

- Solid understanding of data modeling, schema design, and knowledge graph concepts (e.g., Schema.org, RDF, SPARQL, JSON-LD).

- Experience with data validation techniques and tools.

- Familiarity with CI/CD practices and the ability to work in an Agile framework.

- Strong problem-solving skills and keen attention to detail.

Preferred Qualifications:

- Experience with LLM-based tools or concepts for data automation (e.g., auto-schematization).

- Familiarity with similar large-scale public dataset integration initiatives.

- Experience with multilingual data integration.

About the Role

Job Title: Lead Java Developer

Location: Noida (Hybrid)

Experience: 7-12 years

Education: BTech / BE / ME / MTech / MCA / MSc Computer Science

Primary Skills - Java 8-17+, Core Java, design patterns (beyond Singleton and Factory), web services development (REST/SOAP), XML and JSON manipulation, OAuth 2.0, CI/CD, SQL/NoSQL

Secondary Skills - Kafka, Jenkins, Kubernetes, Google Cloud Platform (GCP), SAP JCo library, Terraform

Certifications (Optional): OCPJP (the Oracle Certified Professional Java Programmer) / Google Professional Cloud

Required Experience:

● Must have integration component development experience using Java 8/9 technologies and service-oriented architecture (SOA)

● Must have in-depth knowledge of design patterns and integration architecture

● Must have experience in system scalability and maintenance for complex enterprise applications and integration solutions

● Experience with developing solutions on Google Cloud Platform will be an added advantage.

● Should have good hands-on experience with software engineering tools such as Eclipse, NetBeans, Jira, Confluence, Bitbucket, SVN, etc.

● Should be well versed in current technology trends in IT solutions, e.g., cloud platform development, DevOps, low-code solutions, and intelligent automation

Good to Have:

● Experience developing 3-4 integration adapters/connectors for enterprise applications (ERP, CRM, HCM, SCM, Billing, etc.) using industry-standard frameworks and methodologies following Agile/Scrum

Behavioral competencies required:

● Must have worked with US/Europe based clients in onsite/offshore delivery model

● Should have very good verbal and written communication, technical articulation, listening and presentation skills

● Should have proven analytical and problem-solving skills

● Should have demonstrated effective task prioritization, time management and internal/external stakeholder management skills

● Should be a quick learner and team player

● Should have experience of working under stringent deadlines in a Matrix organization structure

● Should have demonstrated appreciable Organizational Citizenship Behavior (OCB) in past organizations

Job Responsibilities:

● Write design specifications and user stories for the assigned functionalities

● Develop assigned components/classes and assist the QA team in writing test cases

● Create and maintain coding best practices and conduct peer code/solution reviews

● Participate in daily Scrum calls, sprint planning, retrospectives, and demos

● Identify technical, design, and architectural challenges and risks during execution, and develop action plans to mitigate and avert them

● Comply with the development processes, documentation templates, and tools prescribed by CLOUDSUFI and its clients

● Work with other teams and architects in the organization and assist them with technical issues, demos, POCs, and proposal writing for prospective clients

● Contribute to the creation of a knowledge repository, reusable assets/solution accelerators, and IP

● Provide feedback to junior developers and coach and mentor them

● Deliver training sessions on the latest technologies and topics to other employees in the organization

● Participate in organizational development activities from time to time: interviews, CSR/employee engagement activities, business events/conferences, and implementation of new policies, systems, and procedures as decided by the management team

Role: Project Manager

Location: Noida, Delhi NCR

Experience: 8-15 years

Education: BTech / BE / MCA / MSc Computer Science

Primary Skills -

Java 8-17+, Core Java, design patterns (beyond Singleton and Factory), multi-tenant SaaS platforms, event-driven systems, scalable cloud-native architecture, web services development (REST/SOAP), XML and JSON manipulation, OAuth 2.0, CI/CD, SQL/NoSQL, program strategy and execution, technical and architectural leadership

Secondary Skills - Kafka, Jenkins, Kubernetes, Google Cloud Platform (GCP)

Certifications (Optional): OCPJP (the Oracle Certified Professional Java Programmer) / Google Professional Cloud Developer

Required Experience:

- Must have experience in creating Program Strategy and delivery milestones for complex integration solutions on Cloud

- Serve as the technical liaison between solution architects and delivery teams, ensuring that low-level designs (LLD) align with the high-level architectural vision (HLD)

- Must have integration component development experience using Java 8/9 technologies and service-oriented architecture (SOA)

- Must have in-depth knowledge of design patterns and integration architecture

- Must have experience in Cloud-native solutions, Domain-driven design, Secure application architecture

- Must have experience in system scalability and maintenance for complex enterprise applications and integration solutions

- API security, API gateways, OAuth 2.0

- Engineering roadmaps, Architecture governance, SDLC and DevOps strategy

- Experience with developing solutions on Google Cloud Platform will be an added advantage.

- Should have good hands-on experience with Software Engineering tools viz. Eclipse, NetBeans, JIRA, Confluence, BitBucket, SVN etc.

- Should be well versed in current technology trends in IT solutions, e.g., cloud platform development, DevOps, low-code solutions, and intelligent automation

Good to Have:

- Experience of developing 3-4 integration adapters/connectors for enterprise applications (ERP, CRM, HCM, SCM, Billing etc.) using industry standard frameworks and methodologies following Agile/Scrum

Job Responsibilities:

- Write design specifications and user stories for the assigned functionalities

- Create and maintain coding best practices and conduct peer code/solution reviews

- Run daily Scrum calls, sprint planning, retrospectives, and demos

- Identify technical, design, and architectural challenges and risks during execution, and develop action plans to mitigate and avert them

- Monitor compliance with the development processes, documentation templates, and tools prescribed by CLOUDSUFI and its clients

- Work with other teams and architects in the organization and assist them with technical issues, demos, POCs, and proposal writing for prospective clients

- Contribute to the creation of a knowledge repository, reusable assets/solution accelerators, and IP

- Provide feedback to junior developers and coach and mentor them

- Deliver training sessions on the latest technologies and topics to other employees in the organization

- Participate in organizational development activities from time to time: interviews, CSR/employee engagement activities, business events/conferences, and implementation of new policies, systems, and procedures as decided by the management team

Behavioural competencies required:

- Must have worked with US/Europe based clients in onsite/offshore delivery model

- Should have very good verbal and written communication, technical articulation, listening and presentation skills

- Should have proven analytical and problem-solving skills

- Should have demonstrated effective task prioritization, time management and internal/external stakeholder management skills

- Should be a quick learner and team player

- Should have experience of working under stringent deadlines in a Matrix organization structure

- Should have demonstrated appreciable Organizational Citizenship Behavior (OCB) in past organizations

About Role:

The Senior Python Developer will lead the design and implementation of ACL crawler connectors for Workato’s search platform. This role requires deep expertise in building scalable Python services, integrating with various SaaS APIs and designing robust data models. The developer will mentor junior team members and ensure that the solutions meet the technical and performance requirements outlined in the Statement of Work.

Key Responsibilities:

- Architecture and design: Translate business requirements into technical designs for ACL crawler connectors. Define data models, API interactions and modular components using the Workato SDK.

- Implementation: Build Python services to authenticate, enumerate domain entities and extract ACL information from OneDrive, ServiceNow, HubSpot and GitHub. Implement incremental sync, pagination, concurrency and caching.

- Performance optimisation: Profile code, parallelise API calls and utilise asynchronous programming to meet crawl time SLAs. Implement retry logic and error handling for network‑bound operations.

- Testing and code quality: Develop unit and integration tests, perform code reviews and enforce best practices (type hints, linting). Produce performance reports and documentation.

- Mentoring and collaboration: Guide junior developers, collaborate with QA, DevOps and product teams, and participate in design reviews and sprint planning.

- Hypercare support: Provide Level 2/3 support during the initial rollout, troubleshoot issues, implement minor enhancements and deliver knowledge transfer sessions.

Must Have Skills and Experiences:

- Bachelor’s degree in Computer Science or related field.

- 3-8 years of Python development experience, including asynchronous programming and API integration.

- Knowledge of Python libraries: pandas, pytest, requests, asyncio

- Strong understanding of authentication protocols (OAuth 2.0, API keys) and access‑control models.

- Experience integrating with cloud or SaaS platforms such as Microsoft Graph, ServiceNow REST API, HubSpot API, and GitHub API.

- Proven ability to lead projects and mentor other engineers.

- Excellent communication skills and ability to produce clear documentation.

Optional/Good to Have Skills and Experiences:

- Experience integrating with Microsoft Graph API, ServiceNow REST API, HubSpot API, and GitHub API.

- Familiarity with the following libraries, tools, and technologies will be advantageous: aiohttp, PyJWT, aiofiles/aiocache

- Experience with containerisation (Docker), CI/CD pipelines and Workato’s connector SDK is also considered a plus.

Job Summary:

We are seeking a highly innovative and skilled AI Engineer to join our AI CoE for the Data Integration Project. The ideal candidate will be responsible for designing, developing, and deploying intelligent assets and AI agents that automate and optimize various stages of the data ingestion and integration pipeline. This role requires expertise in machine learning, natural language processing (NLP), knowledge representation, and cloud platform services, with a strong focus on building scalable and accurate AI solutions.

Key Responsibilities:

- LLM-based Auto-schematization: Develop and refine LLM-based models and techniques for automatically inferring schemas from diverse unstructured and semi-structured public datasets and mapping them to a standardized vocabulary.

- Entity Resolution & ID Generation AI: Design and implement AI models for highly accurate entity resolution, matching new entities with existing IDs and generating unique, standardized IDs for newly identified entities.

- Automated Data Profiling & Schema Detection: Develop AI/ML accelerators for automated data profiling, pattern detection, and schema detection to understand data structure and quality at scale.

- Anomaly Detection & Smart Imputation: Create AI-powered solutions for identifying outliers, inconsistencies, and corrupt records, and for intelligently filling missing values using machine learning algorithms.

- Multilingual Data Integration AI: Develop AI assets for accurately interpreting, translating (leveraging automated tools with human-in-the-loop validation), and semantically mapping data from diverse linguistic sources, preserving meaning and context.

- Validation Automation & Error Pattern Recognition: Build AI agents to run comprehensive data validation tool checks, identify common error types, suggest fixes, and automate common error corrections.

- Knowledge Graph RAG/RIG Integration: Integrate Retrieval-Augmented Generation (RAG) and Retrieval-Interleaved Generation (RIG) techniques to enhance querying capabilities and facilitate consistency checks within the Knowledge Graph.

- MLOps Implementation: Implement and maintain MLOps practices for the lifecycle management of AI models, including versioning, deployment, monitoring, and retraining on a relevant AI platform.

- Code Generation & Documentation Automation: Develop AI tools for generating reusable scripts, templates, and comprehensive import documentation to streamline development.

- Continuous Improvement Systems: Design and build learning systems, feedback loops, and error analytics mechanisms to continuously improve the accuracy and efficiency of AI-powered automation over time.

Required Skills and Qualifications:

- Bachelor's or Master's degree in Computer Science, Artificial Intelligence, Machine Learning, or a related quantitative field.

- Proven experience (e.g., 3+ years) as an AI/ML Engineer, with a strong portfolio of deployed AI solutions.

- Strong expertise in Natural Language Processing (NLP), including experience with Large Language Models (LLMs) and their applications in data processing.

- Proficiency in Python and relevant AI/ML libraries (e.g., TensorFlow, PyTorch, scikit-learn).

- Hands-on experience with cloud AI/ML services.

- Understanding of knowledge representation, ontologies (e.g., Schema.org, RDF), and knowledge graphs.

- Experience with data quality, validation, and anomaly detection techniques.

- Familiarity with MLOps principles and practices for model deployment and lifecycle management.

- Strong problem-solving skills and an ability to translate complex data challenges into AI solutions.

- Excellent communication and collaboration skills.

Preferred Qualifications:

- Experience with data integration projects, particularly with large-scale public datasets.

- Familiarity with knowledge graph initiatives.

- Experience with multilingual data processing and AI.

- Contributions to open-source AI/ML projects.

- Experience in an Agile development environment.

Benefits:

- Opportunity to work on a high-impact project at the forefront of AI and data integration.

- Contribute to solidifying a leading data initiative's role as a foundational source for grounding Large Models.

- Access to cutting-edge cloud AI technologies.

- Collaborative, innovative, and fast-paced work environment.

- Significant impact on data quality and operational efficiency.


About Us:

CLOUDSUFI, a Google Cloud Premier Partner, is a Data Science and Product Engineering organization building Products and Solutions for Technology and Enterprise industries. We firmly believe in the power of data to transform businesses and make better decisions. We combine unmatched experience in business processes with cutting-edge infrastructure and cloud services. We partner with our customers to monetize their data and make enterprise data dance.

Our Values:

We are a passionate and empathetic team that prioritizes human values. Our purpose is to elevate the quality of lives for our family, customers, partners and the community.

Equal Opportunity Statement:

CLOUDSUFI is an equal opportunity employer. We celebrate diversity and are committed to creating an inclusive environment for all employees. All qualified candidates receive consideration for employment without regard to race, color, religion, gender, gender identity or expression, sexual orientation, and national origin status. We provide equal opportunities in employment, advancement, and all other areas of our workplace.

Role: Senior Integration Engineer

Location: Remote/Delhi NCR

Experience: 4-8 years

Position Overview :

We are seeking a Senior Integration Engineer with deep expertise in building and managing integrations across Finance, ERP, and business systems. The ideal candidate will have both technical proficiency and strong business understanding, enabling them to translate finance team needs into robust, scalable, and fault-tolerant solutions.

Key Responsibilities:

- Design, develop, and maintain integrations between financial systems, ERPs, and related applications (e.g., expense management, commissions, accounting, sales)

- Gather requirements from Finance and Business stakeholders, analyze pain points, and translate them into effective integration solutions

- Build and support integrations using SOAP and REST APIs, ensuring reliability, scalability, and best practices for logging, error handling, and edge cases

- Develop, debug, and maintain workflows and automations in platforms such as Workato and Exactly Connect

- Support and troubleshoot NetSuite SuiteScript, SuiteFlow workflows, and related ERP customizations

- Write, optimize, and execute queries for Zuora (ZQL, Business Objects) and support invoice template customization (HTML)

- Implement integrations leveraging AWS (RDS, S3) and SFTP for secure and scalable data exchange

- Perform database operations and scripting using Python and JavaScript for transformation, validation, and automation tasks

- Provide functional support and debugging for finance tools such as Concur and Coupa

- Ensure integration architecture follows best practices for fault tolerance, monitoring, and maintainability

- Collaborate cross-functionally with Finance, Business, and IT teams to align technology solutions with business goals.

Qualifications:

- 3-8 years of experience in software/system integration with strong exposure to Finance and ERP systems

- Proven experience integrating ERP systems (e.g., NetSuite, Zuora, Coupa, Concur) with financial tools

- Strong understanding of finance and business processes: accounting, commissions, expense management, sales operations

- Hands-on experience with SOAP, REST APIs, Workato, AWS services, SFTP

- Working knowledge of NetSuite SuiteScript, SuiteFlow, and Zuora queries (ZQL, Business Objects, invoice templates)

- Proficiency with databases, Python, JavaScript, and SQL query optimization

- Familiarity with Concur and Coupa functionality

- Strong debugging, problem-solving, and requirement-gathering skills

- Excellent communication skills and ability to work with cross-functional business teams.

Preferred Skills:

- Experience with integration design patterns and frameworks

- Exposure to CI/CD pipelines for integration deployments

- Knowledge of business and operations practices in financial systems and finance teams


Please explore more at https://www.cloudsufi.com/.

Role Overview:

As a Senior Data Scientist / AI Engineer, you will be a key player in our technical leadership. You will be responsible for designing, developing, and deploying sophisticated AI and Machine Learning solutions, with a strong emphasis on Generative AI and Large Language Models (LLMs). You will architect and manage scalable AI microservices, drive research into state-of-the-art techniques, and translate complex business requirements into tangible, high-impact products. This role requires a blend of deep technical expertise, strategic thinking, and leadership.

Key Responsibilities:

- Architect & Develop AI Solutions: Design, build, and deploy robust and scalable machine learning models, with a primary focus on Natural Language Processing (NLP), Generative AI, and LLM-based Agents.

- Build AI Infrastructure: Create and manage AI-driven microservices using frameworks like Python FastAPI, ensuring high performance and reliability.

- Lead AI Research & Innovation: Stay abreast of the latest advancements in AI/ML. Lead research initiatives to evaluate and implement state-of-the-art models and techniques for performance and cost optimization.

- Solve Business Problems: Collaborate with product and business teams to understand challenges and develop data-driven solutions that create significant business value, such as building business rule engines or predictive classification systems.

- End-to-End Project Ownership: Take ownership of the entire lifecycle of AI projects—from ideation, data processing, and model development to deployment, monitoring, and iteration on cloud platforms.

- Team Leadership & Mentorship: Lead learning initiatives within the engineering team, mentor junior data scientists and engineers, and establish best practices for AI development.

- Cross-Functional Collaboration: Work closely with software engineers to integrate AI models into production systems and contribute to the overall system architecture.

Required Skills and Qualifications

- Master’s (M.Tech.) or Bachelor's (B.Tech.) degree in Computer Science, Artificial Intelligence, Information Technology, or a related field.

- 6+ years of professional experience in a Data Scientist, AI Engineer, or related role.

- Expert-level proficiency in Python and its core data science libraries (e.g., PyTorch, Hugging Face Transformers, Pandas, scikit-learn).

- Demonstrable, hands-on experience building and fine-tuning Large Language Models (LLMs) and implementing Generative AI solutions.

- Proven experience in developing and deploying scalable systems on cloud platforms, particularly AWS. Experience with GCP is a plus.

- Strong background in Natural Language Processing (NLP), including experience with multilingual models and transcription.

- Experience with containerization technologies, specifically Docker.

- Solid understanding of software engineering principles and experience building APIs and microservices.

Preferred Qualifications

- A strong portfolio of projects. A track record of publications in reputable AI/ML conferences is a plus.

- Experience with full-stack development (Node.js, Next.js) and various database technologies (SQL, MongoDB, Elasticsearch).

- Familiarity with setting up and managing CI/CD pipelines (e.g., Jenkins).

- Proven ability to lead technical teams and mentor other engineers.

- Experience developing custom tools or packages for data science workflows.


Reporting to: Solution Architect / Program Manager / COE Head

Location: Noida, Delhi NCR

Shift: Normal day shift with some overlap with US time zones

Experience: 4-7 years

Education: BTech / BE / MCA / MSc Computer Science

Industry: Product Engineering Services or Enterprise Software Companies

Primary Skills - Java 8/9, Core Java, design patterns (beyond Singleton & Factory), web services development (REST/SOAP), XML & JSON manipulation, CI/CD.

Secondary Skills - Jenkins, Kubernetes, Google Cloud Platform (GCP), SAP JCo library

Certifications (Optional): OCPJP (Oracle Certified Professional Java Programmer) / Google Professional Cloud Developer

Required Experience:

● Must have integration component development experience using Java 8/9 technologies and service-oriented architecture (SOA)

● Must have in-depth knowledge of design patterns and integration architecture

● Experience developing solutions on Google Cloud Platform will be an added advantage.

● Should have good hands-on experience with software engineering tools such as Eclipse, NetBeans, JIRA, Confluence, Bitbucket, SVN, etc.

● Should be well versed in current technology trends in IT solutions, e.g., cloud platform development, DevOps, low-code solutions, and intelligent automation

Good to Have:

● Experience developing 3-4 integration adapters/connectors for enterprise applications (ERP, CRM, HCM, SCM, Billing, etc.) using industry-standard frameworks and methodologies following Agile/Scrum

Non-Technical/Behavioral Competencies Required:

● Must have worked with US/Europe-based clients in an onsite/offshore delivery model

● Should have very good verbal and written communication, technical articulation, listening and presentation skills

● Should have proven analytical and problem-solving skills

● Should have demonstrated effective task prioritization, time management and internal/external stakeholder management skills

● Should be a quick learner, self-starter, go-getter and team player

● Should have experience working under stringent deadlines in a matrix organization structure

● Should have demonstrated appreciable Organizational Citizenship Behavior (OCB) in past organizations

Job Responsibilities:

● Write design specifications and user stories for the assigned functionalities

● Develop assigned components / classes and assist QA team in writing the test cases

● Create and maintain coding best practices and do peer code / solution reviews

● Participate in daily Scrum calls, sprint planning, retrospectives, and demo meetings

● Surface technical, design, and architectural challenges and risks during execution, and develop action plans to mitigate or avert the identified risks

● Comply with development processes, documentation templates and tools prescribed by CLOUDSUFI and/or its clients

● Work with other teams and architects in the organization and assist them with technical issues, demos, POCs, and proposal writing for prospective clients

● Contribute to the creation of a knowledge repository, reusable assets/solution accelerators, and IP

● Provide feedback to junior developers and be a coach and mentor for them

● Provide training sessions on the latest technologies and topics to other employees in the organization

● Participate in organization development activities from time to time: interviews, CSR/employee engagement activities, participation in business events/conferences, and implementation of new policies, systems and procedures as decided by the management team.

Similar companies

About the company

CAW Studios is a product development studio. We build true product teams for our clients. Each team is a small, well-balanced group of geeks and a product manager that together produce relevant and high-quality products. We use data to make decisions, bringing big data and analysis to software development. We believe the product development process is broken because most studios operate as IT services firms. We operate like a software factory that applies manufacturing principles of product development to software.


About the company

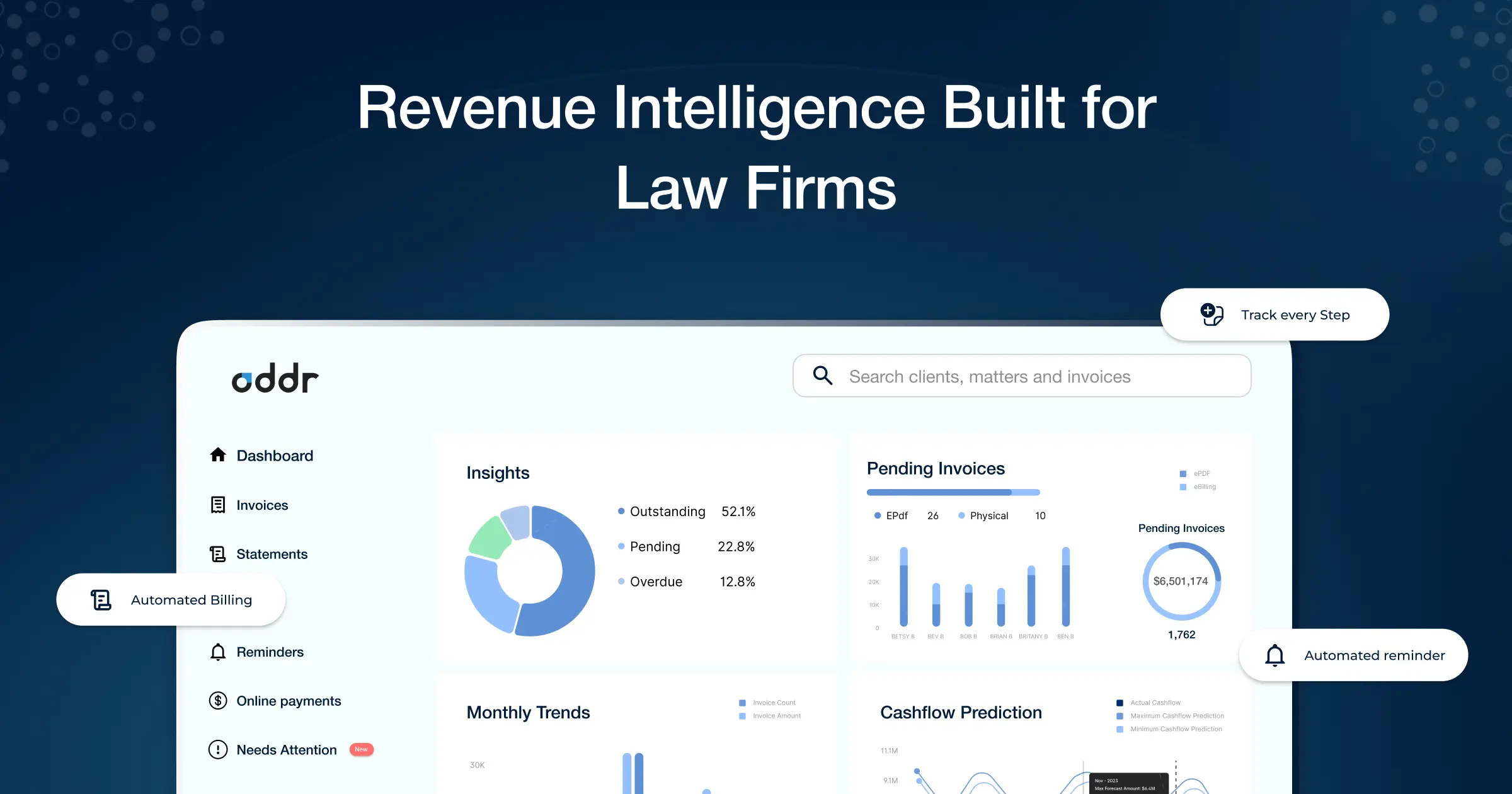

Oddr is the legal industry’s only AI-powered invoice-to-cash platform. Oddr’s AI-powered platform centralizes, streamlines and accelerates every step of billing and collections, from bill preparation and delivery to collections and reconciliation, enabling new possibilities in analytics, forecasting, and client service that eliminate revenue leakage and increase profitability in the billing and collections lifecycle.

www.oddr.com


About the company

Integra Magna is a design and tech-first creative studio where designers, developers, and strategists collaborate to build meaningful brands and digital experiences. With 10+ years of industry experience and work across 100+ global brands, primarily in the UAE and the USA, the team focuses on end-to-end branding, UI/UX design, and website development. The studio’s work is recognised with a 4.9 rating on Clutch and an Awwwards honour, reflecting a strong culture of quality, ownership, and craft.


About the company

Upland Software is a global cloud-software company that helps businesses “work smarter” by offering purpose-built, AI-enabled applications that drive revenue, reduce costs and deliver immediate value.

Founded in 2010 and headquartered in Austin, Texas, the company supports thousands of customers across industries—from enterprise to SMB—serving sectors such as manufacturing, retail, government, education, and technology.

Their product portfolio (20+ proven cloud products) spans knowledge-management, content lifecycle automation, digital marketing, project and work management, and contact-centre solutions—all enhanced by embedded AI.

Upland is committed to modern, flexible work, promoting a culture where remote, global teams collaborate and grow together.
