CoffeeBeans

https://coffeebeans.io

About

CoffeeBeans Consulting is a technology partner dedicated to driving business transformation. With deep expertise in Cloud, Data, MLOps, AI, infrastructure services, application modernization, Blockchain, and Big Data, we help organizations tackle complex challenges and seize growth opportunities in today’s fast-paced digital landscape. We’re more than just a tech service provider; we’re a catalyst for meaningful change.

Candid answers by the company

CoffeeBeans Consulting, founded in 2017, is a high-end technology consulting firm that helps businesses build better products and improve delivery quality through a mix of engineering, product, and process expertise. They work across domains to deliver scalable backend systems, data engineering pipelines, and AI-driven solutions, often using modern stacks such as Java, Spring Boot, Python, Spark, Snowflake, Azure, and AWS. With a strong focus on clean architecture, performance optimization, and practical problem-solving, CoffeeBeans partners with clients on both internal and external projects, driving meaningful business outcomes through technical excellence.

Jobs at CoffeeBeans

No jobs found

Similar companies

About the company

Founded in 2004, Inteliment helps some of the most forward-thinking enterprises worldwide derive maximum business impact from their data. With 20+ years in analytics, we help businesses harness deep expertise and the latest technologies to drive innovation, sharpen their competitive edge, and stay future-ready.

Inteliment is recognized as a leading provider of data-driven analytical solutions and services in visual and predictive analytics, data science, IoT, mobility, and artificial intelligence. We strive for the success of our customers through innovation, technology, and partnerships.

Inteliment operates its Delivery & IP Centers in India and Australia and through its group companies in Singapore, Finland and USA.

Jobs

0

About the company

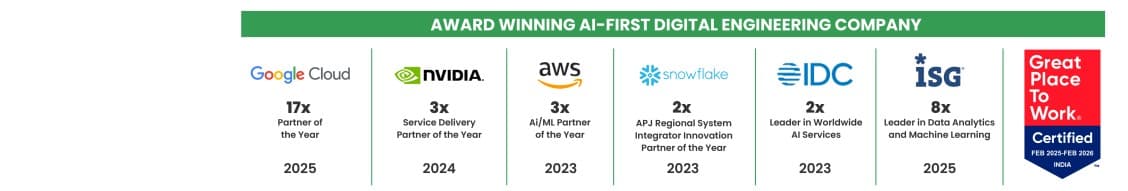

Quantiphi is an award-winning, AI-first digital engineering company driven by the desire to reimagine and realize transformational opportunities at the heart of the business. Since its inception in 2013, Quantiphi has solved some of the toughest and most complex business problems by combining deep industry experience, disciplined cloud and data-engineering practices, and cutting-edge artificial intelligence research to achieve accelerated and quantifiable business results.

Jobs

4

About the company

Welcome to Neogencode Technologies, an IT services and consulting firm that provides innovative solutions to help businesses achieve their goals. Our team of experienced professionals is committed to providing tailored services to meet the specific needs of each client. Our comprehensive range of services includes software development, web design and development, mobile app development, cloud computing, cybersecurity, digital marketing, and skilled resource acquisition. We specialize in helping our clients find the right skilled resources to meet their unique business needs. At Neogencode Technologies, we prioritize communication and collaboration with our clients, striving to understand their unique challenges and provide customized solutions that exceed their expectations. We value long-term partnerships with our clients and are committed to delivering exceptional service at every stage of the engagement. Whether you are a small business looking to improve your processes or a large enterprise seeking to stay ahead of the competition, Neogencode Technologies has the expertise and experience to help you succeed. Contact us today to learn more about how we can support your business growth and provide skilled resources to meet your business needs.

Jobs

357

About the company

Peak Hire Solutions is a leading recruitment firm that provides its clients with innovative IT and non-IT recruitment solutions. We pride ourselves on our creativity, quality, and professionalism. Join our team and be a part of shaping the future of recruitment.

Jobs

254
