What we look for:

As a DevOps Developer, you will contribute to a thriving and growing AIGovernance Engineering team. You will work in a Kubernetes-based microservices environment to support our bleeding-edge cloud services. This will include custom solutions, as well as open source DevOps tools (build and deploy automation, monitoring and data gathering for our software delivery pipeline). You will also be contributing to our continuous improvement and continuous delivery while increasing maturity of DevOps and agile adoption practices.

Responsibilities:

- Ability to deploy software using orchestrators, scripts, and automation on hybrid and public clouds such as AWS

- Ability to write shell, Python, or other Unix scripts

- Working knowledge of Docker and Kubernetes

- Ability to create pipelines using Jenkins or another CI/CD tool, and a GitOps tool such as ArgoCD

- Working knowledge of Git as a source control system, and of a defect tracking system

- Ability to debug and troubleshoot deployment issues

- Ability to use tools for faster resolution of issues

- Excellent communication and soft skills

- Passionate, with the ability to work and deliver in a multi-team environment

- Good team player

- Flexible and quick learner

- Ability to write Dockerfiles, Kubernetes YAML files, and Helm charts

- Experience with monitoring tools like Nagios, Prometheus and visualisation tools such as Grafana.

- Ability to write Ansible and Terraform scripts

- Linux system administration experience

- Effective cross-functional leadership skills: working with engineering and operational teams to ensure systems are secure, scalable, and reliable.

- Ability to review deployment and operational environments, i.e., execute initiatives to reduce failure, troubleshoot issues across the entire infrastructure stack, expand monitoring capabilities, and manage technical operations.

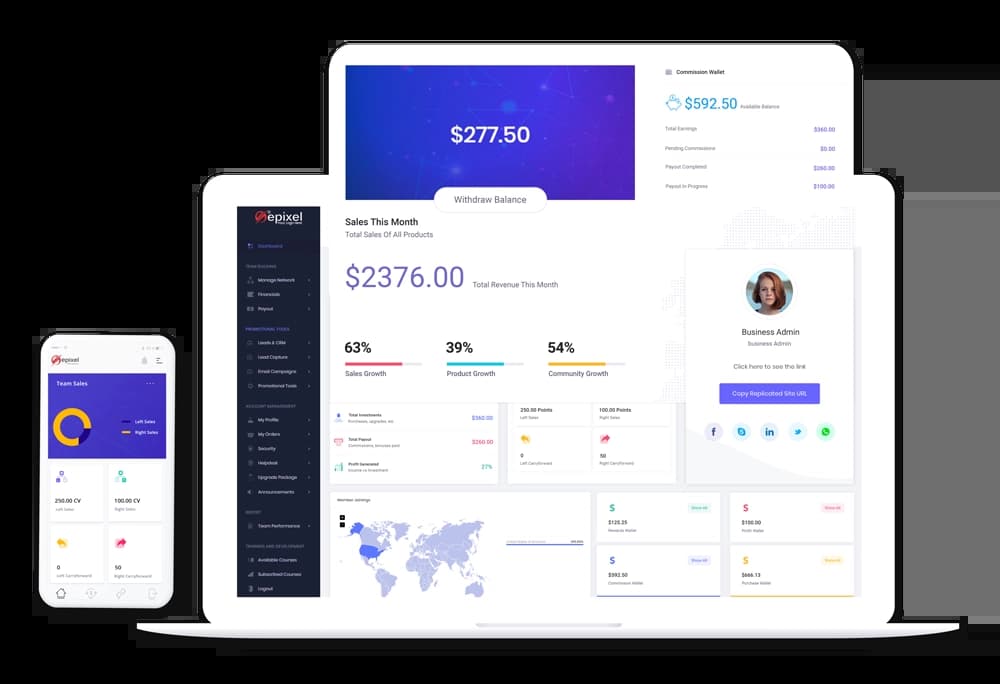

About Epixel MLM Software

Epixel MLM Software offers an advanced and sophisticated platform that guarantees quick business growth opportunities and helps to reach new customers and markets. Integrating your MLM business with Epixel MLM software provides you a stable back-office and commission processing system with features and tools that ensure maximum distributor engagement. Developed with the latest technologies and built-in features, Epixel can assure fast and real-time processing to return profitable results quickly. Epixel MLM system also supports custom requirements and integrations to adapt your business to the evolving requirements.

Want to join us? Contact our team at https://www.epixelmlmsoftware.com/contact-us

About Kanerika:

Kanerika Inc. is a premier global software products and services firm that specializes in providing innovative solutions and services for data-driven enterprises. Our focus is to empower businesses to achieve their digital transformation goals and maximize their business impact through the effective use of data and AI.

We leverage cutting-edge technologies in data analytics, data governance, AI/ML, and GenAI/LLM, along with industry best practices, to deliver custom solutions that help organizations optimize their operations, enhance customer experiences, and drive growth.

Awards and Recognitions:

Kanerika has won several awards over the years, including:

1. Best Place to Work 2023 by Great Place to Work®

2. Top 10 Most Recommended RPA Start-Ups in 2022 by RPA Today

3. NASSCOM Emerge 50 Award in 2014

4. Frost & Sullivan India 2021 Technology Innovation Award for its Kompass composable solution architecture

5. Kanerika has also been recognized for its commitment to customer privacy and data security, having achieved ISO 27701, SOC2, and GDPR compliances.

Working for us:

Kanerika is rated 4.6/5 on Glassdoor, for many good reasons. We truly value our employees' growth, well-being, and diversity, and people’s experiences bear this out. At Kanerika, we offer a host of enticing benefits that create an environment where you can thrive both personally and professionally. From our inclusive hiring practices and mandatory training on creating a safe work environment to our flexible working hours and generous parental leave, we prioritize the well-being and success of our employees.

Our commitment to professional development is evident through our mentorship programs, job training initiatives, and support for professional certifications. Additionally, our company-sponsored outings and various time-off benefits ensure a healthy work-life balance. Join us at Kanerika and become part of a vibrant and diverse community where your talents are recognized, your growth is nurtured, and your contributions make a real impact. See the benefits section below for the perks you’ll get while working for Kanerika.

About the role:

As a DevOps Engineer, you will play a critical role in bridging the gap between development, operations, and security teams to enable fast, secure, and reliable software delivery. Drawing on 5+ years of hands-on experience, you will be responsible for designing, implementing, and maintaining scalable, automated, and cloud-native infrastructure solutions.

Key Responsibilities:

- 5+ years of hands-on experience in DevOps or Cloud Engineering roles.

- Strong expertise in at least one public cloud provider (AWS / Azure / GCP).

- Proficiency in Infrastructure as Code (IaC) tools (Terraform, Ansible, Pulumi, or CloudFormation).

- Solid experience with Kubernetes and containerized applications.

- Strong knowledge of CI/CD tools (Jenkins, GitHub Actions, GitLab CI, Azure DevOps, ArgoCD).

- Scripting/programming skills in Python, Shell, or Go for automation.

- Hands-on experience with monitoring, logging, and incident management.

- Familiarity with security practices in DevOps (secrets management, IAM, vulnerability scanning).

Employee Benefits:

1. Culture:

- Open Door Policy: Encourages open communication and accessibility to management.

- Open Office Floor Plan: Fosters a collaborative and interactive work environment.

- Flexible Working Hours: Allows employees to have flexibility in their work schedules.

- Employee Referral Bonus: Rewards employees for referring qualified candidates.

- Appraisal Process Twice a Year: Provides regular performance evaluations and feedback.

2. Inclusivity and Diversity:

- Hiring practices that promote diversity: Ensures a diverse and inclusive workforce.

- Mandatory POSH training: Promotes a safe and respectful work environment.

3. Health Insurance and Wellness Benefits:

- GMC and Term Insurance: Offers medical coverage and financial protection.

- Health Insurance: Provides coverage for medical expenses.

- Disability Insurance: Offers financial support in case of disability.

4. Child Care & Parental Leave Benefits:

- Company-sponsored family events: Creates opportunities for employees and their families to bond.

- Generous Parental Leave: Allows parents to take time off after the birth or adoption of a child.

- Family Medical Leave: Offers leave for employees to take care of family members' medical needs.

5. Perks and Time-Off Benefits:

- Company-sponsored outings: Organizes recreational activities for employees.

- Gratuity: Provides a monetary benefit as a token of appreciation.

- Provident Fund: Helps employees save for retirement.

- Generous PTO: Offers more than the industry standard for paid time off.

- Paid sick days: Allows employees to take paid time off when they are unwell.

- Paid holidays: Gives employees paid time off for designated holidays.

- Bereavement Leave: Provides time off for employees to grieve the loss of a loved one.

6. Professional Development Benefits:

- L&D with FLEX- Enterprise Learning Repository: Provides access to a learning repository for professional development.

- Mentorship Program: Offers guidance and support from experienced professionals.

- Job Training: Provides training to enhance job-related skills.

- Professional Certification Reimbursements: Assists employees in obtaining professional certifications.

- Promote from Within: Encourages internal growth and advancement opportunities.

About the Job

This is a full-time role for a Lead DevOps Engineer at Spark Eighteen. We are seeking an experienced DevOps professional to lead our infrastructure strategy, design resilient systems, and drive continuous improvement in our deployment processes. In this role, you will architect scalable solutions, mentor junior engineers, and ensure the highest standards of reliability and security across our cloud infrastructure. The job location is flexible with preference for the Delhi NCR region.

Responsibilities

- Lead and mentor the DevOps/SRE team

- Define and drive DevOps strategy and roadmaps

- Oversee infrastructure automation and CI/CD at scale

- Collaborate with architects, developers, and QA teams to integrate DevOps practices

- Ensure security, compliance, and high availability of platforms

- Own incident response, postmortems, and root cause analysis

- Budgeting, team hiring, and performance evaluation

Requirements

Technical Skills

- Bachelor's or Master's degree in Computer Science, Engineering, or related field.

- 7+ years of professional DevOps experience with demonstrated progression.

- Strong architecture and leadership background

- Deep hands-on knowledge of infrastructure as code, CI/CD, and cloud

- Proven experience with monitoring, security, and governance

- Effective stakeholder and project management

- Experience with tools like Jenkins, ArgoCD, Terraform, Vault, ELK, etc.

- Strong understanding of business continuity and disaster recovery

Soft Skills

- Cross-functional communication excellence with ability to lead technical discussions.

- Strong mentorship capabilities for junior and mid-level team members.

- Advanced strategic thinking and ability to propose innovative solutions.

- Excellent knowledge transfer skills through documentation and training.

- Ability to understand and align technical solutions with broader business strategy.

- Proactive problem-solving approach with focus on continuous improvement.

- Strong leadership skills in guiding team performance and technical direction.

- Effective collaboration across development, QA, and business teams.

- Ability to make complex technical decisions with minimal supervision.

- Strategic approach to risk management and mitigation.

What We Offer

- Professional Growth: Continuous learning opportunities through diverse projects and mentorship from experienced leaders

- Global Exposure: Work with clients from 20+ countries, gaining insights into different markets and business cultures

- Impactful Work: Contribute to projects that make a real difference, with solutions generating over $1B in revenue

- Work-Life Balance: Flexible arrangements that respect personal wellbeing while fostering productivity

- Career Advancement: Clear progression pathways as you develop skills within our growing organization

- Competitive Compensation: Attractive salary packages that recognize your contributions and expertise

Our Culture

At Spark Eighteen, our culture centers on innovation, excellence, and growth. We believe in:

- Quality-First: Delivering excellence rather than just quick solutions

- True Partnership: Building relationships based on trust and mutual respect

- Communication: Prioritizing clear, effective communication across teams

- Innovation: Encouraging curiosity and creative approaches to problem-solving

- Continuous Learning: Supporting professional development at all levels

- Collaboration: Combining diverse perspectives to achieve shared goals

- Impact: Measuring success by the value we create for clients and users

Apply Here - https://tinyurl.com/t6x23p9b

Key Qualifications:

- At least 2 years of hands-on experience with cloud infrastructure on AWS or GCP

- Exposure to configuration management and orchestration tools at scale (e.g. Terraform, Ansible, Packer)

- Knowledge of DevOps tools (e.g. Jenkins, Groovy, and Gradle)

- Familiarity with monitoring and alerting tools (e.g. CloudWatch, ELK stack, Prometheus)

- Proven ability to work independently or as an integral member of a team

Preferred Skills:

- Familiarity with standard IT security practices such as encryption, credentials and key management

- Proven ability to learn various coding languages (e.g. Java, Python) to support DevOps operations and cloud transformation

- Familiarity with web standards (e.g. REST APIs, web security mechanisms)

- Multi-cloud management experience with GCP / Azure

- Experience in performance tuning, service outage management, and troubleshooting

Senior DevOps Engineer

Experience: Minimum 5 years of relevant experience

Key Responsibilities:

• Hands-on experience with AWS tools, CI/CD pipelines, and Red Hat Linux

• Strong expertise in DevOps practices and principles

• Experience with infrastructure automation and configuration management

• Excellent problem-solving skills and attention to detail

Nice to Have:

• Red Hat certification

Key Responsibilities:

- Work with the development team to plan, execute and monitor deployments

- Capacity planning for product deployments

- Adopt best practices for deployment and monitoring systems

- Ensure the SLAs for performance and uptime are met

- Constantly monitor systems and suggest changes to improve performance and decrease costs.

- Ensure the highest standards of security

Key Competencies (Functional):

- Proficiency in coding in at least one scripting language (Bash, Python, etc.)

- Has personally managed a fleet of servers (> 15)

- Understands the different environments: production, deployment, and staging

- Has worked in microservice / service-oriented architecture systems

- Has worked with automated deployment systems – Ansible / Chef / Puppet.

- Can write MySQL queries

- Good knowledge of at least one language (C#, Java, Python, Go, PHP, Node.js)

- Has sufficient experience with application and infrastructure architectures

- Design and plan cloud solution architecture

- Design for security, networking, and compliance

- Analyze and optimize technical and business processes

- Ensure solution and operational reliability

- Manage and provision cloud infrastructure

- Manage IaaS, PaaS, and SaaS solutions

- Design strategies around cloud governance, migration, Cloud operations and DevOps

- Design highly scalable, available, and reliable cloud applications

- Build and test applications

- Deploy applications on cloud

- Integration with cloud services

Certification:

- Architect level certificate of any cloud (AWS, GCP, Azure)

Acceldata is creating the data observability space. We make it possible for data-driven enterprises to effectively monitor, discover, and validate data platforms at petabyte scale. Our customers are Fortune 500 companies, including Asia's largest telecom company, an Indian unicorn fintech startup, and many more. We are lean, hungry, customer-obsessed, and growing fast. Our Solutions team values productivity, integrity, and pragmatism. We provide a flexible, remote-friendly work environment.

We are building software that provides insights into companies' data operations and allows them to focus on delivering data reliably, with speed and effectiveness. Join us in building an industry-leading data observability platform that focuses on ensuring data reliability across the full spectrum (compute, data, and pipeline) of a cloud or on-premise data platform.

Position Summary

This role will support the customer implementation of a data quality and reliability product. The candidate is expected to install the product in the client environment, manage proofs of concept with prospects, become a product expert, and troubleshoot post-installation production issues. The role involves significant interaction with the client data engineering team, so the candidate is expected to have good communication skills.

Required experience

- 6-7 years of experience providing engineering support to data domains, pipelines, and data engineers.

- Experience in troubleshooting data issues, analyzing end-to-end data pipelines, and working with users to resolve issues

- Experience setting up enterprise security solutions including setting up active directories, firewalls, SSL certificates, Kerberos KDC servers, etc.

- Basic understanding of SQL

- Experience working with technologies like S3; Kubernetes experience preferred.

- Databricks/Hadoop/Kafka experience preferred but not required

About the Company

Blue Sky Analytics is a Climate Tech startup that combines the power of AI and satellite data to aid in the creation of a global environmental data stack. Our funders include Beenext and Rainmatter. Over the next 12 months, we aim to expand to 10 environmental datasets spanning water, land, heat, and more!

We are looking for a DevOps Engineer who can help us build the infrastructure required to handle huge datasets at scale. Primarily, you will work with AWS services like EC2, Lambda, ECS, containers, etc. As part of our core development crew, you’ll be figuring out how to deploy applications with high availability and fault tolerance, along with a monitoring solution that has alerts for multiple microservices and pipelines. Come save the planet with us!

Your Role

- Build applications at scale that can go up and down on command.

- Manage a cluster of microservices talking to each other.

- Build pipelines for huge data ingestion, processing, and dissemination.

- Optimize services for low cost and high efficiency.

- Maintain a highly available and scalable PSQL database cluster.

- Maintain an alerting and monitoring system using Prometheus, Grafana, and Elasticsearch.

Requirements

- 1-4 years of work experience.

- Strong emphasis on Infrastructure as Code: CloudFormation, Terraform, Ansible.

- CI/CD concepts and implementation using CodePipeline and GitHub Actions.

- Advanced command of AWS services like IAM, EC2, ECS, Lambda, S3, etc.

- Advanced containerization: Docker, Kubernetes, ECS.

- Experience with managed services like database clusters and distributed services on EC2.

- Self-starters and curious folks who don't need to be micromanaged.

- Passionate about Blue Sky Climate Action and working with data at scale.

Benefits

- Work from anywhere: Work by the beach or from the mountains.

- Open source at heart: We are building a community that you can use, contribute to, and collaborate with.

- Own a slice of the pie: Possibility of becoming an owner by investing in ESOPs.

- Flexible timings: Fit your work around your lifestyle.

- Comprehensive health cover: Health cover for you and your dependents to keep you tension-free.

- Work Machine of choice: Buy a device and own it after completing a year at BSA.

- Quarterly Retreats: Yes, there's work, but then there's all the non-work fun, aka the retreat!

- Yearly vacations: Take time off to rest and get ready for the next big assignment by using your paid leave.

Experience and Education

• Bachelor’s degree in engineering or equivalent.

Work experience

• 4+ years of infrastructure and operations management experience at a global scale.

• 4+ years of experience in operations management, including monitoring, configuration management, automation, backup, and recovery.

• Broad experience in the data center, networking, storage, server, Linux, and cloud technologies.

• Broad knowledge of release engineering: build, integration, deployment, and provisioning, including familiarity with different upgrade models.

• Demonstrable experience executing, or being involved in, a complete end-to-end project lifecycle.

Skills

• Excellent communication and teamwork skills – both oral and written.

• Skilled at collaborating effectively with both Operations and Engineering teams.

• Process and documentation oriented.

• Attention to detail. Excellent problem-solving skills.

• Ability to simplify complex situations and lead calmly through periods of crisis.

• Experience implementing and optimizing operational processes.

• Ability to lead small teams: provide technical direction, prioritize tasks to achieve goals, identify dependencies, report on progress.

Technical Skills

• Strong fluency in Linux environments is a must.

• Good SQL skills.

• Demonstrable scripting/programming skills (Bash, Python, Ruby, or Go) and the ability to develop custom tool integrations between multiple systems using their published APIs / CLIs.

• L3, load balancer, routing, and VPN configuration.

• Kubernetes configuration and management.

• Expertise using version control systems such as Git.

• Configuration and maintenance of database technologies such as Cassandra, MariaDB, Elastic.

• Design and configuration of open-source monitoring systems such as Nagios, Grafana, or Prometheus.

• Design and configuration of log pipeline technologies such as ELK (Elasticsearch, Logstash, Kibana), Fluentd, Grok, rsyslog, Google Stackdriver.

• Using and writing modules for Infrastructure as Code tools such as Ansible, Terraform, Helm, Kustomize.

• Strong understanding of virtualization and containerization technologies such as VMware, Docker, and Kubernetes.

• Specific experience with Google Cloud Platform or Amazon EC2 deployments and virtual machines.