Auxo AI

https://auxoai.com

Jobs at Auxo AI

AuxoAI is seeking a skilled and experienced Data Engineer to join our dynamic team. The ideal candidate will have 6+ years of prior experience in data engineering, with a strong background in AWS (Amazon Web Services) technologies. This role offers an exciting opportunity to work on diverse projects, collaborating with cross-functional teams to design, build, and optimize data pipelines and infrastructure.

**Location: Bangalore, Hyderabad, Mumbai, and Gurgaon**

**Responsibilities:**

- Design, develop, and maintain scalable data pipelines and ETL processes leveraging AWS services such as S3, Glue, EMR, Lambda, and Redshift.

- Collaborate with data scientists and analysts to understand data requirements and implement solutions that support analytics and machine learning initiatives.

- Optimize data storage and retrieval mechanisms to ensure performance, reliability, and cost-effectiveness.

- Implement data governance and security best practices to ensure compliance and data integrity.

- Troubleshoot and debug data pipeline issues, providing timely resolution and proactive monitoring.

- Stay abreast of emerging technologies and industry trends, recommending innovative solutions to enhance data engineering capabilities.

**Requirements**

- Bachelor's or Master's degree in Computer Science, Engineering, or a related field.

- 6+ years of prior experience in data engineering, with a focus on designing and building data pipelines.

- Proficiency in AWS services, particularly S3, Glue, EMR, Lambda, and Redshift.

- Strong programming skills in languages such as Python, Java, or Scala.

- Experience with SQL and NoSQL databases, data warehousing concepts, and big data technologies.

- Familiarity with containerization technologies (e.g., Docker, Kubernetes) and orchestration tools (e.g., Apache Airflow) is a plus.

AuxoAI is seeking a skilled and experienced Data Engineer to join our dynamic team. The ideal candidate will have 3-7 years of prior experience in data engineering, with a strong background in working on modern data platforms. This role offers an exciting opportunity to work on diverse projects, collaborating with cross-functional teams to design, build, and optimize data pipelines and infrastructure.

**Location: Bangalore, Hyderabad, Mumbai, and Gurgaon**

**Responsibilities:**

- Design, build, and operate scalable on-premises or cloud data architecture.

- Analyze business requirements and translate them into technical specifications.

- Design, develop, and implement data engineering solutions using dbt on cloud platforms (Snowflake, Databricks).

- Design, develop, and maintain scalable data pipelines and ETL processes.

- Collaborate with data scientists and analysts to understand data requirements and implement solutions that support analytics and machine learning initiatives.

- Optimize data storage and retrieval mechanisms to ensure performance, reliability, and cost-effectiveness.

- Implement data governance and security best practices to ensure compliance and data integrity.

- Troubleshoot and debug data pipeline issues, providing timely resolution and proactive monitoring.

- Stay abreast of emerging technologies and industry trends, recommending innovative solutions to enhance data engineering capabilities.

**Requirements**

- Bachelor's or Master's degree in Computer Science, Engineering, or a related field.

- Overall 3+ years of prior experience in data engineering, with a focus on designing and building data pipelines.

- Experience working with dbt to implement end-to-end data engineering processes on Snowflake and Databricks.

- Comprehensive understanding of the Snowflake and Databricks ecosystems.

- Strong programming skills in languages like SQL and Python or PySpark.

- Experience with data modeling, ETL processes, and data warehousing concepts.

- Familiarity with implementing CI/CD processes or orchestration tools is a plus.

AuxoAI is looking for a highly motivated and detail-oriented Anaplan Modeler with foundational knowledge of Supply Chain Planning. You will work closely with Solution Architects and Senior Modelers to support the design, development, and optimization of Anaplan models for enterprise planning needs across industries.

This role offers a strong growth path toward Senior Modeler and Solution Architect roles and a chance to contribute to cutting-edge planning projects.

**Responsibilities:**

- Build and maintain Anaplan models, modules, dashboards, and actions under defined specifications.

- Execute data loading, validation, and basic transformation within Anaplan.

- Optimize existing models for better performance and usability.

- Apply Anaplan best practices and methodology in daily work.

- Use Anaplan Connect or similar tools for data integration.

- Assist in creating model documentation, user guides, and test plans.

- Support testing and troubleshooting activities.

- Develop a basic understanding of supply chain processes (demand, supply, inventory planning).

- Collaborate effectively with internal teams and business stakeholders.

- Participate in training and skill-building programs within the Anaplan ecosystem.

**Requirements**

- Bachelor’s or Master’s in Engineering, Supply Chain, Operations Research, or a related field.

- 3+ years of experience in a business/IT role with hands-on Anaplan modelling exposure.

- Anaplan Model Builder Certification is mandatory.

- Basic understanding of supply chain concepts like demand, supply, and inventory planning.

- Strong problem-solving and logical thinking skills.

- Proficiency in Excel or basic data analysis tools.

- Excellent attention to detail and communication skills.

- Enthusiasm to grow within the Anaplan and supply chain domain.

Responsibilities:

- Build and optimize batch and streaming data pipelines using Apache Beam (Dataflow)

- Design and maintain BigQuery datasets using best practices in partitioning, clustering, and materialized views

- Develop and manage Airflow DAGs in Cloud Composer for workflow orchestration

- Implement SQL-based transformations using Dataform (or dbt)

- Leverage Pub/Sub for event-driven ingestion and Cloud Storage for raw/lake layer data architecture

- Drive engineering best practices across CI/CD, testing, monitoring, and pipeline observability

- Partner with solution architects and product teams to translate data requirements into technical designs

- Mentor junior data engineers and support knowledge-sharing across the team

- Contribute to documentation, code reviews, sprint planning, and agile ceremonies

Requirements

- 5+ years of hands-on experience in data engineering, with at least 2 years on GCP

- Proven expertise in BigQuery, Dataflow (Apache Beam), Cloud Composer (Airflow)

- Strong programming skills in Python and/or Java

- Experience with SQL optimization, data modeling, and pipeline orchestration

- Familiarity with Git, CI/CD pipelines, and data quality monitoring frameworks

- Exposure to Dataform, dbt, or similar tools for ELT workflows

- Solid understanding of data architecture, schema design, and performance tuning

- Excellent problem-solving and collaboration skills

Bonus Skills:

- GCP Professional Data Engineer certification

- Experience with Vertex AI, Cloud Functions, Dataproc, or real-time streaming architectures

- Familiarity with data governance tools (e.g., Atlan, Collibra, Dataplex)

- Exposure to Docker/Kubernetes, API integration, and infrastructure-as-code (Terraform)

Key Responsibilities

- Design and implement Dremio lakehouse architecture on cloud (AWS/Azure/Snowflake/Databricks ecosystem).

- Define data ingestion, curation, and semantic modeling strategies to support analytics and AI workloads.

- Optimize Dremio reflections, caching, and query performance for diverse data consumption patterns.

- Collaborate with data engineering teams to integrate data sources via APIs, JDBC, Delta/Parquet, and object storage layers (S3/ADLS).

- Establish best practices for data security, lineage, and access control aligned with enterprise governance policies.

- Support self-service analytics by enabling governed data products and semantic layers.

- Develop reusable design patterns, documentation, and standards for Dremio deployment, monitoring, and scaling.

- Work closely with BI and data science teams to ensure fast, reliable, and well-modeled access to enterprise data.

Qualifications

- Bachelor’s or Master’s in Computer Science, Information Systems, or related field.

- 10+ years in data architecture and engineering, with 3+ years in Dremio or modern lakehouse platforms.

- Strong expertise in SQL optimization, data modeling, and performance tuning within Dremio or similar query engines (Presto, Trino, Athena).

- Hands-on experience with cloud storage (S3, ADLS, GCS), Parquet/Delta/Iceberg formats, and distributed query planning.

- Knowledge of data integration tools and pipelines (Airflow, dbt, Kafka, Spark, etc.).

- Familiarity with enterprise data governance, metadata management, and role-based access control (RBAC).

- Excellent problem-solving, documentation, and stakeholder communication skills.

Preferred:

- Experience integrating Dremio with BI tools (Tableau, Power BI, Looker) and data catalogs (Collibra, Alation, Purview).

- Exposure to Snowflake, Databricks, or BigQuery environments.

- Experience in high-tech, manufacturing, or enterprise data modernization programs.

Similar companies

About the company

As a prominent web development company in India, our core expertise lies in custom software development for web and mobile apps, tailored to each client’s needs and business workflow. We assist our clients every step of the way, from consultation and design through development and maintenance. Leave it all in the hands of our expert web developers and we will build you secure, innovative, and responsive web applications.

Jobs

20

About the company

The Story

Founded in 2011, Poshmark started with a simple yet powerful idea in Manish Chandra's garage. Along with co-founders Tracy Sun, Gautam Golwala, and Chetan Pungaliya, Chandra envisioned a platform that would revolutionize how people buy and sell fashion. The inspiration came from seeing the potential of the iPhone 4 to create meaningful connections in the shopping experience.

What They Do

Poshmark is not just another e-commerce platform – it's a social marketplace that brings together shopping and community. Think of it as a place where social media meets shopping, allowing users to buy, sell, and discover fashion, home goods, and accessories. With over 80 million users, the platform has become a go-to destination for both casual sellers and entrepreneurial individuals looking to build their own digital boutiques.

Growth & Achievements

- Started as a fashion-only platform and successfully expanded into home goods and electronics

- Raised over $160 million in venture funding

- Achieved a successful IPO in January 2021

- Recently launched innovative features like AI-powered visual search and livestream shopping

- Built a vibrant community of millions of buyers and sellers across the country

Today, under CEO Manish Chandra's leadership, Poshmark continues to innovate in the social commerce space, blending technology with community to create a unique shopping experience. The platform's success story is a testament to how combining social connections with commerce can create a powerful marketplace that resonates with modern consumers.

Jobs

7

About the company

Certa’s no-code platform makes it easy to digitize and manage the lifecycle of all your suppliers, partners, and customers. With automated onboarding, contract lifecycle management, and ESG management, Certa eliminates the procurement bottleneck and allows companies to onboard third-parties 3x faster.

Jobs

2

About the company

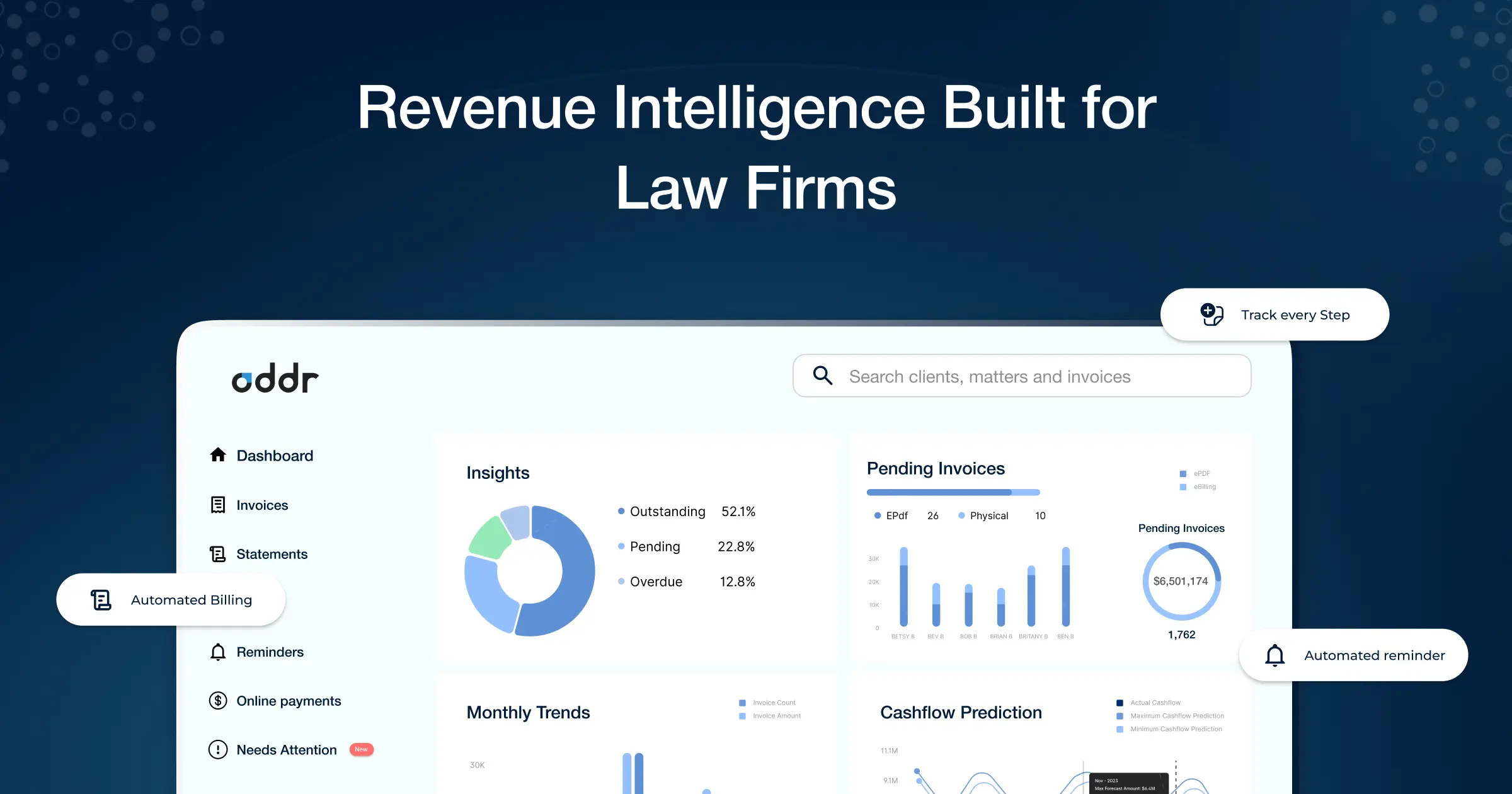

Oddr is the legal industry’s only AI-powered invoice-to-cash platform. Oddr’s platform centralizes, streamlines, and accelerates every step of billing and collections, from bill preparation and delivery to collections and reconciliation. This enables new possibilities in analytics, forecasting, and client service that eliminate revenue leakage and increase profitability across the billing and collections lifecycle.

www.oddr.com

Jobs

9

About the company

Ctruh is building the world’s first AI-powered Unified XR Commerce Studio, enabling brands to create immersive digital commerce experiences directly in the browser. The platform combines artificial intelligence with real-time 3D technology to transform how products are discovered, experienced, and purchased online.

Ctruh’s technology allows companies to instantly create virtual stores, AR try-ons, interactive 3D product pages, and mixed-reality marketing experiences without writing code or requiring specialized hardware. These experiences launch directly through a simple URL and work across web, mobile, and XR devices.

👥 About the Team

Ctruh is a deep-tech startup headquartered in Bengaluru and founded by Vinay Agastya. The team combines expertise across AI, XR, graphics engineering, and enterprise SaaS to build scalable infrastructure for immersive commerce.

Their mission is to democratize XR technology, making immersive digital experiences as easy to create as building a website.

🏆 Milestones

- Founded in 2022

- Built a no-code/low-code web-based 3D engine for XR experiences

- Platform powered by VersaAI, enabling instant text-to-3D asset creation

- Team of 40+ employees globally

- Achieved approximately $5M revenue in 2025

- Growing community of 39K+ LinkedIn followers

Jobs

4

About the company

Designbyte Studio is a digital design and development studio focused on building clean, modern, and reliable web experiences. We work closely with startups, creators, and growing businesses to design and develop websites, user interfaces, and digital products that are simple, functional, and easy to use.

Our approach is practical and detail-driven. We believe good design should be clear, purposeful, and aligned with real business goals. From concept to launch, we focus on quality, performance, and long-term value.

Jobs

1
