Tata Consultancy Services

https://tcs.com

Jobs at Tata Consultancy Services

• Knowledge of routing and switching technologies such as IGP, OSPF, EGP, BGP, STP, RSTP, VTP, VSS, vPC, vDC, MSTP, LACP and VLAN

• Knowledge of the data center, cloud and virtualization industry, including ACI and Nexus products

• Experience with LAN switching technologies such as STP, RSTP, VTP, VSS, vPC, vDC, MSTP, LACP, VLAN, VXLAN, VXLAN-EVPN, DCNM, OTV, FEX, FabricPath and LISP.

• Knowledge of Cisco switching platforms such as Catalyst 6500, 6800, 4500 and 3850, and Nexus 7K, 5K, 3K and 9K (standalone and ACI)

• Experience in the installation, configuration, testing and troubleshooting of Cisco routers and switches, and/or in designing network solutions based on them

The recruiter has not been active on this job recently. You may apply but please expect a delayed response.

Key Responsibilities:

- Design, develop, and implement websites and components within Adobe Experience Manager (AEM).

- Develop and customize AEM components, templates, dialogs, workflows, servlets, and OSGi services.

- Integrate AEM with external systems and third-party services (CRM, DAM, Analytics, etc.).

- Implement responsive front-end solutions using HTML5, CSS3, JavaScript, ReactJS/Angular, and Sightly/HTL.

- Work closely with AEM Architects to ensure technical alignment and best practices.

- Optimize performance of AEM pages and ensure adherence to SEO and accessibility standards.

- Manage AEM environments, deployments, and configurations.

- Provide technical guidance and mentorship to junior developers.

- Collaborate with QA and DevOps teams on CI/CD automation and deployment pipelines.

- Troubleshoot and resolve AEM production issues.

Job Title: PySpark/Scala Developer

Functional Skills: Experience in Credit Risk/Regulatory risk domain

Technical Skills: Spark, PySpark, Python, Hive, Scala, MapReduce, Unix shell scripting

Good to Have Skills: Exposure to machine learning techniques

Job Description:

5+ years of experience developing, fine-tuning and implementing programs/applications using Python/PySpark/Scala on a Big Data/Hadoop platform.

Roles and Responsibilities:

a) Work with a leading bank’s risk management team on specific projects/requirements pertaining to risk models in consumer and wholesale banking

b) Enhance machine learning models using PySpark or Scala

c) Work with data scientists to build ML models based on business requirements, and follow the ML lifecycle to deploy them all the way to the production environment

d) Participate in feature engineering, model training, scoring and retraining

e) Architect data pipelines and automate data ingestion and model jobs

Skills and competencies:

Required:

· Strong analytical skills in conducting sophisticated statistical analysis using bureau/vendor data, customer performance data and macroeconomic data to solve business problems.

· Working experience in PySpark and Scala, developing code to validate and implement models in credit risk/banking.

· Experience with distributed systems such as Hadoop/MapReduce and Spark, streaming data processing, and cloud architecture.

· Familiarity with machine learning frameworks and libraries such as scikit-learn, SparkML, TensorFlow and PyTorch.

· Experience in systems integration, web services and batch processing.

· Experience migrating code to PySpark/Scala is a big plus.

· The ability to act as a liaison, conveying the business's information needs to IT and data constraints to the business, with equal attention to business strategy and IT strategy, business processes and workflow.

· Flexibility in approach and thought process.

· Willingness to learn and understand periodic changes in regulatory requirements issued by the Fed.


Desired Competencies (Technical/Behavioral Competency)

Must-Have

· Strong understanding of Kafka concepts, including topics, partitions, consumers, producers, and security.

· Experience with testing Kafka Connect, Kafka Streams, and other Kafka ecosystem components.

· API Testing Experience

· X-RAY and Test Automation Experience

· Expertise with Postman/SOAP

· Agile/JIRA/Confluence

· Strong familiarity with data formats such as XML, JSON, CSV and Avro.

· Strong hands-on experience with SQL and MongoDB.

· Continuous integration and automated testing.

· Working knowledge and experience of Git.

Good-to-Have

· Ability to troubleshoot Kafka-related issues; strong in Kafka client configuration and troubleshooting

Responsibility of / Expectations from the Role

1. Engage with the customer to understand requirements, provide technical solutions and offer value-added suggestions.

2. Help build and manage a team of Kafka and Java developers in the near future.


• Technical expertise in developing Master Data Management (MDM), extract, transform and load (ETL), and big data applications using existing and emerging technology platforms and cloud architecture

• Function as lead developer

• Support system analysis, technical/data design, development and unit testing, and oversee the end-to-end data solution.

• Act as technical SME for MDM applications, ETL, big data and cloud technologies

• Collaborate with IT teams to ensure technical designs and implementations account for requirements, standards, and best practices

• Tune the performance of end-to-end MDM, database, ETL and big data processes, or of the source/target database endpoints, as needed.

• Mentor and advise junior team members.

• Act as technical lead and solution lead for a team of onshore and offshore developers


Responsibility of / Expectations from the Role

Assist in the design and implementation of a Snowflake-based analytics solution (data lake and data warehouse) on Azure.

Extensive experience in designing and developing data integration solutions using ETL tools such as DBT.

Hands-on experience implementing cloud data warehouses using Snowflake and Azure Data Factory.

Solid MS SQL Server skills including reporting experience.

Work closely with product managers and engineers to design, implement, test, and continually improve scalable data solutions and services running on DBT & Snowflake cloud platforms.

Implement critical and non-critical system data integration and ingestion fixes for the data platform and environment.

Ensure root-cause resolution of identified problems.

Monitor and support the Data Solutions jobs and processes to meet the daily SLA.

Analyze the current analytics environment and make recommendations for appropriate data warehouse modernization and migration to the cloud.

Develop best practices for Snowflake deployment (using Azure DevOps or a similar CI/CD tool) and usage.

Follow best practices and standards around data governance, security and privacy.

Comfortable working in a fast-paced team environment coordinating multiple projects.

Effective software development life cycle management skills and experience with GitHub

Leverage tools like Fivetran, DBT, Snowflake and GitHub to drive ETL, data modeling and analytics.

Produce documentation for data transformation and data analytics.

Similar companies

About the company

To hire better and faster, companies need rich candidate data, smart software and sound human judgement.

Cutshort is using AI to combine all three of these to offer a 10x talent sourcing solution that is faster, better and cheaper.

We have 3 AI-powered offerings:

- Hire using our AI platform: Affordable annual subscriptions

- Get only sourcing: 3.5% of annual CTC when you hire

- Get full recruiting: 6.99% of annual CTC when you hire

Customers such as Fractal, Sprinto, Shiprocket, Highlevel, ThoughtWorks, Deepintent have built strong engineering teams with Cutshort.


About the company

About Company:

MyYogaTeacher is a fast-growing health tech startup with a mission to improve the physical and mental well-being of the entire planet. We are the first online marketplace to connect qualified Fitness and Yoga coaches from India with consumers worldwide to provide personalized 1-on-1 sessions via live video conference (app, web). We started in 2019 and have been showing tremendous traction with rave customer reviews.

- Over 200,000 happy customers

- Over 335,000 5-star reviews

- Over 150 highly qualified coaches on the platform

- 95% of sessions are completed with a 5-star rating

Headquartered in California, with development and operations based in Bangalore, we are dedicated to providing exceptional service and promoting the benefits of yoga and fitness coaching worldwide. To learn more about us, visit https://myyogateacher.com/aboutus

We put our employees' well-being at the forefront by providing competitive industry salaries and robust benefits packages. We're proud to foster an inclusive workplace and make a positive impact on the community. Additionally, we actively promote internal mobility and professional development at every stage of your career.

Read more on our mission and culture at https://myyogateacher.com/articles/company-mission-culture


About the company

Juntrax Solutions is a young company with a collaborative work culture, on a mission to bring efficient solutions to SMEs. We released the first version of our product in 2019 and have over 300 users using it daily. Our current focus is to bring out release 2 of the product with a redesign and a new tech stack. By joining us, you will be part of a great team building an integrated platform that helps SMEs manage their daily business globally.


About the company

Deep-tech startup focusing on autonomy and intelligence for unmanned systems: guidance and navigation, AI-ML, computer vision, information fusion, LLMs, generative AI and remote sensing.

Jobs

5

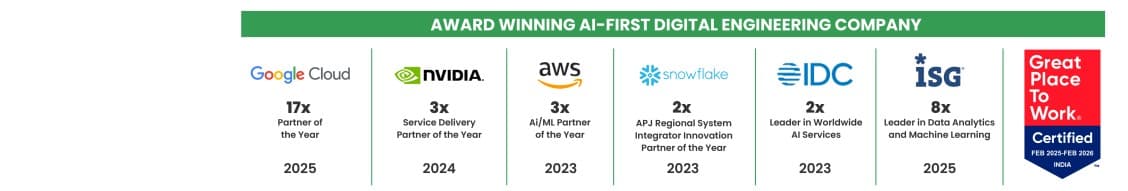

About the company

Quantiphi is an award-winning AI-first digital engineering company driven by the desire to reimagine and realize transformational opportunities at the heart of the business. Since its inception in 2013, Quantiphi has solved the toughest and most complex business problems by combining deep industry experience, disciplined cloud and data-engineering practices, and cutting-edge artificial intelligence research to achieve accelerated and quantifiable business results.


About the company

We help companies of all sizes from Startups to Unicorns in building and deploying scalable, future-ready solutions.


About the company

TheCodersHub is a startup that offers Android, web, software, mobile and iOS development services. While still in the early stages, we are focused on growth and innovation within the tech industry.


About the company

Peak Hire Solutions is a leading recruitment firm that provides its clients with innovative IT / non-IT recruitment solutions. We pride ourselves on our creativity, quality, and professionalism. Join our team and be a part of shaping the future of recruitment.


About the company

Designbyte Studio is a digital design and development studio focused on building clean, modern, and reliable web experiences. We work closely with startups, creators, and growing businesses to design and develop websites, user interfaces, and digital products that are simple, functional, and easy to use.

Our approach is practical and detail-driven. We believe good design should be clear, purposeful, and aligned with real business goals. From concept to launch, we focus on quality, performance, and long-term value.
