Sentienz

https://sentienz.com

About

Sentienz is a technology company specializing in next-generation IT engineering solutions. They focus on AI, Data Analytics, Distributed Computing, Product Development, Platform Development, and IoT. The company won the Startup Trailblazer category at LEAP India Startup Summit 2024 for its product Akiro.

Candid answers by the company

Sentienz is a technology company founded in 2016 that specializes in two core areas:

- Product:

  - Akiro - An AI-powered platform for IoT connectivity, focusing on smart meters, EV systems, and utilities

- Technology Services:

  - AI & Data Intelligence solutions

  - Product & Platform Development

  - DevOps & MLOps services

Think of Sentienz as a tech partner that helps businesses:

- Build and scale their technology products

- Implement AI and data analytics solutions

- Modernize their IT operations

They primarily serve industries like finance, healthcare, telecom, and smart cities, with their headquarters in Bangalore. The company has grown to 11-50 employees and recently won recognition as a Startup Trailblazer at LEAP India Startup Summit 2024.

Jobs at Sentienz

No jobs found

Similar companies

About the company

Deep tech startup focusing on autonomy and intelligence for unmanned systems. Focus areas: guidance and navigation, AI/ML, computer vision, information fusion, LLMs, generative AI, and remote sensing.

Jobs

5

About the company

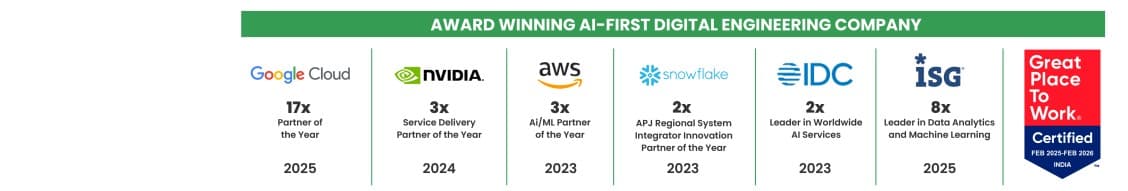

Quantiphi is an award-winning AI-first digital engineering company driven by the desire to reimagine and realize transformational opportunities at the heart of the business. Since its inception in 2013, Quantiphi has solved the toughest and most complex business problems by combining deep industry experience, disciplined cloud and data-engineering practices, and cutting-edge artificial intelligence research to achieve accelerated and quantifiable business results.

Jobs

4

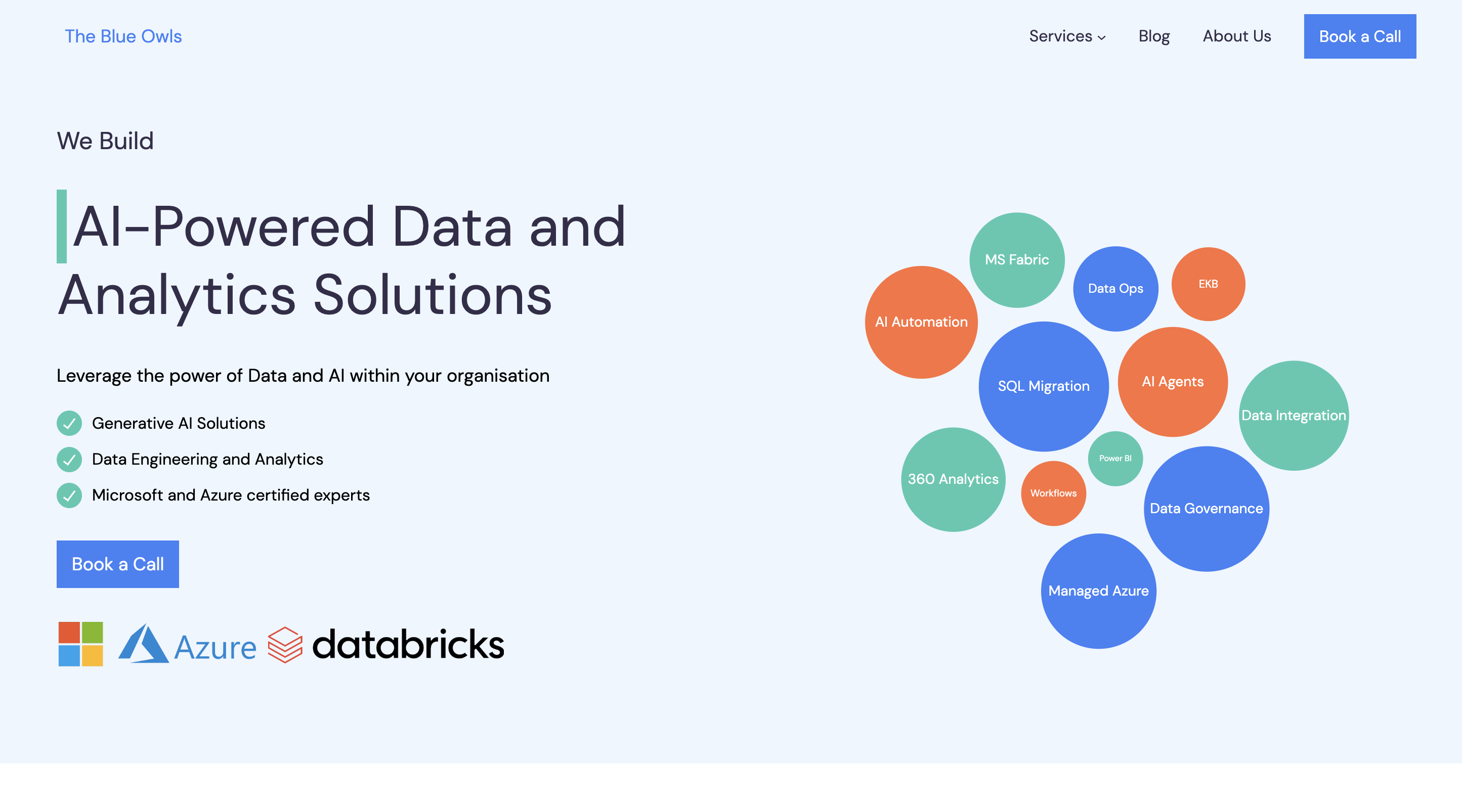

About the company

At TheBlueOwls, we are passionate about harnessing the power of data analytics and artificial intelligence to transform businesses and drive innovation. With a team of experts and cutting-edge technology, we help our clients unlock valuable insights from their data and leverage AI solutions to stay ahead in today's competitive landscape.

Our Founder

Our company was founded by Puran Ticku, an ex-Microsoft Architect with over 20 years of experience in the field of data and digital health. Puran Ticku has a deep understanding of the potential of data analytics and AI and has successfully led transformative solutions for numerous organizations.

Our Expertise

We specialize in providing comprehensive data analytics services, helping businesses make data-driven decisions and uncover hidden patterns and trends. With our advanced AI capabilities, we enable our clients to automate processes, enhance productivity, and gain a competitive edge in their industries.

Our Approach

At TheBlueOwls, we believe that the key to successful data analytics and AI implementation lies in a holistic approach. We work closely with our clients to understand their unique challenges and goals, and tailor our solutions to meet their specific needs. Our team of skilled professionals utilizes state-of-the-art technology and industry best practices to deliver exceptional results.

Our Commitment

We are committed to delivering the highest level of quality and value to our clients. We strive for excellence in every project we undertake, ensuring that our solutions are not only effective, but also scalable and sustainable. With our deep domain expertise and customer-centric approach, we are dedicated to driving success for our clients and helping them achieve their business objectives.

Contact us today to learn more about how TheBlueOwls can empower your organization with data analytics and AI solutions that drive growth and innovation.

Jobs

4

About the company

Peak Hire Solutions is a leading recruitment firm that provides clients with innovative IT and non-IT recruitment solutions. We pride ourselves on our creativity, quality, and professionalism. Join our team and be a part of shaping the future of recruitment.

Jobs

258

About the company

Crea8 (www.crea8.co.in) helps you find skincare from top brands that actually works for your skin concerns, lifestyle, and goals. We decode ingredients to help you understand what's in your product and guide you through the good, the bad, and the ugly. Our mission is to make personal care more personal, simple, and honest for everyone.

Jobs

1