Grand Hyper

https://grandhyper.com

Jobs at Grand Hyper

Similar companies

About the company

E2M Solutions works as a trusted white-label partner for digital agencies. We support agencies with consistent and reliable delivery through services such as website design, web development, eCommerce, SEO, AI SEO, PPC, AI automation, and content writing.

Founded on strong business ethics, we are an equal opportunity organization powered by 300+ experienced professionals, partnering with 400+ digital agencies across the US, UK, Canada, Europe, and Australia. At E2M, we value ownership, consistency, and people who are committed to doing meaningful work and growing together. If you’re someone who dreams big and has the gumption to make those dreams come true, E2M has a place for you.

Jobs

11

About the company

We are proud to offer the best online courses in a range of disciplines including healthcare, medicine, management, and research. Texila Educare Healthcare and Technology Enterprises Private Limited offers courses for professionals with over 20 years of experience teaching students all around the world.

Jobs

3

About the company

About Us

HighLevel is an AI-powered, all-in-one white-label sales & marketing platform that empowers agencies, entrepreneurs, and businesses to elevate their digital presence and drive growth. We are proud to support a global and growing community of over 2 million businesses, made up of agencies, consultants, and businesses of all sizes and industries. HighLevel empowers users with all the tools needed to capture, nurture, and convert new leads into repeat customers. As of mid-2025, HighLevel processes over 15 billion API hits and handles more than 2.5 billion message events every day. Our platform manages over 470 terabytes of data distributed across five databases, operates with a network of over 250 microservices, and supports over 1 million domain names.

Our People

With over 1,500 team members across 15+ countries, we operate in a global, remote-first environment. We are building more than software; we are building a global community rooted in creativity, collaboration, and impact. We take pride in cultivating a culture where innovation thrives, ideas are celebrated, and people come first, no matter where they call home.

Our Impact

As of mid-2025, our platform powers over 1.5 billion messages, helps generate over 200 million leads, and facilitates over 20 million conversations for the more than 2 million businesses we serve each month. Behind those numbers are real people growing their companies, connecting with customers, and making their mark, and we get to help make that happen.

EEO Statement:

At HighLevel, we value diversity. In fact, we understand it makes our organisation stronger. We are committed to inclusive hiring/promotion practices that evaluate skill sets, abilities, and qualifications without regard to any characteristic unrelated to performing the job at the highest level. Our objective is to foster an environment where really talented employees from all walks of life can be their true and whole selves, cherished and welcomed for their differences while providing excellent service to our clients and learning from one another along the way! Reasonable accommodations may be made to enable individuals with disabilities to perform essential functions.

Jobs

9

About the company

Jobs

15

About the company

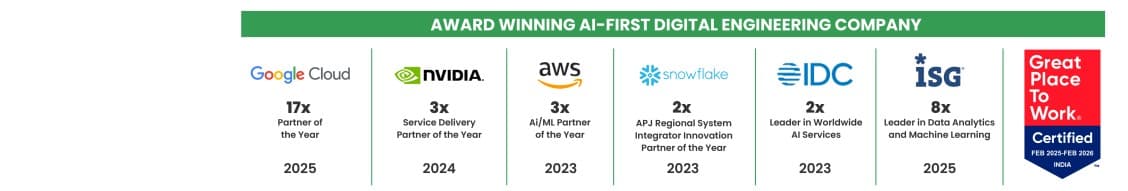

Quantiphi is an award-winning AI-first digital engineering company driven by the desire to reimagine and realize transformational opportunities at the heart of the business. Since its inception in 2013, Quantiphi has solved the toughest and most complex business problems by combining deep industry experience, disciplined cloud and data-engineering practices, and cutting-edge artificial intelligence research to achieve accelerated and quantifiable business results.

Jobs

4

About the company

Recruiting Bond is a global leader in Recruitment Process Outsourcing (RPO), Executive Search, Headhunting, Talent Mapping, and Workforce Consulting. Founded by Pavan B, we are on a mission to power businesses through transformative talent strategies that scale teams, accelerate innovation, and unlock human potential.

With a presence across 25+ industries—from IT, Healthcare, and FinTech to Gaming, BioTech, and Web3—we specialize in hiring that drives outcomes. Our domain expertise spans high-growth startups to Fortune 500 companies, delivering elite CXO and leadership talent, strategic workforce solutions, and inclusive hiring at scale.

We help businesses:

✔️ Hire the right leaders and builders

✔️ Scale globally with speed and precision

✔️ Build talent-first roadmaps from MVP to IPO

Whether you're launching, scaling, or transforming—Recruiting Bond is your strategic partner in talent.

🔹 Industries: Technology | Healthcare | FinTech | Retail | Manufacturing | EdTech | Crypto | Real Estate | Web3 | Logistics | Energy & more

🔹 Services: Executive Hiring | RPO | Talent Strategy | Workforce Design | Startup Consulting | Diversity Recruitment

📨 Let’s build the future—together: https://recruitingbond.c

Jobs

14

About the company

Jobs

4

About the company

Jobs

5

About the company

Shopflo is an enterprise technology company providing a specialized checkout infrastructure platform designed to boost conversion rates for direct-to-consumer (D2C) e-commerce brands. Founded in 2021, it focuses on enhancing the online buying experience through fast, customizable, and secure checkout pages that reduce cart abandonment.

We aim to supercharge conversions for e-commerce websites at checkout by improving user experience and helping build stronger intent and trust during the purchase.

Problem statement:

(1) There is a ~70% drop-off at checkout for most independent e-commerce retailers (outside of large marketplaces).

(2) E-commerce cart platforms allow minimal flexibility on checkout, and the experience is still the same as it was a decade ago.

(3) Meanwhile, user experiences are defined by new consumer platforms such as Swiggy, Amazon, etc.

There is a fundamental unbundling of monolithic shopping cart platforms globally for mid-market and enterprise customers, who are moving towards headless (read: modular) architecture.

Shopflo aims to be the global default for checkout experiences.

Jobs

3

About the company

Jobs

1