Codalyze Technologies

https://codalyze.com

The recruiter has not been active on this job recently. You may apply but please expect a delayed response.

Your mission is to help lead the team toward creating solutions that improve the way our business is run. Your knowledge of design, development, coding, testing, and application programming will help your team raise their game, meeting your standards while satisfying both business and functional requirements. Your expertise across technology domains will be counted on to set strategic direction and to solve complex, mission-critical problems, both internally and externally. Your drive to embrace leading-edge technologies and methodologies will inspire your team to follow suit.

Responsibilities and Duties:

- As a Data Engineer, you will be responsible for developing data pipelines for numerous applications that handle all kinds of data: structured, semi-structured, and unstructured. Big data knowledge, especially of Spark and Hive, is highly preferred.

- Work as part of a team and provide proactive technical oversight, advising development teams to foster reuse and to design data/analytical solutions for scale, stability, and operational efficiency.

Education level:

- Bachelor's degree in Computer Science or equivalent

Experience:

- Minimum 3 years of relevant, hands-on experience in end-to-end software development on production-grade projects

- Expertise in application, data, and infrastructure architecture disciplines

- Expertise in designing data integrations using ETL and other data integration patterns

- Advanced knowledge of architecture, design and business processes

Proficiency in:

- Modern programming languages such as Java, Python, and Scala

- Big data technologies such as Hadoop, Spark, Hive, and Kafka

- Writing reasonably well-optimized SQL queries

- Orchestration and deployment tools, such as Airflow for orchestration and Jenkins for CI/CD (optional)

- Designing and developing integration solutions with Hadoop/HDFS, real-time systems, data warehouses, and analytics solutions

- Knowledge of system development lifecycle methodologies, such as Waterfall and Agile.

- An understanding of data architecture and modeling practices and concepts, including entity-relationship diagrams, normalization, abstraction, denormalization, dimensional modeling, and metadata modeling practices.

- Experience generating physical data models and the associated DDL from logical data models.

- Experience developing data models for operational and transactional systems and for operational reporting, including developing or interfacing with data analysis, data mapping, and data rationalization artifacts.

- Experience enforcing data modeling standards and procedures.

- Knowledge of web technologies, application programming languages, OLTP/OLAP technologies, data strategy disciplines, relational databases, data warehouse development and Big Data solutions.

- Ability to work collaboratively in teams and develop meaningful relationships to achieve common goals

Skills:

Must know:

- Core big-data concepts

- Spark - PySpark/Scala

- At least one data integration tool, such as Pentaho, NiFi, or SSIS

- Handling of various file formats

- Cloud platform - AWS/Azure/GCP

- Orchestration tool - Airflow

The recruiter has not been active on this job recently. You may apply but please expect a delayed response.

Your mission is to help lead the team toward creating solutions that improve the way our business is run. Your knowledge of design, development, coding, testing, and application programming will help your team raise their game, meeting your standards while satisfying both business and functional requirements. Your expertise across technology domains will be counted on to set strategic direction and to solve complex, mission-critical problems, both internally and externally. Your drive to embrace leading-edge technologies and methodologies will inspire your team to follow suit.

Responsibilities and Duties:

- As a Data Engineer, you will be responsible for developing data pipelines for numerous applications that handle all kinds of data: structured, semi-structured, and unstructured. Big data knowledge, especially of Spark and Hive, is highly preferred.

- Work as part of a team and provide proactive technical oversight, advising development teams to foster reuse and to design data/analytical solutions for scale, stability, and operational efficiency.

Education level:

- Bachelor's degree in Computer Science or equivalent

Experience:

- Minimum 5 years of relevant, hands-on experience in end-to-end software development on production-grade projects

- Expertise in application, data, and infrastructure architecture disciplines

- Expertise in designing data integrations using ETL and other data integration patterns

- Advanced knowledge of architecture, design and business processes

Proficiency in:

- Modern programming languages such as Java, Python, and Scala

- Big data technologies such as Hadoop, Spark, Hive, and Kafka

- Writing reasonably well-optimized SQL queries

- Orchestration and deployment tools, such as Airflow for orchestration and Jenkins for CI/CD (optional)

- Designing and developing integration solutions with Hadoop/HDFS, real-time systems, data warehouses, and analytics solutions

- Knowledge of system development lifecycle methodologies, such as Waterfall and Agile.

- An understanding of data architecture and modeling practices and concepts, including entity-relationship diagrams, normalization, abstraction, denormalization, dimensional modeling, and metadata modeling practices.

- Experience generating physical data models and the associated DDL from logical data models.

- Experience developing data models for operational and transactional systems and for operational reporting, including developing or interfacing with data analysis, data mapping, and data rationalization artifacts.

- Experience enforcing data modeling standards and procedures.

- Knowledge of web technologies, application programming languages, OLTP/OLAP technologies, data strategy disciplines, relational databases, data warehouse development and Big Data solutions.

- Ability to work collaboratively in teams and develop meaningful relationships to achieve common goals

Skills:

Must know:

- Core big-data concepts

- Spark - PySpark/Scala

- At least one data integration tool, such as Pentaho, NiFi, or SSIS

- Handling of various file formats

- Cloud platform - AWS/Azure/GCP

- Orchestration tool - Airflow


Similar companies

About the company

Founded in 2008, Ignite Solutions is a cutting-edge software development company that transforms innovative ideas into powerful digital products. Based in Pune, with a team of 40+ experts, we specialize in UI/UX design, custom software development, and AI/ML solutions for both startups and established businesses.

Our comprehensive service portfolio ranges from 2-week rapid prototypes to full-scale product development, leveraging modern tech stacks like React, Android, Flutter, Python, and cloud technologies. Our agile approach enables quick iterations and consistent product releases every two weeks, ensuring projects stay aligned with market needs.

We stand out for delivering high-impact results across diverse industries, from healthcare platforms to eCommerce solutions. Our proven track record includes successful partnerships with companies like Parallel Health, OptIn, and Tiivra, where we've developed scalable, user-centric applications that drive business growth.

Work With Us

- We help ambitious companies with UX design, technology strategy, and software development.

- We believe in small teams. Small teams and smart individuals can make things happen.

- We have an open, honest culture. As part of our team, you will get to contribute to our ideas, our plans, and our success.

- We treat you with respect and trust you will do the same to your team members.

- We hire carefully, looking for attitude along with aptitude.

- We like to have fun so bring your sense of humour with you.


About the company

At Zobaze, we're not just developing apps; we're crafting the future of SMEs worldwide. Our quest to become the premier business utility app drives us to deliver revolutionary solutions like Zobaze POS and Restokeep. These tools don't just manage business—they propel it, enabling owners to monitor everything from sales to staff performance with unparalleled ease and efficiency.

With a remarkable footprint spanning 192 countries and over 2.5 million downloads, our apps have already generated 140+ million receipts, evidencing our substantial global impact.

As a 100% bootstrapped company, our journey from inception to cash-positive operations exemplifies our dedication and resilience. We stand as a beacon of innovation and sustainability in the SME sector.

🤑 Funding

We are proud to be cash flow positive and have not yet tapped into VC funding, setting us apart from 99% of startups. This financial stability allows us to invest in our team's growth through a robust ESOP pool.

Securing funding will further accelerate our growth. Joining our team now offers a unique opportunity to create significant value, especially before our fundraising efforts take off.

⚠️ Before you apply

If you're looking for a standard 9-5 job, we are not a match. However, if you seek an exceptional learning curve, exposure to multiple verticals, and the opportunity to help establish processes that will propel our growth, we encourage you to apply. Our journey is dynamic, requiring flexibility and adaptability to changing needs.


About the company

Founded in 2016, Oneture is a cloud-first, full-service digital solutions company that helps clients harness the power of digital technologies and data to drive transformations and turn ideas into business realities. Our team is full of curious, full-stack, innovative thought leaders who are dedicated to providing outstanding customer experiences and building authentic relationships.

We are compelled by our core values to drive transformational results, from ideas to reality, for clients across all company sizes, geographies, and industries. The Oneture team delivers full-lifecycle solutions, from ideation, project inception, and planning through deployment to ongoing support and maintenance. Our core competencies and technical expertise include cloud-powered product engineering, big data, and AI/ML. Our deep commitment to value creation for our clients and partners, and our “Startups-like agility with Enterprises-like maturity” philosophy, have helped us establish long-term relationships with our clients and enabled us to build and manage mission-critical platforms for them.


About the company

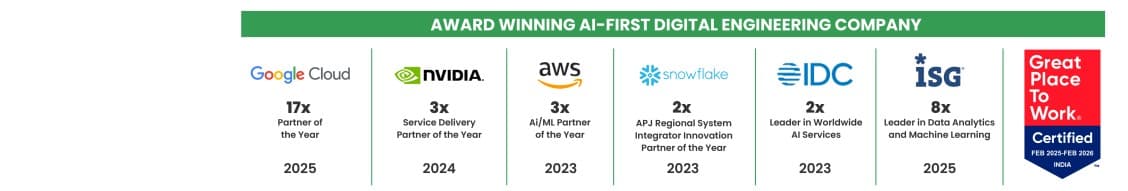

Quantiphi is an award-winning AI-first digital engineering company driven by the desire to reimagine and realize transformational opportunities at the heart of the business. Since its inception in 2013, Quantiphi has solved the toughest and most complex business problems by combining deep industry experience, disciplined cloud and data-engineering practices, and cutting-edge artificial intelligence research to achieve accelerated and quantifiable business results.


About the company

At Xenspire Group, we are on a mission to revolutionize the hiring and talent industry through science, technology, and a deep understanding of our clients' needs.

Our global talent solutions are designed to foster growth and innovation across multiple sectors, making us a trusted partner for enterprises and individuals alike.

We prioritize the personal touch in every interaction, ensuring a smooth, transparent journey for job seekers while empowering companies to find the right talent quickly. By revolutionizing the way we connect, we are building a future where hiring is effortless, intuitive, and focused on mutual success.

Stay updated with our latest insights on #workforceoptimization, #talentsolutions, #innovation, #salesops, #revops, #socialresponsibility, and the #futureofwork.

Together, let's build a brighter future!


About the company

Shopflo is an enterprise technology company providing a specialized checkout infrastructure platform designed to boost conversion rates for direct-to-consumer (D2C) e-commerce brands. Founded in 2021, it focuses on enhancing the online buying experience through fast, customizable, and secure checkout pages that reduce cart abandonment.

We aim to supercharge conversions for e-commerce websites at checkout by improving user experience, helping build stronger intent and trust during the purchase.

Problem statement:

(1) There is a ~70% drop-off at checkout for most independent e-commerce retailers (outside of large marketplaces).

(2) E-commerce cart platforms allow minimal flexibility at checkout, and their checkout experience is still the same as it was a decade ago.

(3) Meanwhile, user experience benchmarks are being set by new consumer platforms such as Swiggy and Amazon.

There is a fundamental unbundling of monolithic shopping cart platforms globally, as mid-market and enterprise customers move toward headless (i.e., modular) architecture.

Shopflo aims to be the global default for checkout experiences.


About the company

Building the most advanced ad blocker on the planet!🌎

Loved by 3,50,000+ users on Chrome!
