Byteware Cloud PVT LTD

https://bytewareinc.com

Jobs at Byteware Cloud PVT LTD


Job Overview:

As a Lead ETL Developer for a very large client of Paradigm, you are in charge of the design and creation of data warehouse functions such as extraction, transformation, and loading of data, and you are expected to have specialized working knowledge of cloud platforms, especially Snowflake. In this role, you’ll be part of Paradigm’s Digital Solutions group, where we are looking for someone with the technical expertise to build and maintain sustainable ETL solutions around data modeling and data profiling that meet the client's identified needs and expectations.

Delivery Responsibilities

- Lead the technical planning, architecture, estimation, development, and testing of ETL solutions

- Knowledge and experience in most of the following architectural styles: Layered Architectures, Transactional applications, PaaS-based architectures, and SaaS-based applications; Experience developing ETL-based Cloud PaaS and SaaS solutions.

- Create data models that are aligned with the client's requirements.

- Design, develop, and support ETL mappings, drawing on strong SQL skills and experience in developing ETL specifications

- Create ELT pipelines, data model updates, and orchestration using DBT / Streams / Tasks / Astronomer, including testing

- Focus on ETL aspects including performance, scalability, reliability, monitoring, and other operational concerns of data warehouse solutions

- Design reusable assets, components, standards, frameworks, and processes to support and facilitate end-to-end ETL solutions

- Experience gathering requirements and defining the strategy for third-party data ingestion from sources such as SAP HANA and Oracle

- Understanding of and experience with most of the following architectural styles: Layered Architectures, Transactional applications, PaaS-based architectures, and SaaS-based applications; experience designing ETL-based Cloud PaaS and SaaS solutions.

Required Qualifications

- Expert hands-on experience in the following:

- Technologies such as Python, Teradata, MySQL, SQL Server, RDBMS, Apache Airflow, AWS S3, AWS data lake, Unix scripting, AWS CloudFormation, DevOps, GitHub

- Demonstrated use of Airflow orchestration best practices, such as creating DAGs, and hands-on knowledge of Python libraries including pandas, NumPy, and Boto3, along with DataFrames, connectors to different databases, and APIs

- Data modelling, Master and Operational Data Stores, data ingestion and distribution patterns, ETL / ELT technologies, relational and non-relational DBs, DB optimization patterns

- Develop virtual warehouses using Snowflake for data-sharing needs for both internal and external customers.

- Create Snowflake data-sharing capabilities that will create a marketplace for sharing files, datasets, and other types of data in real-time and batch frequencies

- At least 8 years of experience in ETL/data development

- Working knowledge of Fact / Dimensional data models and AWS Cloud

- Strong experience in creating technical design documents, source-to-target mappings, and test cases/results.

- Understand the security requirements and apply RBAC, PBAC, ABAC policies on the data.

- Build data pipelines in Snowflake leveraging Data Lake (S3/Blob), Stages, Streams, Tasks, Snowpipe, Time Travel, and other critical capabilities within Snowflake

- Ability to collaborate, influence, and communicate across multiple stakeholders and levels of leadership, speaking at the appropriate level of detail to both business executives and technology teams

- Excellent communication skills with a demonstrated ability to engage, influence, and encourage partners and stakeholders to drive collaboration and alignment

- High degree of organization, individual initiative, results and solution orientation, personal accountability, and resiliency

- Demonstrated learning agility, ability to make decisions quickly and with the highest level of integrity

- Demonstrable experience of driving meaningful improvements in business value through data management and strategy

- Must have a positive, collaborative leadership style with a colleague- and customer-first attitude

- Should be a self-starter and team player, capable of working with a team of architects, co-developers, and business analysts

Preferred Qualifications:

- Experience with Azure Cloud, DevOps implementation

- Ability to work collaboratively within a team, mentoring and training junior team members

- Position requires expert knowledge across multiple platforms, data ingestion patterns, processes, data/domain models, and architectures.

- Candidates must demonstrate an understanding of the following disciplines: enterprise architecture, business architecture, information architecture, application architecture, and integration architecture.

- Ability to focus on business solutions and understand how to achieve them according to the given timeframes and resources.

- Recognized as an expert/thought leader. Anticipates and solves highly complex problems with a broad impact on a business area.

- Experience with Agile Methodology / Scaled Agile Framework (SAFe).

- Outstanding oral and written communication skills including formal presentations for all levels of management combined with strong collaboration/influencing.

Preferred Education/Skills:

- Prefer Master’s degree

- Bachelor’s Degree in Computer Science with a minimum of 8 years of relevant experience, or equivalent.

Similar companies

About the company

Jobs

2

About the company

Juntrax Solutions is a young company with a collaborative work culture, on a mission to bring efficient solutions to SMEs. We have release the first version of our product in 2019 and have over 300 users using it daily. Our current focus is to bring out release 2 of the product with a new redesign and new tech stack. Joining us you will be part of a great team building a integrated platform for SMEs to help them manage their daily business globally.

Jobs

2

About the company

We are a fast growing virtual & hybrid events and engagement platform. Gevme has already powered hundreds of thousands of events around the world for clients like Facebook, Netflix, Starbucks, Forbes, MasterCard, Citibank, Google, Singapore Government etc.

We are a SAAS product company with a strong engineering and family culture; we are always looking for new ways to enhance the event experience and empower efficient event management. We’re on a mission to groom the next generation of event technology thought leaders as we grow.

Join us if you want to become part of a vibrant and fast moving product company that's on a mission to connect people around the world through events.

Jobs

5

About the company

Deep Tech Startup Focusing on Autonomy and Intelligence for Unmanned Systems. Guidance and Navigation, AI-ML, Computer Vision, Information Fusion, LLMs, Generative AI, Remote Sensing

Jobs

5

About the company

Jobs

5

About the company

Jobs

54

About the company

Peak Hire Solutions is a leading Recruitment Firm that provides our clients with innovative IT / Non-IT Recruitment Solutions. We pride ourselves on our creativity, quality, and professionalism. Join our team and be a part of shaping the future of Recruitment.

Jobs

257

About the company

Jobs

2

About the company

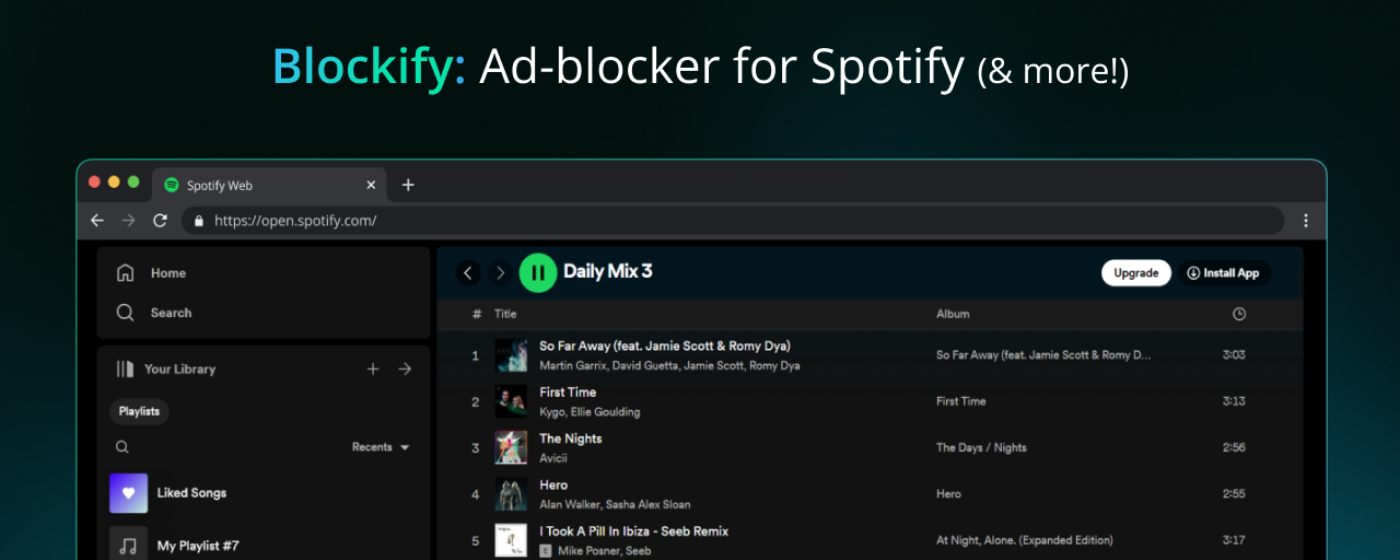

Building the most advanced ad blocker on the planet!🌎

Loved by 3,50,000+ users on Chrome!

Jobs

1

About the company

Jobs

1